Hadoop MapReduce vs. Apache Spark: Who Wins the Battle?

Can Spark mount a challenge to Hadoop and become the top big data analytics tool? Hadoop MapReduce vs. Apache Spark: who looks like the big winner in the big data world?

Confused over which framework to choose for big data processing: Hadoop MapReduce or Apache Spark? This blog helps you understand the critical differences between these two popular big data frameworks.


Hadoop and Spark are popular Apache projects in the big data ecosystem. Apache Spark is an improvement on the original Hadoop MapReduce component of the Hadoop big data ecosystem. There is great excitement around Apache Spark because it provides fundamental advantages for interactive querying of in-memory data sets and for multi-pass iterative machine learning algorithms. However, there is a hot debate on whether Spark can mount a challenge to Apache Hadoop by replacing it and becoming the top big data analytics tool. What follows is a detailed comparison of Spark and Hadoop that helps users understand why Spark is faster than Hadoop.

Table of Contents

- Hadoop vs. Spark Comparison in a Nutshell

- What is Apache Spark - The User-Friendly Face of Hadoop

- Hadoop MapReduce vs. Spark Differences

- Hadoop vs. Spark vs. Storm

- Hadoop MapReduce vs. Spark Benefits: Advantages of Spark over Hadoop

- Why is Spark considered “in memory” over Hadoop?

- Spark vs. Hadoop MapReduce Comparison - The Bottom Line

- Will Apache Spark Eliminate Hadoop MapReduce?

- Confused? Hadoop vs. Spark - Which One is Better?

- FAQs on Hadoop vs. Spark

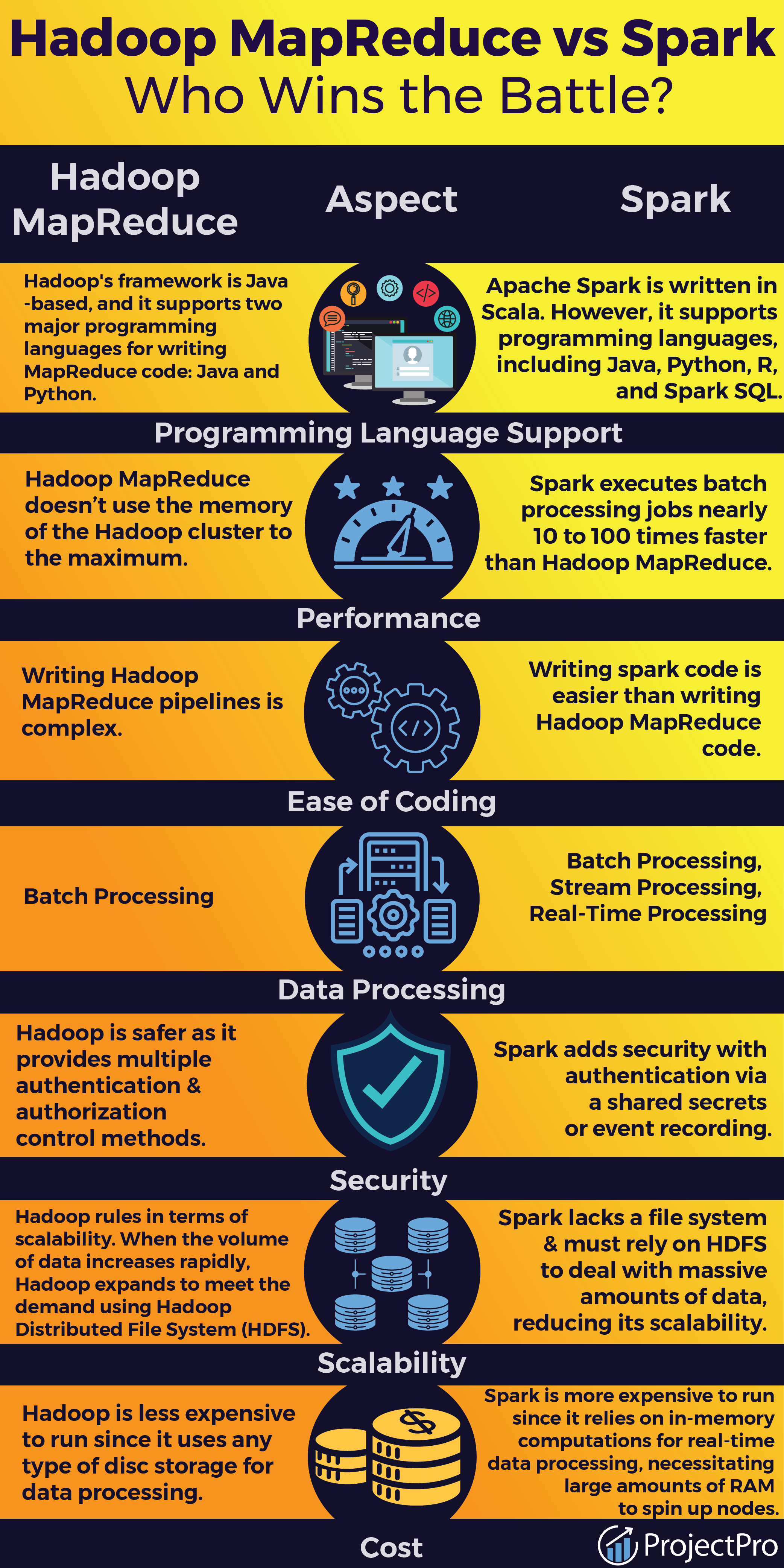

Hadoop MapReduce vs. Apache Spark Comparison in a Nutshell

| Apache Spark | Hadoop MapReduce |
|---|---|
| Easy to program and does not require additional abstractions. | Difficult to program and requires abstractions. |
| Programmers can perform streaming, batch processing, and machine learning, all in the same cluster. | Used mainly for generating reports that answer historical queries. |
| Has a built-in interactive mode. | No built-in interactive mode, apart from tools like Pig and Hive. |
| Can execute jobs 10 to 100 times faster than Hadoop MapReduce. | Does not make full use of the memory of the Hadoop cluster. |
| Programmers can process data in real time through Spark Streaming. | Can only process batches of stored data. |

Big data workloads span several approaches, including batch processing, linear data processing, interactive queries, and ad hoc queries, and Apache Hadoop has long been the default choice for big data processing. However, every Hadoop user knows that the Hadoop MapReduce framework is meant primarily for batch processing. Thus, using Hadoop MapReduce for machine learning, ad hoc data exploration, and similar workloads is not a good fit.

Big data vendors' search for an ideal solution to this challenging problem paved the way for a popular alternative: Apache Spark. Spark makes development more pleasant and has a faster execution engine than MapReduce, while using the same storage layer, Hadoop HDFS, for processing huge data sets.

Apache Spark has attracted a great deal of attention and is regarded as the most active project in the Hadoop ecosystem.


What is Apache Spark - The User-Friendly Face of Hadoop

Spark is a fast cluster computing system that originated in UC Berkeley's AMPLab and was built with contributions from nearly 250 developers from 50 companies, with the goal of making data analytics faster to run and easier to write.

Apache Spark is an open-source data processing engine built for fast performance and large-scale data analysis. It uses RAM for caching and data processing. Spark follows a general execution model that supports in-memory computing and the optimization of arbitrary operator graphs, so querying data becomes much faster than with disk-based engines like MapReduce.

Apache Spark consists of five main components: Spark Core, Spark SQL (for working with structured data), Spark Streaming (for live data streams), MLlib (the machine learning library), and GraphX (for graph processing). This helps Spark handle a variety of big data workloads, such as batch processing, real-time data processing, graph computation, machine learning, and interactive queries. In addition, Apache Spark has a well-designed application programming interface built around parallel collections with methods such as groupByKey, map, and reduce, so that programming a cluster feels like programming locally. With Apache Spark, you can write collection-oriented algorithms in Scala, a functional programming language.
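The collection-oriented style described above can be illustrated with a small plain-Python sketch. `ToyParallelCollection` and its methods are hypothetical stand-ins, not Spark's API: they only mimic how `map`, `groupByKey` (here `group_by_key`), and `reduce` work partition by partition. In real PySpark, the equivalent calls are made on an RDD created with `SparkContext.parallelize`.

```python
from collections import defaultdict
from functools import reduce as fold


class ToyParallelCollection:
    """Hypothetical stand-in for a partitioned collection such as an RDD.

    The data lives in several partitions, and every method works partition by
    partition, loosely mimicking how Spark spreads work across executors.
    """

    def __init__(self, partitions):
        self.partitions = [list(p) for p in partitions]

    @classmethod
    def parallelize(cls, items, num_partitions=2):
        # Deal the items round-robin into num_partitions lists.
        parts = [[] for _ in range(num_partitions)]
        for i, item in enumerate(items):
            parts[i % num_partitions].append(item)
        return cls(parts)

    def map(self, fn):
        # Applied independently inside each partition; no data moves.
        return ToyParallelCollection([[fn(x) for x in p] for p in self.partitions])

    def reduce(self, fn):
        # Reduce each non-empty partition locally, then combine the partial results.
        partials = [fold(fn, p) for p in self.partitions if p]
        return fold(fn, partials)

    def group_by_key(self):
        # Collect every value for each key, whichever partition it sits in.
        groups = defaultdict(list)
        for p in self.partitions:
            for key, value in p:
                groups[key].append(value)
        return dict(groups)


nums = ToyParallelCollection.parallelize(range(1, 6), num_partitions=2)
squares_sum = nums.map(lambda x: x * x).reduce(lambda a, b: a + b)
grouped = ToyParallelCollection.parallelize([("a", 1), ("b", 2), ("a", 3)]).group_by_key()
print(squares_sum, grouped)  # 55 {'a': [1, 3], 'b': [2]}
```

As in Spark, `reduce` combines values inside each partition first and then merges the partial results, which is why the function passed to it should be associative.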


Why was Apache Spark developed?

MapReduce was conceived at Google and successfully implemented, and Apache Hadoop's MapReduce became a famous and widely used execution engine. Many existing applications already know how to decompose their work into a sequence of MapReduce jobs, and all of these applications will have to keep running without any change.

However, users have consistently complained about the high latency of Hadoop MapReduce, saying that batch-mode response times are painful for applications that need to analyze and process data quickly.

This paved the way for Spark, a successor system that is more powerful and flexible than Hadoop MapReduce. Even though it might not be possible for all future or existing applications to abandon Hadoop MapReduce completely, most future applications can use a general-purpose execution engine such as Spark, which comes with many more innovative features, to accomplish much more than is possible with Hadoop MapReduce.


Hadoop MapReduce vs. Apache Spark Differences

Before looking at the critical differences between Hadoop and Spark, let us understand why Apache Spark is faster than MapReduce.

Apache Spark vs. Hadoop MapReduce - Why Is Spark Faster than MapReduce?

Apache Spark is an open-source standalone project that was developed to work together with the Hadoop Distributed File System (HDFS). Apache Spark now has a vast community of vocal contributors and users, partly because programming with Spark in Scala is much easier than with the Hadoop MapReduce framework, and Spark jobs run faster both on disk and in memory.

Thus, Spark is a good fit for future big data applications that require low-latency queries, iterative computation, and real-time data processing.

Spark has many advantages over the Hadoop MapReduce framework, both in the wide range of computing workloads it can handle and in the speed at which it executes batch processing jobs.

Differences between Hadoop MapReduce and Apache Spark in Tabular Form

Hadoop vs. Spark - Performance

Spark has been reported to execute batch processing jobs roughly 10 to 100 times faster than the Hadoop MapReduce framework, largely by cutting down the number of reads and writes to disk.

In MapReduce, each job consists of Map and Reduce tasks followed by a synchronization barrier, and the data must be persisted to disk. The MapReduce framework was designed this way so that jobs can be recovered in case of failure. The drawback, however, is that it does not make full use of the memory of the Hadoop cluster.

With Spark, however, RDDs (Resilient Distributed Datasets) let you keep data in memory and write it to disk only when required, and Spark needs stage boundaries only where data has to be shuffled, instead of a disk-backed barrier after every job. Thus, Spark's general execution engine is much faster than Hadoop MapReduce when the data fits in memory.
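The difference in disk traffic for an iterative job can be sketched with a toy model. Everything here is hypothetical: `CountingStore` stands in for HDFS, `step` is a made-up iteration, and the model assumes each MapReduce iteration runs as a separate job that reads its input from, and writes its output to, the store, while the Spark-style version keeps the working set in memory between iterations.

```python
class CountingStore:
    """Hypothetical stand-in for HDFS that counts full-dataset reads and writes."""

    def __init__(self, data):
        self.data = list(data)
        self.reads = 0
        self.writes = 0

    def read(self):
        self.reads += 1
        return list(self.data)

    def write(self, data):
        self.writes += 1
        self.data = list(data)


def step(values):
    # One iteration of a made-up iterative algorithm: halve every value.
    return [v / 2 for v in values]


def run_mapreduce_style(store, iterations):
    # Each iteration is a separate job: read input from disk, write output back.
    for _ in range(iterations):
        store.write(step(store.read()))
    return store.data


def run_spark_style(store, iterations):
    # Read once, keep the working set in memory across iterations, write once.
    values = store.read()
    for _ in range(iterations):
        values = step(values)
    store.write(values)
    return values


mr = CountingStore([8, 16])
sp = CountingStore([8, 16])
print(run_mapreduce_style(mr, 3), mr.reads, mr.writes)  # [1.0, 2.0] 3 3
print(run_spark_style(sp, 3), sp.reads, sp.writes)      # [1.0, 2.0] 1 1
```

Both versions compute the same result; only the number of full passes over storage differs, and that gap grows with the number of iterations.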

Apache Spark vs. Hadoop - Easy Management

Spark makes it easy for organizations to simplify their data processing infrastructure. With Spark, it is possible to perform streaming, batch processing, and machine learning in the same cluster.

Many organizations use Hadoop MapReduce to generate reports that answer historical queries and then deploy a separate system for stream processing to get key metrics in real time. As a result, they must manage and maintain separate systems and develop applications for both computational models.

With Spark, however, these complexities can be eliminated, because stream and batch processing can run on the same system, which simplifies the application's development, deployment, and maintenance. Spark can also run different workloads in the same process, which makes the interactions between them easier to manage and secure, something that is harder to achieve with MapReduce.

Spark vs. Hadoop MapReduce - Real-Time Methods to Process Streams

With Hadoop MapReduce, you can only process batches of stored data, but with Spark, it is possible to process data in real time through Spark Streaming.

With Spark Streaming, data can be passed through various functions as it arrives, for instance, to perform analytics as soon as the data is collected.

Spark can handle both batch and real-time processing because of its high performance. Spark also supports graph processing in addition to data processing, and it comes with the MLlib machine learning library.

Apache Mahout, a machine-learning library for MapReduce, has been replaced by Spark.

Apache Hadoop vs. Spark - Caching

Spark achieves lower-latency computations by caching partial results in the memory of its distributed workers, unlike MapReduce, which is disk-oriented.

Hadoop MapReduce writes data to local disk between calculations, which slows down processing, whereas Spark keeps data in RAM and reduces the number of read/write cycles to disk, resulting in faster processing. Thus, Spark outperforms Hadoop MapReduce when it comes to processing speed.

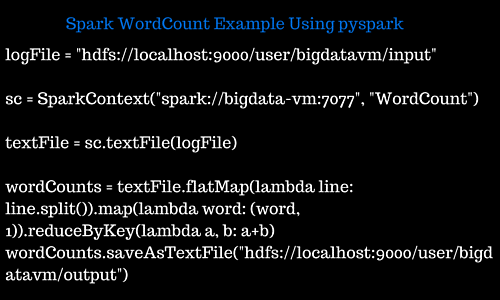

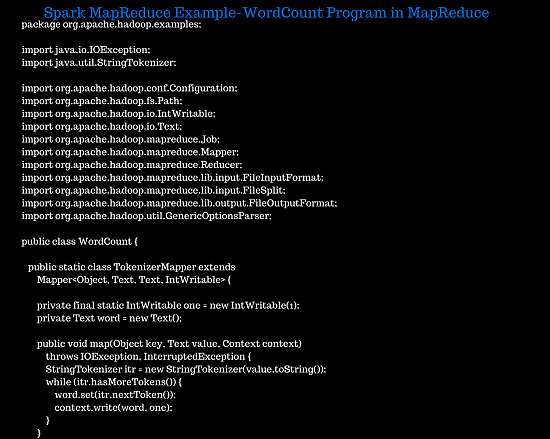

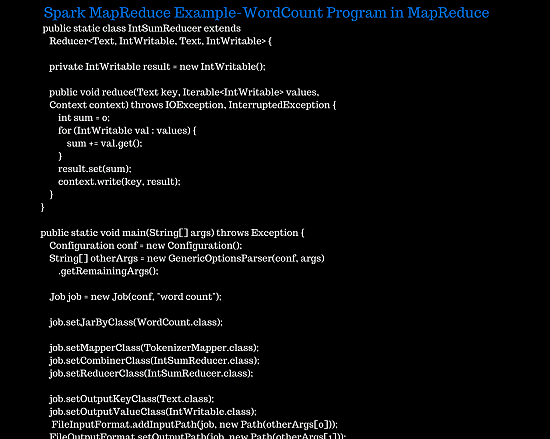

Apache Spark vs. Hadoop MapReduce - Ease of Use

Spark code is generally much more compact than equivalent Hadoop MapReduce code. Here is a Spark MapReduce example: the images below show the word count program in Spark and in Hadoop MapReduce. Looking at them, it is evident that the Hadoop MapReduce code is far more verbose.

Spark MapReduce Example: Word Count Program in Spark

Spark MapReduce Example: Word Count Program in Hadoop MapReduce
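To make the phases behind a word count concrete, here is a minimal plain-Python sketch of the map, shuffle, and reduce steps that both frameworks perform. The function names are hypothetical. In PySpark, the same pipeline is usually written as a short chain such as `textFile(...).flatMap(...).map(...).reduceByKey(...)`, whereas Hadoop MapReduce typically needs separate Mapper and Reducer classes plus a driver written in Java.

```python
from collections import defaultdict


def map_phase(lines):
    # Mapper: emit a (word, 1) pair for every word in every line.
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle_phase(pairs):
    # Shuffle: group all emitted counts by word, as the framework does between phases.
    grouped = defaultdict(list)
    for word, count in pairs:
        grouped[word].append(count)
    return grouped


def reduce_phase(grouped):
    # Reducer: sum the counts collected for each word.
    return {word: sum(counts) for word, counts in grouped.items()}


def word_count(lines):
    return reduce_phase(shuffle_phase(map_phase(lines)))


counts = word_count(["Spark and Hadoop", "Spark is fast"])
print(counts)  # {'spark': 2, 'and': 1, 'hadoop': 1, 'is': 1, 'fast': 1}
```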

Hadoop MapReduce vs. Apache Spark - Fault Tolerance

Apache Hadoop and Apache Spark both provide a good level of fault tolerance. However, the approach used by these two systems to ensure fault tolerance varies significantly.

Hadoop achieves fault tolerance through its mode of operation. A Hadoop cluster consists of many machines, known as nodes, and data is replicated across multiple nodes in the cluster. If one of the nodes fails, either because of a system failure or a planned shutdown for maintenance, the system can continue by fetching any missing data from other nodes that hold replicas. A master node keeps track of the status of all the slave nodes, which must periodically send it messages known as heartbeats. If the master node does not receive heartbeats from a particular slave node for more than 10 minutes, the data blocks stored on that node are re-replicated from the remaining copies onto other slave nodes. The master node, however, is a single point of failure for the cluster unless NameNode high availability is configured: if it fails, data is not lost, but the cluster will be down for a while.
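The heartbeat-based failure detection described above can be sketched as follows. `ToyMasterNode` is hypothetical and greatly simplified (real HDFS derives its dead-node timeout from configurable intervals and picks replica targets with a placement policy); the 10-minute threshold is taken from the text.

```python
from dataclasses import dataclass, field

HEARTBEAT_TIMEOUT_S = 10 * 60  # the 10-minute threshold described in the text


@dataclass
class ToyMasterNode:
    """Hypothetical master node that tracks heartbeats and block replicas.

    A simplified model for illustration, not Hadoop's actual NameNode logic.
    """

    replication: int
    last_heartbeat: dict = field(default_factory=dict)   # node -> last heartbeat time (s)
    block_locations: dict = field(default_factory=dict)  # block id -> set of nodes

    def heartbeat(self, node, now):
        self.last_heartbeat[node] = now

    def dead_nodes(self, now):
        # A node is considered dead if it has been silent longer than the timeout.
        return {n for n, t in self.last_heartbeat.items() if now - t > HEARTBEAT_TIMEOUT_S}

    def rereplicate(self, now):
        dead = self.dead_nodes(now)
        live = sorted(set(self.last_heartbeat) - dead)
        for nodes in self.block_locations.values():
            nodes -= dead  # replicas on dead nodes are treated as lost (in-place update)
            if not nodes:
                continue  # no surviving replica to copy from
            for candidate in live:
                if len(nodes) >= self.replication:
                    break
                if candidate not in nodes:
                    nodes.add(candidate)  # copy the block to another live node
        return dead


master = ToyMasterNode(replication=2)
for node in ("dn1", "dn2", "dn3"):
    master.heartbeat(node, now=0)
master.block_locations["blk_1"] = {"dn1", "dn2"}
master.heartbeat("dn1", now=700)
master.heartbeat("dn3", now=700)
dead = master.rereplicate(now=700)  # dn2 has been silent for 700 s > 600 s
print(dead, sorted(master.block_locations["blk_1"]))  # {'dn2'} ['dn1', 'dn3']
```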

In Spark, fault tolerance is achieved using RDDs. A Resilient Distributed Dataset (RDD) is an immutable distributed collection of objects. Each RDD is divided into logical partitions, which may be computed on different cluster nodes. In case of a failure, Spark uses the RDD's lineage, the record of how the immutable dataset was created, to recompute the lost partitions. Spark tracks these workflows as Directed Acyclic Graphs (DAGs) and uses them to rebuild the data in the cluster.
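Lineage-based recovery can be illustrated with a toy model. `ToyRDD` is hypothetical and is not Spark's RDD class; it only shows the idea that a derived dataset remembers its parent and the transformation applied, so a lost partition can be recomputed from the source instead of being restored from a replica.

```python
class ToyRDD:
    """Hypothetical immutable dataset that remembers its lineage.

    A base dataset holds source partitions; a derived dataset only records its
    parent and the function applied, so a lost partition can be rebuilt by
    replaying those steps. Unlike real Spark, this toy keeps every partition it
    computes in memory.
    """

    def __init__(self, source=None, parent=None, fn=None):
        self.source = source    # list of partitions (base dataset only)
        self.parent = parent    # upstream ToyRDD (derived dataset only)
        self.fn = fn            # element-wise function applied to the parent
        self.materialized = {}  # partition index -> data currently held in memory

    def map(self, fn):
        # Transformations never modify self; they return a new dataset.
        return ToyRDD(parent=self, fn=fn)

    def num_partitions(self):
        if self.source is not None:
            return len(self.source)
        return self.parent.num_partitions()

    def compute(self, i):
        if i not in self.materialized:
            if self.source is not None:
                data = list(self.source[i])
            else:
                # Rebuild from the parent by replaying the recorded transformation.
                data = [self.fn(x) for x in self.parent.compute(i)]
            self.materialized[i] = data
        return self.materialized[i]

    def collect(self):
        return [x for i in range(self.num_partitions()) for x in self.compute(i)]

    def lose_partition(self, i):
        # Simulate a node failure that wipes one in-memory partition.
        self.materialized.pop(i, None)


base = ToyRDD(source=[[1, 2], [3, 4]])
doubled = base.map(lambda x: x * 2)
result = doubled.map(lambda x: x + 1)
before = result.collect()
doubled.lose_partition(1)
result.lose_partition(1)
after = result.collect()  # partition 1 is recomputed from base via the lineage
print(before, after)      # [3, 5, 7, 9] [3, 5, 7, 9]
```

Only the lost partition is rebuilt; partition 0 is still served from memory, which is why lineage-based recovery can be cheaper than keeping full replicas of intermediate data.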


Hadoop MapReduce vs. Spark - Security

Spark supports authentication for RPC channels using a shared secret. Event logging and web UIs in Spark can be secured using javax servlet filters. In addition, since Spark can use HDFS and run on YARN, it can use Kerberos for authentication, HDFS file permissions, and encryption between nodes. Hadoop MapReduce can use all of the security features and access control methods available in HDFS, which provide more fine-grained security. It can also integrate with other Hadoop tools, including Knox Gateway and Apache Sentry.

In terms of security features, Spark is less advanced than MapReduce. Another issue is that security in Spark is turned off by default, which can leave applications vulnerable to attack.

Apache Spark vs. Hadoop - Architecture

In Hadoop, every file written to HDFS (Hadoop Distributed File System) is divided into blocks based on a configured block size. Each block is replicated across several cluster nodes, and the replication factor determines the number of replicas. The NameNode keeps track of the cluster and decides which DataNodes store each block, and the blocks are then written to those DataNodes. MapReduce runs on top of HDFS. In classic MapReduce (Hadoop 1), a JobTracker accepts each submitted job and assigns its work to TaskTrackers on the other nodes. In Hadoop 2 and later, YARN (Yet Another Resource Negotiator) takes over this role: its ResourceManager allocates cluster resources, and a per-application ApplicationMaster requests resources for the tasks and monitors them.
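The block arithmetic described above can be sketched with a small helper. `hdfs_storage_plan` is hypothetical, and its defaults assume the common Hadoop 2+ settings of 128 MB blocks (`dfs.blocksize`) and a replication factor of 3 (`dfs.replication`); a given cluster may be configured differently.

```python
import math


def hdfs_storage_plan(file_size_mb, block_size_mb=128, replication=3):
    """Estimate how HDFS would split and replicate a single file.

    The defaults assume common Hadoop 2+ settings (128 MB blocks via
    dfs.blocksize, 3 replicas via dfs.replication); clusters may differ.
    """
    if file_size_mb <= 0:
        raise ValueError("file size must be positive")
    num_blocks = math.ceil(file_size_mb / block_size_mb)
    # The last block only holds the leftover data; HDFS does not pad it.
    last_block_mb = file_size_mb - (num_blocks - 1) * block_size_mb
    return {
        "blocks": num_blocks,
        "last_block_mb": last_block_mb,
        "block_replicas": num_blocks * replication,
        "raw_storage_mb": file_size_mb * replication,
    }


print(hdfs_storage_plan(1000))
# {'blocks': 8, 'last_block_mb': 104, 'block_replicas': 24, 'raw_storage_mb': 3000}
```

A 1000 MB file therefore occupies eight blocks (seven full ones plus a 104 MB remainder), stored as 24 block replicas and roughly 3 GB of raw disk space.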

In Spark, computations are carried out in memory, and intermediate results stay there only if the user explicitly chooses to cache or persist them.

Spark first reads data from a file in a file store (which can also be HDFS) through the SparkContext. From this data, Spark creates a structure that contains a collection of immutable elements which can be operated on in parallel. This structure is known as a Resilient Distributed Dataset, or RDD. As operations are applied to RDDs, Spark builds a Directed Acyclic Graph (DAG) that captures the order of the operations and their dependencies; the DAG is divided into stages, each made up of tasks. Two kinds of operations can be performed on RDDs: transformations, the intermediate steps that produce new RDDs, and actions, the final steps that produce a result. Transformations are only recorded in the DAG and evaluated lazily, so their results are not persisted. Actions trigger the execution of the DAG and return their results to the driver program or write them to external storage. Spark 1.3 introduced a higher-level abstraction called DataFrames, which Spark 2.0 unified with the Dataset API. DataFrames are similar to RDDs, but their data is organized into named columns, making them more user-friendly than RDDs. Spark SQL allows querying data in DataFrames.
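The split between lazy transformations and eager actions can be sketched with a toy pipeline. `LazyPipeline` is hypothetical and records only a linear chain of steps rather than a full DAG with stages, but it shows the key behavior: transformations just extend the plan, each action executes it, and without caching every action reruns the whole plan.

```python
class LazyPipeline:
    """Hypothetical sketch of lazy transformations and eager actions.

    Transformations only append a step to the plan; nothing runs until an
    action such as count() or collect() walks the plan over the data.
    """

    def __init__(self, data, steps=()):
        self._data = data
        self._steps = tuple(steps)  # the recorded plan (a linear chain here)
        self.executions = 0         # how many times an action ran the plan

    # Transformations: return a new pipeline and run nothing.
    def map(self, fn):
        return LazyPipeline(self._data, self._steps + (("map", fn),))

    def filter(self, pred):
        return LazyPipeline(self._data, self._steps + (("filter", pred),))

    # Actions: execute the recorded plan.
    def _run(self):
        self.executions += 1
        out = []
        for x in self._data:
            keep = True
            # Apply every step to one element before moving on (pipelining).
            for kind, fn in self._steps:
                if kind == "map":
                    x = fn(x)
                elif not fn(x):
                    keep = False
                    break
            if keep:
                out.append(x)
        return out

    def collect(self):
        return self._run()

    def count(self):
        return len(self._run())


evens_squared = LazyPipeline(range(10)).filter(lambda x: x % 2 == 0).map(lambda x: x * x)
runs_before = evens_squared.executions  # 0: defining the plan ran nothing
values = evens_squared.collect()
n = evens_squared.count()
print(runs_before, values, n, evens_squared.executions)  # 0 [0, 4, 16, 36, 64] 5 2
```

The second action rerunning the plan is the situation that `cache()` and `persist()` address in real Spark.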

Spark vs. Hadoop - Machine learning

Both Spark and Hadoop have libraries that can be used for machine learning.

Hadoop provides Mahout as a library for machine learning. Mahout supports classification, clustering, and batch-based collaborative filtering techniques, which all run on top of MapReduce. Mahout's MapReduce-based algorithms are being replaced by Samsara, a domain-specific language (DSL) written in Scala. Samsara provides a platform for in-memory and algebraic operations and allows users to write their own algorithms.

Spark provides MLlib as its library for machine learning. MLlib can run iterative machine learning applications in memory. The library is available in Java, Scala, Python, and R. It allows users to perform classification and regression and to build user-defined machine learning pipelines with hyperparameter tuning. Since Spark runs machine learning algorithms in memory, it is faster for several of them, including k-means and Naive Bayes.
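To show why in-memory execution helps iterative algorithms, here is a plain 1-D k-means (Lloyd's algorithm) in Python. It is not MLlib code (MLlib has its own distributed `KMeans` implementation); the point is that every iteration rescans the same data, which Spark can keep in memory while a chain of MapReduce jobs would reread it from disk.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Plain 1-D k-means (Lloyd's algorithm) over an in-memory list.

    Every iteration rescans the same `points` list; keeping it in memory is
    what lets iterative algorithms avoid re-reading input from disk.
    """
    centroids = list(centroids)
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            # Assign each point to its nearest centroid.
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        new = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
        if new == centroids:
            break  # converged: assignments no longer change
        centroids = new
    return centroids


print(kmeans_1d([1.0, 2.0, 3.0, 10.0, 11.0, 12.0], centroids=[0.0, 5.0]))  # [2.0, 11.0]
```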

Hadoop MapReduce vs. Apache Spark - Scalability

Hadoop rules in terms of scalability. It uses HDFS to deal with large amounts of data: when the volume of data grows rapidly, Hadoop scales out through the Hadoop Distributed File System (HDFS) to meet the demand. Spark, on the other hand, has no file system of its own and must rely on HDFS or another storage system to deal with massive amounts of data, which reduces its scalability.

Hadoop can also be scaled readily by adding nodes and disk storage, whereas Spark is harder to scale because it relies on RAM for computation.

Apache Hadoop is a popular open source big data framework, but its cousins, Spark and Storm, are even more popular. Storm is incredibly fast, processing more than a million records per second per node on a small cluster. Let us now understand the differences between the three - Hadoop, Spark, and Storm.

Hadoop vs. Spark vs. Storm

Hadoop is an open-source distributed processing framework that stores large data sets and runs distributed analytics tasks across clusters of computers. Many businesses choose Hadoop to store large datasets when dealing with budget and time constraints.

Spark is an open-source, data-parallel processing framework. Spark workflows follow the MapReduce model but run significantly more efficiently. A major advantage of Apache Spark is that it does not depend on Hadoop YARN to work: it can run in its own standalone mode, and it provides its own streaming API, which runs continuous batch processing over short intervals.

Storm is an open-source, task-parallel distributed computing system. Its workflows are defined as topologies, which are Directed Acyclic Graphs. Storm topologies keep running until they are stopped or the system breaks down entirely. Storm does not run on Hadoop clusters; instead, it coordinates its processes with ZooKeeper and its own master (Nimbus) and worker daemons.


Hadoop MapReduce vs. Spark Benefits: Advantages of Spark over Hadoop

- It has been found that Spark can run up to 100 times faster in memory and ten times faster on disk than Hadoop MapReduce. Spark can sort 100 TB of data 3 times faster than Hadoop MapReduce using ten times fewer machines.

- Spark has also been found to be faster for specific machine learning applications such as Naive Bayes and k-means.

- Spark generally offers better processing speed than Hadoop. This is because, unlike MapReduce, Spark does not have to deal with input-output overhead every time it runs a task, so it is much faster for many applications. In addition, Spark's DAG allows optimizations across steps. Since Hadoop MapReduce does not build a graph connecting the steps of a workflow, it cannot perform performance tuning at that level.

- Spark works better than Hadoop for iterative processing. Spark's RDDs allow multiple map operations to be carried out in memory, whereas MapReduce has to write intermediate results to disk.

- Due to its faster computational speed, Spark is better suited for real-time processing and immediate insights.

- Spark supports graph processing better than Hadoop, since it handles iterative computations well and has its own API for graph analytics, called GraphX.

- Spark works better for machine learning since it has a dedicated machine learning library, MLlib, whose built-in algorithms can run in memory. Users can also adjust the algorithms to meet their processing requirements.

Why is Spark considered “in memory” over Hadoop?

The term "in memory" indicates that Spark keeps data in random access memory (RAM) rather than on slower disk drives. Keeping data in memory makes distributed parallel processing of large amounts of data more efficient and can improve performance by orders of magnitude. The primary data abstraction in Spark is the RDD.

RDDs are cached using either the cache() or the persist() method. With cache(), RDDs are stored in memory using the default MEMORY_ONLY storage level: partitions that do not fit in memory are not cached and are recomputed when needed, while other storage levels, chosen through persist(), can spill the excess data to disk. This way, whenever an RDD is needed for a computation, it can be retrieved from memory without going to disk, which reduces the overhead of disk I/O operations.

The persist() method also stores RDDs in memory. The difference is that cache() always uses the default storage level, MEMORY_ONLY, whereas persist() lets you choose among several storage levels. The persist() method supports the following storage levels:

MEMORY_ONLY: RDDs are stored as deserialized Java objects in the JVM. If the RDD does not fit in memory, the partitions that do not fit are not cached and are recomputed each time they are needed.

MEMORY_AND_DISK: RDDs are stored as deserialized Java objects in the JVM. If the RDD does not fit in memory, the partitions that do not fit are stored on disk and read from there when needed.

MEMORY_ONLY_SER: RDDs are stored as serialized Java objects (one byte array per partition). This is similar to MEMORY_ONLY but more space-efficient.

MEMORY_AND_DISK_SER: RDDs are stored as serialized Java objects, and the partitions that do not fit in memory are stored on disk.

DISK_ONLY: The RDD partitions are stored only on disk.

MEMORY_ONLY_2: Similar to MEMORY_ONLY, but each partition is replicated on two cluster nodes.

MEMORY_AND_DISK_2: Similar to MEMORY_AND_DISK, but each partition is replicated on two cluster nodes.
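The storage levels above can be modeled as combinations of a few flags, similar to the flags Spark's `StorageLevel` is built from (disk, memory, off-heap, deserialized, replication; off-heap is omitted here). The `Level` class and `place_partitions` function are hypothetical and ignore serialized sizes and eviction; they only show what happens to partitions that do not fit in memory. In PySpark itself, the corresponding calls are `rdd.cache()` or `rdd.persist(StorageLevel.MEMORY_AND_DISK)` after `from pyspark import StorageLevel`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Level:
    """Simplified mirror of the flags behind Spark's storage levels (off-heap omitted)."""

    use_disk: bool
    use_memory: bool
    deserialized: bool
    replication: int = 1


LEVELS = {
    "MEMORY_ONLY": Level(False, True, True),
    "MEMORY_AND_DISK": Level(True, True, True),
    "MEMORY_ONLY_SER": Level(False, True, False),
    "MEMORY_AND_DISK_SER": Level(True, True, False),
    "DISK_ONLY": Level(True, False, False),
    "MEMORY_ONLY_2": Level(False, True, True, 2),
    "MEMORY_AND_DISK_2": Level(True, True, True, 2),
}


def place_partitions(sizes_mb, memory_mb, level):
    """Decide where each partition of a persisted RDD would live (toy model).

    Partitions are admitted to memory in order until the budget runs out; the
    rest spill to disk if the level allows it, otherwise they are recomputed.
    Serialization and replication are ignored here.
    """
    placement, used = [], 0
    for size in sizes_mb:
        if level.use_memory and used + size <= memory_mb:
            placement.append("memory")
            used += size
        elif level.use_disk:
            placement.append("disk")
        else:
            placement.append("recompute")
    return placement


sizes_mb = [40, 40, 40]
for name in ("MEMORY_ONLY", "MEMORY_AND_DISK", "DISK_ONLY"):
    print(name, place_partitions(sizes_mb, memory_mb=100, level=LEVELS[name]))
# MEMORY_ONLY ['memory', 'memory', 'recompute']
# MEMORY_AND_DISK ['memory', 'memory', 'disk']
# DISK_ONLY ['disk', 'disk', 'disk']
```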

Apache Hadoop allows users to handle the processing and analysis of large volumes of data at very low costs. However, Hadoop relies on persistent data storage on the disk and does not support in-memory storage like Spark. The in-memory feature of Spark makes it a better choice for low-latency applications and iterative jobs. Spark performs better than Hadoop when it comes to machine learning and graph algorithms. In-memory processing allows for faster data retrieval and is suitable for real-time risk management and fraud detection. Since the data is highly accessible, the computation speed of the system improves and allows it to better handle complex event processing.

Hadoop MapReduce vs. Apache Spark Comparison - The Bottom Line

- Hadoop MapReduce is meant for data that does not fit in memory. In contrast, Apache Spark performs better for data that fits in memory, particularly on dedicated clusters.

- Hadoop MapReduce can be an economical option because of Hadoop-as-a-Service (HaaS) offerings and the larger pool of available personnel. According to benchmarks, Apache Spark is more cost-effective, but staffing for Spark can be more expensive.

- Both Hadoop and Spark are fault tolerant, but Hadoop MapReduce is comparatively more fault tolerant than Spark.

- Spark and Hadoop MapReduce have similar data type and data source compatibility.

- Programming in Apache Spark is more accessible because it has an interactive mode, whereas Hadoop MapReduce requires core Java programming skills. However, several utilities make programming in Hadoop MapReduce easier.

Will Apache Spark Eliminate Hadoop MapReduce?

Many users criticized Hadoop MapReduce as a bottleneck in Hadoop clusters because MapReduce executes all jobs in batch mode, which makes real-time data analysis impossible. With the advent of Spark, which has proven to be a strong alternative to Hadoop MapReduce, the biggest question on the minds of data scientists and data engineers is: Hadoop vs. Spark, who wins the battle?

For streaming, Apache Spark executes jobs in very short micro-batches of approximately 5 seconds or less. Over time, Apache Spark has proven more stable than the real-time, stream-oriented frameworks in the Hadoop ecosystem.
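The micro-batch model can be illustrated with a toy sketch that buckets timestamped events into fixed intervals and runs an ordinary batch computation on each bucket. `micro_batches` and `process` are hypothetical helpers, and the 5-second interval is taken from the text; in Spark Streaming, the batch interval is a parameter you choose.

```python
from collections import defaultdict


def micro_batches(events, batch_interval_s=5.0):
    """Group timestamped events into fixed-length micro-batches (toy model).

    events: iterable of (timestamp_seconds, value) pairs.
    Returns {batch_start_time: [values...]} in time order.
    """
    batches = defaultdict(list)
    for ts, value in events:
        start = (ts // batch_interval_s) * batch_interval_s
        batches[start].append(value)
    return dict(sorted(batches.items()))


def process(batches):
    # Run an ordinary batch computation (here, a sum) on each micro-batch.
    return {start: sum(values) for start, values in batches.items()}


events = [(0.5, 1), (3.9, 2), (5.0, 3), (9.99, 4), (12.1, 5)]
print(process(micro_batches(events)))  # {0.0: 3, 5.0: 7, 10.0: 5}
```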

Nevertheless, every coin has two sides, and Spark has its drawbacks too, such as difficulty handling intermediate data that is larger than a node's memory, problems in case of node failure, and, most important of all, cost.

Spark uses journaling (also known as "recomputation") to provide resiliency if a node fails. As a result, recovery after a node failure is similar to that in Hadoop MapReduce, except that it is much faster.

Spark can also spill data to disk when a node has insufficient RAM to store its data partitions, which provides graceful degradation to disk-based processing. As for cost, with street RAM prices at about 5 USD per GB, roughly 1 TB of RAM costs about 5K USD, making memory a minor fraction of the overall node cost.
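The RAM cost estimate above works out as follows, assuming the quoted street price of 5 USD per GB and counting 1 TB as 1024 GB:

```latex
\[
1\,\text{TB} \approx 1024\,\text{GB}, \qquad
1024\,\text{GB} \times 5\,\tfrac{\text{USD}}{\text{GB}} = 5120\,\text{USD} \approx 5\text{K USD}
\]
```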

One major advantage of Hadoop MapReduce over Apache Spark appears when the data is larger than the available memory: in that case, Spark cannot leverage its cache and will likely be slower than MapReduce's batch processing.

Confused? Hadoop MapReduce vs. Apache Spark - Which One is Better?

If the question is whether to choose Hadoop MapReduce or Apache Spark, or, in other words, disk-based computing or RAM-based computing, the answer is straightforward: it all depends, and the variables this decision depends on change over time.

Nevertheless, current industry trends favor in-memory techniques like Apache Spark, as industry feedback has been positive. To conclude, the choice between Hadoop MapReduce and Apache Spark depends on the use case, and no single choice fits every situation.


FAQs on Hadoop MapReduce vs. Apache Spark

- When should you use Spark vs. Hadoop?

Both Hadoop and Spark process data in a distributed environment. Hadoop is ideal for linear data processing and batch processing, while Spark is best suited for projects that require live unstructured data streams and real-time data processing.

- Is Spark faster than Hadoop?

In most cases, yes. Spark reduces the number of disk read/write cycles by storing intermediate data in memory, resulting in faster processing. For in-memory workloads, Spark has been reported to process data up to 100 times faster than Hadoop MapReduce.

- Is Spark better than Hadoop?

Hadoop and Spark are both good frameworks for processing big data, but choosing one over the other depends on various aspects. For instance, Spark can be up to 100 times faster than Hadoop, while Hadoop is generally less expensive than Spark.

- Has Spark replaced Hadoop?

Many professionals prefer Apache Spark over Hadoop MapReduce because of the advantages Spark offers. Spark uses RDDs to access stored data more quickly, which adds value to a Hadoop cluster by reducing lag time and improving performance.

About the Author

ProjectPro

ProjectPro is the only online platform designed to help professionals gain practical, hands-on experience in big data, data engineering, data science, and machine learning related technologies. With over 270 reusable project templates in data science and big data with step-by-step walkthroughs,