Data Engineer's Guide to Apache Spark Architecture

Spark Architecture Overview: Understand the master/slave Spark architecture through a detailed explanation of the Spark components shown in the Spark architecture diagram.

"Spark is beautiful. With Hadoop, it would take us six to seven months to develop a machine learning model. Now, we can do about four models a day," said Rajiv Bhat, senior vice president of data sciences and marketplace at InMobi.

Apache Spark is considered a powerful complement to Hadoop, big data's original technology of choice. Spark is a more accessible, powerful, and capable tool for tackling a wide range of big data challenges. With more than 500 contributors from over 200 organizations and a user base of more than 225,000 members, Apache Spark has become one of the most widely used and in-demand big data frameworks across major industries.

What are the Top Features of Apache Spark?

E-commerce companies like Alibaba, social networking companies like Tencent, and the Chinese search engine Baidu all run Apache Spark at scale. Here are a few features responsible for its popularity.

1) Fast Processing Speed

Speed has been Apache Spark's USP since its inception. The first and foremost advantage of using Apache Spark for big data is speed: it can run workloads up to 100x faster than Hadoop MapReduce in memory and up to 10x faster on disk. Spark also set a world record for on-disk sorting (the 2014 Daytona GraySort benchmark), showing that it stays fast even when large volumes of data are stored on disk.

2) Polyglot - Supports a Variety of Programming Languages

Spark applications can be written in Scala, Java, Python, and R (as well as SQL), and community-maintained bindings exist for other languages such as Clojure. With high-level APIs for each of these languages and interactive shells for Scala, Python, and R, developers can work in the language they prefer.

3) Powerful Libraries

Spark offers more than just map and reduce functions. It ships with libraries for SQL and DataFrames, MLlib (for machine learning), GraphX (for graph processing), and Spark Streaming, which together provide powerful tools for data analytics.

4) Real-Time Processing

Spark can run MapReduce-style batch jobs on data stored in Hadoop, and its Spark Streaming module can process data in near real time.

5) Deployment and Compatibility

Spark can run on Hadoop YARN, Apache Mesos, Kubernetes, in standalone mode, or in the cloud. It can access diverse data sources, including HDFS, Apache Cassandra, Apache HBase, Apache Hive, and Amazon S3.

6) Caching - Speeding Up Big Data Applications

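The effectiveness of a big data application depends largely on its performance. Caching in Apache Spark can improve the performance of big data applications by 10x to 100x, because datasets that are reused across computations are persisted in memory (optionally spilling to disk) instead of being recomputed or re-read from storage.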
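To make the caching claim concrete, here is a minimal pure-Python sketch (not Spark code; the `ToyDataset` class and `expensive_load` function are hypothetical) of why caching helps: without a cache, every action recomputes the dataset from its source, while a cached dataset is computed once and reused. In real Spark the equivalent calls are `cache()` or `persist()` on an RDD or DataFrame, and the actual speedup depends on the workload.

```python
class ToyDataset:
    """Hypothetical stand-in for a Spark dataset: the data is rebuilt from its
    source function on every action unless the dataset has been cached."""

    def __init__(self, compute):
        self._compute = compute   # function that (re)builds the data
        self._cached = None       # materialized data, once cached
        self._use_cache = False
        self.compute_calls = 0    # how many times the data was rebuilt

    def cache(self):
        # Like Spark, caching is lazy: this only marks the dataset for reuse.
        self._use_cache = True
        return self

    def _materialize(self):
        if self._use_cache and self._cached is not None:
            return self._cached
        self.compute_calls += 1
        data = self._compute()
        if self._use_cache:
            self._cached = data
        return data

    # Two "actions" that each need the full data.
    def count(self):
        return len(self._materialize())

    def total(self):
        return sum(self._materialize())


def expensive_load():
    # Stands in for reading and parsing a large input.
    return [x * x for x in range(1_000)]


uncached = ToyDataset(expensive_load)
uncached.count()
uncached.total()
print(uncached.compute_calls)  # 2: recomputed for each action

cached = ToyDataset(expensive_load).cache()
cached.count()
cached.total()
print(cached.compute_calls)    # 1: the second action reuses the cached data
```

As with Spark's `cache()`, nothing is stored until the first action runs; only later actions benefit.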

Now that you are aware of its key features, let us explore Spark's architecture to see what makes it so special. This article is a single-stop resource that gives an overview of Spark architecture with the help of a Spark architecture diagram, and it is a good resource for beginners who want to learn Spark.

Apache Spark Architecture Overview

Apache Spark's architecture is based on a distributed computing model: a driver program coordinates the work, a cluster manager allocates resources, and executors on worker nodes process data read from storage such as a distributed file system. It enables efficient processing of large-scale data sets through in-memory computation, fault tolerance, and parallel data processing across multiple nodes.

Apache Spark has a well-defined, layered architecture in which all Spark components and layers are loosely coupled and integrated with various extensions and libraries. However, before delving into how Spark works, it is important to understand the Apache Spark ecosystem and the key components of the Spark run-time architecture.

Apache Spark Explained - Components

Apache Spark has various components that make it a powerful big data processing framework. The main components include Spark Core, Spark SQL, Spark Streaming, Spark MLlib, and Spark GraphX. These components provide different functionalities for data processing, analysis, and machine learning tasks.

Let us look at each component below:

Spark Core

Spark Core is the foundational module of the Apache Spark project for general-purpose, distributed data processing. It is also home to Spark's main programming abstraction, the RDD (Resilient Distributed Dataset). I/O operations, task scheduling, fault tolerance, and in-memory computation are some of the core functionalities of the Spark Core module.

Spark SQL

With DataFrames as its main programming abstraction, Spark SQL acts as a distributed SQL engine for querying data, with queries reported to run up to 100x faster on existing deployments and data. Spark SQL supports ETL operations on data in various formats, including JSON and Parquet, as well as ad-hoc querying.

Spark Streaming

An extension of the Spark Core API that lets data engineers process real-time data from sources such as Amazon Kinesis, Apache Kafka, and Apache Flume. Optimized resource usage, fast recovery from failures, and better load balancing are some of its key features.

GraphX

Spark's GraphX package unifies ETL, exploratory analysis, and iterative graph computation in a single system: it can view the same data as both graphs and collections, and it can transform and join graphs with RDDs efficiently. In published PageRank benchmarks, GraphX was faster than Apache Giraph and slightly slower than GraphLab. GraphX also comes with a rich library of graph algorithms such as PageRank, Connected Components, Triangle Counting, SVD++, and Label Propagation.

SparkR

The R front end for Apache Spark comprises two important components:

i. R-JVM bridge: an R-to-JVM binding on the Spark driver that makes it easy for R programs to submit jobs to a Spark cluster.

ii. Support for running R code on Spark executors, including distributed machine learning through Spark MLlib.

SparkR provides distributed dataframe implementation with operations like aggregation, filtering, and selection.

Spark MLlib

Spark MLlib is a powerful machine learning library that is built on Apache Spark, an open-source distributed computing framework. MLlib provides a wide range of algorithms and tools for performing various machine learning tasks, making it a popular choice for large-scale data processing and analysis.

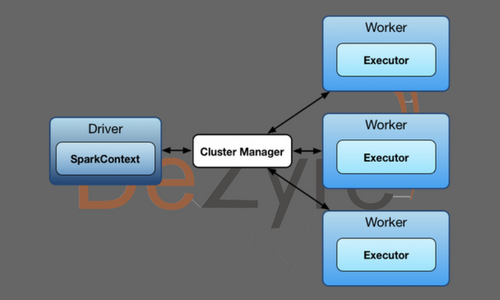

Components of Apache Spark Run-Time Architecture

The three high-level components of a Spark application's run-time architecture are:

- Spark Driver

- Cluster Manager

- Executors

Role of Driver in Spark Architecture

Spark Driver – Master Node of a Spark Application

The driver is the central coordinator of a Spark application and the entry point of the Spark shells (Scala, Python, and R). The driver program runs the application's main() function; it is where the SparkContext is created, where RDDs are defined, and where transformations and actions are declared. The driver contains several components (DAGScheduler, TaskScheduler, SchedulerBackend, and BlockManager) that translate Spark user code into actual Spark jobs executed on the cluster.

Spark Driver performs two main tasks: Converting user programs into tasks and planning the execution of tasks by executors. A detailed description of its tasks is as follows:

- The driver program schedules job execution and negotiates with the cluster manager for resources. In client mode it runs on the machine that submitted the application; in cluster mode it runs on a node inside the cluster.

- It translates RDD operations into an execution graph and splits the graph into multiple stages.

- The driver stores metadata about all Resilient Distributed Datasets and their partitions.

- Cockpit of job and task execution: the driver program converts a user application into smaller execution units known as tasks. Tasks are then executed by the executors, i.e., the worker processes that run individual tasks.

- As tasks complete, the executors report back to the driver, and the results of actions (for example, collect()) are returned to it.

- The driver exposes information about the running Spark application through a web UI, on port 4040 by default.

Role of Executor in Spark Architecture

An executor is a distributed agent responsible for executing tasks. Every Spark application has its own executor processes. Executors usually run for the entire lifetime of a Spark application; this is known as static allocation of executors. However, users can also opt for dynamic allocation, in which Spark adds or removes executors at run time to match the overall workload.

- Executors perform all the data processing and return the results to the driver.

- They read data from and write data to external sources.

- They store computation results in memory (cache) or on disk.

- They interact with the storage systems.

- They provide in-memory storage for RDDs that are cached by user programs, via a utility called the Block Manager that resides within each executor. Because cached RDDs live directly inside the executors, tasks can run in parallel right next to the cached data.
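Dynamic allocation is switched on through configuration rather than application code. The sketch below assembles the `--conf` arguments for `spark-submit` from real Spark properties (`spark.dynamicAllocation.enabled`, `spark.dynamicAllocation.minExecutors`, `spark.dynamicAllocation.maxExecutors`, and either `spark.dynamicAllocation.shuffleTracking.enabled` on Spark 3.0+ or `spark.shuffle.service.enabled` when an external shuffle service is running). The helper function and the executor counts in the example are hypothetical.

```python
def dynamic_allocation_conf(min_executors, max_executors, shuffle_tracking=True):
    """Return spark-submit --conf arguments that enable dynamic allocation.

    Hypothetical helper; the property names are real Spark settings."""
    if not 0 <= min_executors <= max_executors:
        raise ValueError("need 0 <= min_executors <= max_executors")
    props = {
        "spark.dynamicAllocation.enabled": "true",
        "spark.dynamicAllocation.minExecutors": str(min_executors),
        "spark.dynamicAllocation.maxExecutors": str(max_executors),
    }
    if shuffle_tracking:
        # Enables dynamic allocation without an external shuffle service (Spark 3.0+).
        props["spark.dynamicAllocation.shuffleTracking.enabled"] = "true"
    else:
        # Assumes an external shuffle service is running on every worker node.
        props["spark.shuffle.service.enabled"] = "true"
    args = []
    for key, value in props.items():
        args += ["--conf", f"{key}={value}"]
    return args


print(" ".join(dynamic_allocation_conf(2, 20)))
```

The minimum and maximum bound how far Spark may scale the executor count down when the cluster is idle and up when tasks are queued.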

Role of Cluster Manager in Spark Architecture

The cluster manager is an external service responsible for acquiring resources on the Spark cluster and allocating them to Spark jobs. A Spark application can use one of several cluster managers to allocate and release physical resources such as CPU cores and memory: Hadoop YARN, Apache Mesos, Kubernetes, or Spark's simple standalone cluster manager. Any of them can run on-premises or in the cloud.

- Standalone Cluster Manager

The Standalone cluster manager provides a simple way to run applications on a cluster. It consists of one master and several workers, each with a configured amount of memory and number of CPU cores. When submitting an application, you can decide how much memory each executor will use and the total number of cores across all executors. The Standalone cluster manager can be started either by launching a master and workers manually or by using the launch scripts in Spark's sbin directory.

The Standalone cluster manager offers two deploy modes, which determine where the driver program of an application runs:

- Client Mode (Default Mode): the driver is launched on the machine where the spark-submit command was executed.

- Cluster Mode: the driver runs inside the Standalone cluster as a separate process on one of the worker nodes, and then connects back to the master to request executors.

One important point to note about the Standalone cluster manager is that it spreads out each application over the maximum number of executors by default.
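As a sketch of how the Standalone options above map onto `spark-submit`, the hypothetical helper below builds a command line using real flags: `--master spark://HOST:7077` (7077 is the default master port), `--deploy-mode`, `--executor-memory`, and `--total-executor-cores`. It assumes the cluster was started with `sbin/start-master.sh` on the master and `sbin/start-worker.sh spark://HOST:7077` on each worker (older releases name the worker script `start-slave.sh`). The host and file names are placeholders.

```python
def standalone_submit_cmd(master_host, app, deploy_mode="client",
                          executor_memory="2g", total_cores=None, main_class=None):
    """Assemble a spark-submit command line for a Standalone cluster.

    Hypothetical helper; every flag used here is a real spark-submit option."""
    if deploy_mode not in ("client", "cluster"):
        raise ValueError("deploy_mode must be 'client' or 'cluster'")
    if deploy_mode == "cluster" and app.endswith(".py"):
        # Standalone mode does not support cluster mode for Python applications.
        raise ValueError("use client mode for Python apps on a Standalone cluster")
    cmd = [
        "spark-submit",
        "--master", f"spark://{master_host}:7077",  # 7077 is the default master port
        "--deploy-mode", deploy_mode,                # where the driver runs
        "--executor-memory", executor_memory,        # memory per executor
    ]
    if total_cores is not None:
        # Upper bound on cores used by all of the application's executors together.
        cmd += ["--total-executor-cores", str(total_cores)]
    if main_class is not None:
        cmd += ["--class", main_class]               # entry point for JVM applications
    cmd.append(app)
    return cmd


# Hypothetical master host and application file.
print(" ".join(standalone_submit_cmd("master.example.com", "etl_job.py",
                                     total_cores=8)))
```

The helper rejects cluster mode for `.py` files because, per the Spark documentation, the Standalone manager does not support cluster mode for Python applications; JVM applications packaged as JARs can use either mode.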

- Hadoop YARN

YARN is another cluster manager option for Spark. Introduced in Hadoop 2.0, it lets multiple data processing frameworks share a distributed resource pool. It is typically installed on the same nodes as the Hadoop Distributed File System (HDFS), which lets Spark read HDFS data quickly on the nodes where that data is stored.

Running Spark on YARN is straightforward: set the HADOOP_CONF_DIR (or YARN_CONF_DIR) environment variable to point to your Hadoop configuration directory, and then submit jobs with spark-submit using yarn as the master URL.
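The YARN setup described above can be sketched as follows. The hypothetical `yarn_submit` helper only prepares the environment and argument list (it does not launch anything); `HADOOP_CONF_DIR`, `--master yarn`, `--deploy-mode`, and `--num-executors` are real Spark settings, while the paths and file names are placeholders.

```python
import os


def yarn_submit(app, hadoop_conf_dir, deploy_mode="cluster", num_executors=None):
    """Return (env, argv) for submitting an application to YARN.

    Hypothetical helper; HADOOP_CONF_DIR and the flags are real Spark settings."""
    if deploy_mode not in ("client", "cluster"):
        raise ValueError("deploy_mode must be 'client' or 'cluster'")
    # spark-submit finds the YARN ResourceManager through the Hadoop config files.
    env = dict(os.environ, HADOOP_CONF_DIR=hadoop_conf_dir)
    argv = ["spark-submit", "--master", "yarn", "--deploy-mode", deploy_mode]
    if num_executors is not None:
        # Number of executors to launch (a YARN option).
        argv += ["--num-executors", str(num_executors)]
    argv.append(app)
    return env, argv


# Hypothetical configuration directory and application file.
env, argv = yarn_submit("etl_job.py", "/etc/hadoop/conf", num_executors=4)
print(" ".join(argv))
```

To actually launch the job, the returned values could be passed to `subprocess.run(argv, env=env, check=True)` on a machine where `spark-submit` is on the `PATH`.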

As with the Standalone cluster manager, YARN offers two modes for running an application: client mode and cluster mode. In client mode, the driver program runs on the machine from which you submitted the application (e.g., your laptop). In cluster mode, the driver runs inside a YARN container (within the YARN application master) on one of the cluster's nodes.

- Apache Mesos

Mesos is a general-purpose cluster manager that can run both analytics workloads and long-running services (e.g., web applications or key/value stores) on a cluster. Early Spark releases supported Mesos only in client mode; since Spark 1.4, cluster mode is also available through the MesosClusterDispatcher. Note that Mesos support has been deprecated since Spark 3.2.

- Kubernetes

Kubernetes is a relatively recent addition to the list of cluster managers: support was introduced in Spark 2.3 in 2018, and it was declared generally available with the release of Spark 3.1 in March 2021. It has been gaining popularity since its launch because it runs Spark applications in containers (for example, Docker images). As with YARN and the Standalone cluster manager, Spark applications can run on Kubernetes in both client and cluster mode.
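A minimal sketch of a cluster-mode submission to Kubernetes, modelled on the example in the Spark on Kubernetes documentation: the master URL has the form `k8s://https://<api-server-host>:<port>`, and `spark.kubernetes.container.image` names the image used for the driver and executor pods. The helper function, API server address, image name, and application path are hypothetical.

```python
def k8s_submit_cmd(api_server, image, app, name, executors=2, main_class=None):
    """Assemble a cluster-mode spark-submit command for Kubernetes.

    Hypothetical helper; the flags and properties follow the Spark on
    Kubernetes documentation."""
    if executors < 1:
        raise ValueError("executors must be at least 1")
    cmd = [
        "spark-submit",
        "--master", f"k8s://{api_server}",          # e.g. k8s://https://host:6443
        "--deploy-mode", "cluster",                 # the driver runs in its own pod
        "--name", name,                             # application name
        "--conf", f"spark.executor.instances={executors}",
        "--conf", f"spark.kubernetes.container.image={image}",
    ]
    if main_class is not None:
        cmd += ["--class", main_class]              # needed for JVM applications
    # local:// tells Spark the file is already inside the container image.
    cmd.append(app)
    return cmd


# Hypothetical API server, image, and application path.
print(" ".join(k8s_submit_cmd("https://10.0.0.1:6443", "example/spark-py:latest",
                              "local:///opt/app/etl_job.py", name="etl-job")))
```

Packaging the application inside the image (the `local://` path) avoids having to upload files from the submitting machine.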

Which Cluster Manager should we use and when?

Choosing a cluster manager for a Spark application depends on the application's goals, because each cluster manager provides a different set of scheduling capabilities.

Let us now discuss a few points that will help you in deciding which cluster manager you should use.

- To get started with Apache Spark, the standalone cluster manager is the easiest one to use when developing a new Spark application. It is also a good choice if you are only running Spark, as it offers most of the features that other cluster managers provide.

- If you want to run other applications alongside Spark, or need richer resource scheduling capabilities (e.g., queues), both YARN and Mesos are good options. YARN is often the preferred choice because it comes preinstalled in many Hadoop distributions.

- In some cases, Mesos has an advantage over the other two cluster managers because of its fine-grained sharing option, which lets interactive applications such as the Spark shell scale down their CPU allocation between commands. This makes Mesos appealing when several users are running interactive shells.

- In most cases, it is important to run Spark on the same nodes as HDFS for fast access to storage. You can install Mesos or the Standalone cluster manager on the same nodes manually, while most Hadoop distributions come with YARN and HDFS preinstalled together.

Apache Spark Architecture is based on two main abstractions:

- Resilient Distributed Datasets (RDD)

- Directed Acyclic Graph (DAG)

Resilient Distributed Datasets (RDD)

RDDs are collections of data items that are split into partitions and can be stored in memory on the worker nodes of a Spark cluster. Apache Spark supports two types of RDDs: Hadoop datasets, which are created from files stored on HDFS, and parallelized collections, which are based on existing Scala collections. Spark RDDs support two types of operations: transformations and actions. RDDs are immutable, so a transformation never modifies an existing RDD; instead, it reads an RDD and produces a new one. Transformations are also lazy: they are only recorded, not executed. An action, on the other hand, evaluates the recorded transformations and produces a value. When an action is applied to an RDD, all the pending processing is carried out at that moment and the resulting value is returned.
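The transformation/action distinction can be illustrated with a small, single-machine model. `ToyRDD` below is hypothetical and far simpler than Spark's implementation, but it shows the properties described above: data is split into partitions, transformations return a new object and only record work (immutability and laziness), and actions such as `collect()` and `count()` trigger the computation. In PySpark the equivalent pipeline would be `sc.parallelize(range(10), 3).filter(...).map(...).collect()`.

```python
class ToyRDD:
    """Hypothetical single-machine model of an RDD: a list of partitions plus
    a lineage of pending transformations. Not Spark's implementation."""

    def __init__(self, partitions, ops=()):
        self._partitions = partitions  # list of lists; never mutated
        self._ops = tuple(ops)         # recorded (lazy) transformations

    @classmethod
    def parallelize(cls, items, num_partitions=2):
        # Slice a local collection into exactly num_partitions contiguous chunks.
        if num_partitions < 1:
            raise ValueError("num_partitions must be at least 1")
        items = list(items)
        n = len(items)
        return cls([items[i * n // num_partitions:(i + 1) * n // num_partitions]
                    for i in range(num_partitions)])

    # Transformations: return a NEW ToyRDD with one more recorded step.
    def map(self, f):
        return ToyRDD(self._partitions, self._ops + (("map", f),))

    def filter(self, pred):
        return ToyRDD(self._partitions, self._ops + (("filter", pred),))

    # Actions: run the recorded steps partition by partition, then combine.
    def _compute_partition(self, part):
        for kind, fn in self._ops:
            if kind == "map":
                part = [fn(x) for x in part]
            else:
                part = [x for x in part if fn(x)]
        return part

    def collect(self):
        return [x for part in self._partitions for x in self._compute_partition(part)]

    def count(self):
        return sum(len(self._compute_partition(p)) for p in self._partitions)


nums = ToyRDD.parallelize(range(10), num_partitions=3)
evens_squared = nums.filter(lambda x: x % 2 == 0).map(lambda x: x * x)  # nothing runs yet
print(evens_squared.collect())  # [0, 4, 16, 36, 64]
print(nums.count())             # 10: the parent RDD is unchanged
```

Because each partition is processed independently inside the actions, the same recorded steps could run on different machines in parallel, which is what Spark's executors do.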

Directed Acyclic Graph (DAG)

Directed - A transformation moves data partitions from one state (A) to a new state (B).

Acyclic - A transformation cannot lead back to an earlier partition state.

A DAG is the sequence of computations performed on the data: each vertex is an RDD and each edge is an operation (transformation) applied to it. The DAG abstraction helps eliminate Hadoop MapReduce's multi-stage execution model and provides performance improvements over Hadoop.
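The lineage of an RDD forms exactly such a graph. The sketch below is an illustrative assumption, not Spark's scheduler: it encodes a hypothetical word-count lineage as a mapping from each RDD to its parents and uses Python's standard `graphlib` module (Python 3.9+) to show the two properties named above. A valid execution order always visits parents before children (directed), and a dependency loop is rejected (acyclic).

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical lineage for a word-count job: each key is an RDD, and its value
# is the set of parent RDDs it is derived from (the edges are operations).
lineage = {
    "lines":  set(),         # textFile(...)
    "words":  {"lines"},     # flatMap(split)
    "pairs":  {"words"},     # map(word -> (word, 1))
    "counts": {"pairs"},     # reduceByKey(+), which needs a shuffle
    "top":    {"counts"},    # filter(count > 10)
}

# A valid execution order visits every parent before its children.
order = list(TopologicalSorter(lineage).static_order())
print(order)  # ['lines', 'words', 'pairs', 'counts', 'top']

# "Acyclic": a graph in which an RDD depends on its own descendant is rejected.
bad = dict(lineage, lines={"top"})
try:
    list(TopologicalSorter(bad).static_order())
except CycleError:
    print("cycle detected: not a valid DAG")
```

Real lineages are usually wider than this chain (joins have two parents, for example), but the same ordering rule applies.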

How does Spark Work?

Apache Spark follows a master/slave architecture with two main daemons and a cluster manager –

- Master Daemon (Master/Driver Process)

- Worker Daemon (Slave Process)

Apache Spark Architecture Diagram – Overview of Apache Spark Cluster

A Spark cluster has a single master and any number of slaves/workers. The driver and the executors run in their own Java processes. Users can run them on the same machines (a horizontal Spark cluster), on separate machines (a vertical Spark cluster), or in a mixed configuration.

On classic Hadoop platforms, handling complex jobs requires developers to chain together a series of MapReduce jobs and run them sequentially. Each job has high latency, and the output of each step must be written to HDFS before the next step can start. DAGs and RDDs avoid this: they replace disk I/O with in-memory operations and allow data to be shared in memory across DAGs, so different jobs can work on the same data and complex workflows become practical.

Apache Spark Applications Examples at Various Companies

Spark at NC Eateries

In most of the United States, local health departments inspect restaurants and assign each one a grade to help protect the public from food-borne illnesses. A higher grade does not mean better food; the grade reflects criteria such as how clean the kitchen is and how safely food is stored.

Unfortunately, in North Carolina there is no single place to look up every restaurant's grade at once. NCEatery.com aims to fill this gap by centralizing the information and running predictive analytics on it to find patterns in restaurant quality. It uses Apache Spark in the backend to ingest restaurant data from different counties, crunch the data, perform the necessary analysis, and publish a summary on its website. It uses Spark to process 1.6 × 10²¹ data points and publishes about 2,500 pages every 18 hours using a small cluster.

Spark at Lumeris

Lumeris is an information-based healthcare services company based in St. Louis, Missouri. It has historically helped healthcare providers draw more relevant conclusions from their data. The company's state-of-the-art IT system needed a boost to take on more customers and draw more significant insights from its data. At Lumeris, as part of the data engineering process, Apache Spark ingests thousands of comma-separated values (CSV) files stored on Amazon Simple Storage Service (S3), builds healthcare-compliant HL7 FHIR resources, and stores them in a specialized document store where they can be consumed by both existing applications and a new generation of client applications. This technology stack supports Lumeris's continued growth, both in processed data and in applications, and with its help Lumeris aims to save lives in the future.

Spark analyzes equipment logs for CERN

CERN, the European Organization for Nuclear Research, is a world-renowned particle physics laboratory where the Higgs boson, often called the "God particle", was discovered. It operates the world's largest particle accelerator, the Large Hadron Collider (LHC): a 27-kilometre ring located about 100 meters underground beneath the border between France and Switzerland, near Geneva. In the LHC, two beams of particles travelling in opposite directions are made to collide, and the results of the collisions are recorded by four giant detectors placed around the ring.

These collisions produce about 1 petabyte (PB) of data per second. After filtering, the data volume is reduced to 900 GB per day. After evaluating Oracle, Impala, and Spark, the CERN team designed the Next CERN Accelerator Logging Service (NXCALS) around Spark, on an on-premises cloud running OpenStack with up to 250,000 cores. The beneficiaries of this architecture are scientists (through custom applications and Jupyter notebooks), developers, and applications. CERN aims to collect even more data and to further increase the overall speed of data processing.

Understanding the Spark Application Architecture

What happens when a Spark Job is submitted?

When a client submits Spark application code, the driver implicitly converts the code containing transformations and actions into a logical directed acyclic graph (DAG). At this stage, the driver also performs optimizations such as pipelining transformations, and then converts the logical DAG into a physical execution plan made up of stages. After creating the physical execution plan, it creates small physical execution units, called tasks, within each stage. The tasks are then bundled and sent to the Spark cluster.
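The step from logical DAG to stages and tasks can be sketched for the simplest case, a linear chain of operations. In this hypothetical model, narrow operations (such as `map` or `filter`) are pipelined into the current stage, a wide operation that needs a shuffle (such as `reduceByKey`) starts a new stage, and each stage gets one task per partition. Real Spark plans over a full DAG with multiple parents, so treat this as an illustration only.

```python
# Hypothetical operation list for one job: (name, is_wide). A wide operation
# such as reduceByKey needs a shuffle, so a new stage starts there.
job = [
    ("textFile", False),
    ("flatMap", False),
    ("map", False),
    ("reduceByKey", True),
    ("filter", False),
]


def split_into_stages(ops):
    """Group a linear chain of operations into stages at shuffle boundaries.

    Narrow operations are pipelined into the current stage; each wide
    operation closes the current stage and opens a new one."""
    stages = [[]]
    for name, is_wide in ops:
        if is_wide and stages[-1]:
            stages.append([])
        stages[-1].append(name)
    return stages


def plan_tasks(stages, partitions_per_stage):
    """One task per partition per stage (a simplification of Spark's planner)."""
    if len(partitions_per_stage) != len(stages):
        raise ValueError("need one partition count per stage")
    return [
        [f"stage{s}-task{p}" for p in range(n)]
        for s, n in enumerate(partitions_per_stage)
    ]


stages = split_into_stages(job)
print(stages)  # [['textFile', 'flatMap', 'map'], ['reduceByKey', 'filter']]
tasks = plan_tasks(stages, partitions_per_stage=[4, 2])
print(sum(len(t) for t in tasks))  # 6
```

Pipelining the narrow operations means each record flows through `textFile`, `flatMap`, and `map` in one pass inside a single task, with no intermediate dataset written out between them.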

The driver program then talks to the cluster manager and negotiates for resources. The cluster manager launches executors on the worker nodes on behalf of the driver. Before the executors begin execution, they register with the driver program so that the driver has a holistic view of all executors. The driver then sends tasks to the executors based on data placement, and the executors start running them. While the Spark application is running, the driver program monitors the set of running executors. It also schedules future tasks based on data placement by tracking the location of cached data. When the driver program's main() method exits, or when it calls the stop() method of the SparkContext, it terminates all the executors and releases their resources from the cluster manager.
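Scheduling "based on data placement" can be illustrated with a simplified model. The hypothetical `assign_tasks` function below sends each task to the executor that already caches its partition and falls back to round-robin otherwise. Spark's real scheduler is more nuanced (it has several locality levels and waits a configurable time, `spark.locality.wait`, for a local slot before falling back), so this is only a sketch of the idea.

```python
def assign_tasks(task_partitions, cache_locations, executors):
    """Assign each task to an executor, preferring one that caches its partition.

    task_partitions: partition ids, one task per partition.
    cache_locations: dict mapping partition id -> executor id holding it in memory.
    executors: executor ids registered with the driver.
    Hypothetical model; falls back to round-robin when no registered executor
    holds the data."""
    if not executors:
        raise ValueError("no executors registered")
    assignment = {}
    fallback = 0
    for part in task_partitions:
        preferred = cache_locations.get(part)
        if preferred in executors:
            assignment[part] = preferred   # data is already cached on this executor
        else:
            assignment[part] = executors[fallback % len(executors)]
            fallback += 1                  # no cached copy: spread the work
    return assignment


executors = ["exec-1", "exec-2"]     # hypothetical executor ids
cached = {0: "exec-2", 1: "exec-1"}  # partitions 0 and 1 are cached
print(assign_tasks([0, 1, 2, 3], cached, executors))
# {0: 'exec-2', 1: 'exec-1', 2: 'exec-1', 3: 'exec-2'}
```

Running a task where its partition is already cached avoids moving that data over the network, which is why the driver tracks cache locations.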

At a high level, a Spark program is structured as follows: RDDs are created from the input data, new RDDs are derived from existing ones using transformations, and then an action is performed on the data. Spark builds the DAG of operations implicitly in every program, and when the driver runs, this DAG is converted into a physical execution plan.

Launching a Spark Program

spark-submit is the single script used to submit a Spark program and launch the application on the cluster. Its options let it connect to different cluster managers and control how many resources the application gets. For some cluster managers, spark-submit can run the driver inside the cluster (for example, on a YARN worker node in cluster mode), while for others it runs the driver only on the local machine.

About the Author

ProjectPro

ProjectPro is the only online platform designed to help professionals gain practical, hands-on experience in big data, data engineering, data science, and machine learning related technologies. Having over 270+ reusable project templates in data science and big data with step-by-step walkthroughs,