Top 50 Hadoop Interview Questions for 2024

This article lists the top 50 Hadoop interview questions for 2024. The list includes questions for freshers as well as for experienced professionals.

With the help of our best-in-class Hadoop faculty, we have gathered the top Hadoop developer interview questions to help you get through your next Hadoop job interview.


IT organizations from various domains are investing in big data technologies, which increases the demand for technically competent Hadoop developers. To build a career as a Hadoop developer, you must be clear about Hadoop concepts and have a working knowledge of analysing data with MapReduce, Hive and Pig. Typical Hadoop interview questions cover topics such as the replication factor, node failures and distributed caching. If you are looking for frequently asked Hadoop interview questions, you are in the right place. We have put together this list of the top 50 Hadoop interview questions with the help of ProjectPro’s Hadoop faculty to help you get through your first Hadoop interview. The questions most likely to be asked in Hadoop interviews are covered in this list. If you would like to read more questions, check out our blog on Top 100 Hadoop Interview Questions and Answers.


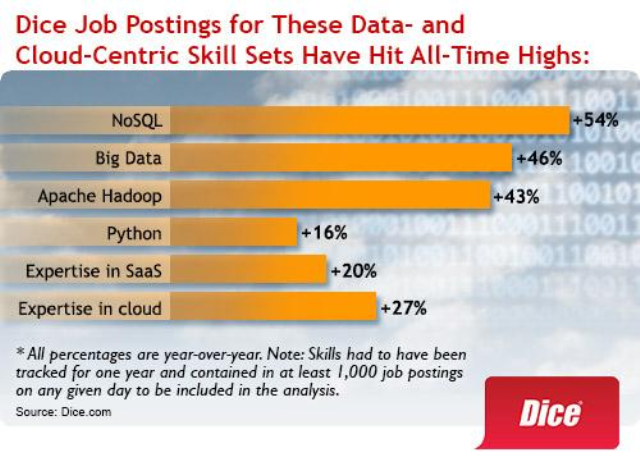

Gartner predicted that, “Big Data Movement will generate 4.4 million new IT jobs by end of 2015 and Hadoop will be in most advanced analytics products by 2015.” With the increasing demand for Hadoop for Big Data related issues, the prediction by Gartner is ringing true.


The President of Dice.com, Mr. Shravan Goli said “The demand for Hadoop developers is up 34% from a year earlier, based on the number of jobs posted and Hadoop-related searches on the site.”

Image Credit: mapr.com

In March 2014, there were approximately 17,000 Hadoop developer jobs advertised online. As of 4 April 2015, there were about 50,000 job openings for Hadoop developers across the world, with close to 25,000 openings in the US alone. Of the 3,000 Hadoop students we have trained so far, the most popular blog article request was one on Hadoop interview questions.

Image Credit: wantedanalytics.com


On “The Hiring Scale” score chart, where 1 denotes “easy-to-fill” and 99 denotes “hard-to-fill” positions, the Hadoop developer role ranks at 83 on average in the US, slightly higher than the average score of 78 for IT jobs. Three years ago, only a few companies were using Hadoop. Now Hadoop is at the top of Big Data analytics, with a growing user base.

Image Credit: slideshare.net

A Hadoop developer is the person behind the coding and programming of Hadoop applications in the Big Data domain. A Hadoop developer should have a strong understanding of, and hands-on experience with, building, designing, installing and configuring Hadoop, because he or she is responsible for Hadoop development and implementation. This includes implementing MapReduce jobs, preprocessing data with Pig and Hive, loading disparate data sets, database tuning and troubleshooting. With so many responsibilities to handle, getting into the role of a Hadoop developer is not easy.

A first impression is all it takes to impress recruiters. The best way to make one is to create a technically sound resume that sells your Hadoop skills with concrete examples of how you have put those skills to use. A resume that does not address the needs of the company you are applying to speaks volumes about your lack of knowledge of the industry and creates the impression that you will not be able to use your skills successfully. ProjectPro's Hadoop faculty has outlined some tips for improving your technical resume:

“For the past couple of years, I have been training aspiring Big Data enthusiasts across the globe on the Hadoop Stack. A couple of really common questions that pop up midway into the course or close to the end are, "How do I tailor my resume to land a job?" or "I am learning this for the first time, how do I showcase the learning to be able to get a job?"

While these are really valid and critical questions, the answer is rather complex in today's IT scenario. There are several articles by eminent and experienced recruiters and hiring managers on what they like or dislike about resumes. These articles are quite comprehensive and clearly define the aesthetic hygiene, the flow and the relevance of a resume. Therefore, I am not going to talk about how to write a resume and get noticed. Good resources for that information include LinkedIn Pulse articles and careercup.com, among other sources.


Big Data Hadoop Developer Resume Tips

Apart from tailoring your Hadoop developer resume, there are four steps you must take if you are trying to get a job in an emerging technology domain, including but not restricted to Big Data, mobile development and cloud computing.

1. Your Hadoop developer resume should carefully outline the roles and responsibilities

The space of designation nomenclature has become really creative and innovative in the last few years. There is no way to generalize a Software Engineer or an ETL Architect in the industry today. Therefore it requires a bit of searching and introspection to zero in on the job profiles one wishes to apply for. Research and identify the roles and responsibilities and shortlist potential positions. The introspection part is needed to figure out if you have the necessary skills or the learning curve to take up the new role.

2. Make your Hadoop developer resume highlight the required core skills

Every job listing you come across on job portals seems to be searching for ‘Demi Gods’ among tech professionals: multiple programming languages, multiple software tools, multiple technology platforms, and there is no end to the list. Identify the skills you already have from the list of desired skills and highlight them on your profile. Try to figure out which skills are the most important for the role and make an attempt to learn them.


3. Document each and every step of your efforts in your Hadoop developer resume

This is possibly one of the most important areas to focus on. Several online platforms allow you to showcase your skills while you contribute and collaborate. Getting shortlisted for a job interview takes much more than just your skills. Here is what I have seen work time and again for professionals in my network.

Active experimentation and blogging about the newly learned skills:

You could use "WordPress" or "Blogger" or send your blogs to care@projectpro.io and we will publish them. Add the blog links to your resume.

Answer questions on forums:

If you have figured out how to work with certain pieces of a new technology, actively search for and answer questions on the same topic on forums such as "Stack Overflow".

Maintain code base and collaborate on GitHub:

Maintain all your experimental code on GitHub and contribute to projects that interest you. Get some friends to work on the project with you. Mention the GitHub project link on your resume.

4. Purposefully Network:

Be genuine and connect with people in the technical domain where you are trying to get into. Engage in meaningful conversations and share your work. Collect feedback and be open to assist and consult for free.”

Once you know that you have optimized your resume to show up in recruiters’ search results, you have to prepare to clear your technical interviews. As Hadoop matures and bugs are eliminated in improved versions, Hadoop interview questions are maturing a good deal as well. Hadoop developer job interviews include many technical, scenario-based, complex and analytical questions, which makes them unlike other technical interviews.

Tom Hart, vice president of Eliassen Group, says: “If you really want to get a big data job, ideally, if you knew something about storing, retrieving and interpreting data, and something more about representing that information in a meaningful way with the use of dashboards and business intelligence tools, and you could convey your knowledge of both of these things in an interview with a hiring manager, your chances of employment would be materially enhanced.”

Big Data Hadoop Interview Questions

Hadoop interviewers don’t bother with syntax questions or other simple questions that can easily be answered with the help of Google. You can answer most Hadoop interview questions if your basic concepts about the components are clear, because most of them test your understanding of the concepts. Hadoop interview questions are generally based on the core components of Hadoop:

- Hadoop basic interview questions

- HDFS interview questions

- Hadoop YARN Interview questions

- Hadoop MapReduce Interview Questions


Hadoop Developer Interview Questions

1) Explain how Hadoop is different from other parallel computing solutions.

| Hadoop | Parallel Computing Systems |
| --- | --- |
| Has a master-slave architecture | Massively parallel architecture |
| Fault-tolerant, shared memory | Independent memory and processor space |
| Centralized job distribution | Random job distribution |
| Coordinated resource management | Self-managed resources and workers |
| Processes structured and semi-structured data | Processes unstructured data |

In parallel computing, different activities happen at the same time, i.e. a single application is spread across multiple processes so that it gets done faster. The differences between Hadoop and other parallel computing solutions may not be immediately obvious. A smartphone is a familiar example of parallel computing, as it has multiple cores and specialized computing chips.

Hadoop is an implementation of the MapReduce programming model, in which a computation consists of a parallel “map” step followed by a “reduce” step that gathers and aggregates the intermediate results. Hadoop is usually applied to voluminous amounts of data in a distributed context.

The distinction between Hadoop and parallel computing solutions is still foggy and has a very thin boundary line.
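To make the map-then-gather idea concrete, here is a minimal plain-Java sketch of word count, the classic MapReduce example. It does not use Hadoop's API. The class and method names are hypothetical, and everything runs in one process, simulating the map, shuffle and reduce phases that Hadoop would distribute across nodes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java simulation of the MapReduce model (no Hadoop classes involved).
final class MapReduceSketch {

    // Map phase: each input line becomes (word, 1) pairs independently,
    // which is what lets Hadoop run many mappers in parallel.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String word : line.toLowerCase().split("\\s+")) {
            if (!word.isEmpty()) {
                out.add(Map.entry(word, 1));
            }
        }
        return out;
    }

    // Shuffle phase: group all intermediate values by key (sorted by key, as Hadoop does).
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> p : pairs) {
            grouped.computeIfAbsent(p.getKey(), k -> new ArrayList<>()).add(p.getValue());
        }
        return grouped;
    }

    // Reduce phase: aggregate the values collected for one key.
    static int reduce(List<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    static Map<String, Integer> wordCount(List<String> lines) {
        List<Map.Entry<String, Integer>> intermediate = new ArrayList<>();
        for (String line : lines) {
            intermediate.addAll(map(line));
        }
        Map<String, Integer> result = new TreeMap<>();
        shuffle(intermediate).forEach((word, counts) -> result.put(word, reduce(counts)));
        return result;
    }

    public static void main(String[] args) {
        System.out.println(wordCount(List.of("big data", "Big Hadoop")));
    }
}
```

Running main prints {big=2, data=1, hadoop=1}. In a real Hadoop job, the map and reduce steps would run as separate tasks on different nodes, and the framework would perform the shuffle over the network.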

2) What are the modes Hadoop can run in?

Hadoop can run in three different modes-

Local/Standalone Mode

- This is the default mode of Hadoop. Everything runs in a single process, and no daemons are running.

- This mode is useful for testing and debugging.

Pseudo Distributed Mode

- This mode simulates fully distributed mode on a single machine: each Hadoop daemon runs as a separate process.

- This mode is useful for development.

Fully Distributed Mode

- This mode requires two or more systems as cluster.

- The NameNode, the DataNodes and all the other processes run on different machines in the cluster.

- This mode is useful for the production environment.


3) What will a Hadoop job do if developers try to run it with an output directory that is already present?

By default, a Hadoop job will not run if the output directory already exists. It fails with an error instead. This protects users: if someone accidentally reruns a job, the new run would otherwise overwrite the previous output and waste all the effort and time spent producing it. This is not a hard limitation, though. Depending on the requirements, you can delete the existing directory before resubmitting the job, or point the job at a new output directory.

4) How can you debug your Hadoop code?

Hadoop has a web interface to debug the code or users can make use of counters to debug Hadoop codes. There are some other simple ways to debug Hadoop code -

The simplest way is to use System.out.println() or System.err.println() statements, which are available in Java. To view the stdout logs, the easiest way is to go to the JobTracker web page and click on the completed job. Next, click on the map or reduce task, then on the task ID (this is where the task number comes in handy), then on the task logs, and finally on the stdout logs.

If your code produces a huge number of logs, there are various other ways to debug it:

i) To examine the details of failed tasks, set the property keep.failed.task.files to true in the job configuration. Hadoop then keeps the files of failed tasks, so you can go to the failed task's directory and run that particular task in isolation in a single JVM.

ii) Another option is to run the same job on a small cluster with the same input. This keeps all the logs in one place, but make sure the logging level is set to INFO.

5) Have you ever built a production process in Hadoop? If yes, what was the process when your Hadoop job failed for any reason? (Open-ended question)

6) Give some examples of companies that are using Hadoop architecture extensively.

- PayPal

- JPMorgan Chase

- Walmart

- Yahoo

- AirBnB


7) If you want to analyze 100TB of data, what is the best architecture for that?

8) Explain how the master-slave architecture works in Hadoop.

Hadoop applies Master Slave architecture at both storage level and computation level.

Master: NameNode

- Manages the file system namespace and regulates client access to files.

- Executes file system namespace operations such as opening, closing and renaming files and directories.

Slave: DataNode

- There is usually one DataNode per node in the cluster. The file system namespace is exposed to clients, which allows them to store files. Internally, each file is split into one or more blocks, which are stored on DataNodes. DataNodes create, delete and replicate blocks when instructed by the NameNode.


9) What is distributed cache and what are its benefits?

A job often needs various files, JARs and archives in order to execute. Distributed Cache is a mechanism provided by the MapReduce framework that copies the required files to the slave nodes before any task of the job starts executing on those nodes.

All the required files are copied only once per job. The efficiency gain from Distributed Cache comes from copying the necessary files to a node once, before the job's tasks start on that node, rather than once per task. After that, tasks on that machine can read the cached files from the local file system. However, in rare circumstances it is better for tasks to copy the files with standard HDFS I/O instead of depending on the Distributed Cache. For instance, if an application has very few reducers and requires huge artifacts larger than 512 MB in the distributed cache, it is better to opt for HDFS I/O.

10) What are the points to consider when moving from an Oracle database to Hadoop clusters? How would you decide the correct size and number of nodes in a Hadoop cluster?

11) How do you benchmark your Hadoop Cluster with Hadoop tools?

Hadoop distributions come with several benchmarking tools, such as TestDFSIO, NNBench, MRBench, TeraGen and TeraSort.

- TestDFSIO can be used to stress test the cluster for network bottlenecks and I/O-related performance issues.

- NNBench can be used to load test the NameNode by creating, deleting and renaming files on HDFS.

- Once the cluster passes the TestDFSIO tests, the TeraSort benchmarking tool can be used to test the configuration. Yahoo used TeraSort to set a record by sorting 1 PB of data in 16 hours on a cluster of 3,800 nodes.

Hadoop Interview Questions on HDFS

12) Explain the major difference between an HDFS block and an InputSplit.

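An HDFS block is a physical chunk of data on disk. For example, suppose the block size is 64 MB and each record is 50 MB. Record 1 then fits entirely in block 1, but record 2 does not fit completely and ends in block 2. An InputSplit, by contrast, is a logical representation of the data processed by one mapper. It is a Java class that points to start and end locations in the data rather than holding the data itself. The RecordReader for a split reads past the split's end to finish its last record, so record 2 is processed by a single mapper even though it spans two blocks.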
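The block arithmetic behind this answer can be checked with a small plain-Java sketch. It assumes 64 MB blocks and two 50 MB records laid out back to back. Block indices are zero-based, so "block 1" in the text corresponds to index 0. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative arithmetic only: HDFS cuts files at fixed byte offsets,
// so a record can start in one block and end in the next.
final class BlockSpanSketch {

    static final long MB = 1024L * 1024L;

    // Zero-based index of the block that holds a given byte offset.
    static long blockIndex(long offset, long blockSize) {
        return offset / blockSize;
    }

    // For records stored back to back (given by their sizes in bytes),
    // return {firstBlock, lastBlock} for each record.
    static List<long[]> blocksPerRecord(long[] recordSizes, long blockSize) {
        List<long[]> spans = new ArrayList<>();
        long start = 0;
        for (long size : recordSizes) {
            long end = start + size - 1; // offset of this record's last byte
            spans.add(new long[] {blockIndex(start, blockSize), blockIndex(end, blockSize)});
            start += size;
        }
        return spans;
    }

    public static void main(String[] args) {
        List<long[]> spans = blocksPerRecord(new long[] {50 * MB, 50 * MB}, 64 * MB);
        for (int i = 0; i < spans.size(); i++) {
            System.out.printf("record %d -> blocks %d..%d%n", i + 1, spans.get(i)[0], spans.get(i)[1]);
        }
    }
}
```

The output shows record 1 in block 0 only and record 2 spanning blocks 0 and 1, which is exactly the case the InputSplit and RecordReader have to handle.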

13) Does HDFS make block boundaries between records?

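No. HDFS divides a file into blocks purely by size, without regard to record boundaries, so a record can span two blocks. Handling records that cross block boundaries is left to the MapReduce InputSplit and RecordReader.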

14) What is streaming access?

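Streaming access means the data is read sequentially at a steady rate rather than through random reads of individual chunks or packets. The application starts reading at the beginning of the file and continues in a sequential manner. HDFS is optimized for this pattern, favoring high throughput over low-latency access.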

15) What do you mean by “Heartbeat” in HDFS?

All slaves periodically send a signal to their master to report that they are alive: each DataNode sends one to the NameNode, and each TaskTracker sends one to the JobTracker. These signals are called heartbeats.

16) Suppose 10 HDFS blocks need to be copied from one machine to another, but the other machine has room for only 7.5 blocks. Can blocks be broken down during replication?

No, blocks cannot be broken. It is the responsibility of the master node to calculate the space required and allocate the blocks accordingly. The master node monitors the number of blocks in use and keeps track of the available space.

17) What is Speculative execution in Hadoop?

Hadoop divides tasks into smaller subtasks, which are spread over the nodes for processing. While a task is running, some of the systems may be slow. This slows down the overall process and increases the time needed to complete the job. Hadoop does not try to diagnose or fix slow nodes. Instead, when most of the tasks have finished, Hadoop creates redundant copies of the remaining tasks and assigns them to nodes that are not executing any other task. This process is referred to as speculative execution. Whichever copy of a task finishes first is used, and Hadoop kills the other copies that are still processing that task.

18) What is WebDAV in Hadoop?

WebDAV is a set of extensions to HTTP that allows remote storage to be mounted as a file system, so HDFS can be accessed like any other standard file system.

19) What is fault tolerance in HDFS?

In MapReduce, when a node fails, the tasks that were running on it are re-executed on other nodes. Even if the failure occurs after the map phase has completed, the map tasks from the failed node may have to be rerun, because their intermediate key-value pairs are stored on that node's local disk. Keeping these intermediate key-value pairs lets the job resume, rather than restart from scratch, when there is a fault in the system. In HDFS itself, fault tolerance comes from replication. Because HDFS assumes that the nodes storing data are unreliable, it keeps copies of each block on multiple nodes, and these copies are used when a node fails.


20) How are HDFS blocks replicated?

The default replication factor is 3, which means HDFS keeps three copies of each block. HDFS follows a simple procedure to place the replicas. The first replica is placed on the machine on which the client is running; if the client is not running on a DataNode, a random node is chosen. The second replica is created on a node in a randomly chosen rack that is different from the rack of the first replica. The third replica is created on a different, randomly selected machine in the same remote rack as the second replica.
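The placement rule can be sketched in plain Java as follows. This is a simplified model, not HDFS's actual BlockPlacementPolicyDefault: it ignores free space, node load and other health checks. It also assumes at least two racks, each with at least two nodes. The rack and node names and the class name are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Random;

// Simplified model of the default placement for replication factor 3.
final class ReplicaPlacementSketch {

    // Returns the rack that contains the given node, or null if it is unknown.
    static String rackOf(Map<String, List<String>> racks, String node) {
        for (Map.Entry<String, List<String>> e : racks.entrySet()) {
            if (e.getValue().contains(node)) {
                return e.getKey();
            }
        }
        return null;
    }

    static List<String> place(Map<String, List<String>> racks, String writer, Random rnd) {
        List<String> allNodes = new ArrayList<>();
        racks.values().forEach(allNodes::addAll);

        // Replica 1: the writer's own node if it is a DataNode, otherwise a random node.
        String first = allNodes.contains(writer) ? writer : allNodes.get(rnd.nextInt(allNodes.size()));
        String firstRack = rackOf(racks, first);

        // Replica 2: a random node on a different rack.
        List<String> remoteNodes = new ArrayList<>();
        racks.forEach((rack, nodes) -> {
            if (!rack.equals(firstRack)) {
                remoteNodes.addAll(nodes);
            }
        });
        String second = remoteNodes.get(rnd.nextInt(remoteNodes.size()));

        // Replica 3: a different node on the same rack as replica 2.
        List<String> sameRack = new ArrayList<>(racks.get(rackOf(racks, second)));
        sameRack.remove(second);
        String third = sameRack.get(rnd.nextInt(sameRack.size()));

        return List.of(first, second, third);
    }

    public static void main(String[] args) {
        Map<String, List<String>> racks = Map.of(
                "rack1", List.of("dn1", "dn2"),
                "rack2", List.of("dn3", "dn4"));
        System.out.println(place(racks, "dn1", new Random(42)));
    }
}
```

Putting two of the three replicas on one remote rack limits cross-rack write traffic. Meanwhile, keeping one replica on a different rack means the block survives the loss of a whole rack.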

21) Which command is used to do a file system check in HDFS?

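hadoop fsck, for example hadoop fsck / to check the whole file system. In Hadoop 2.x and later, the preferred form is hdfs fsck /.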

22) Explain about the different types of “writes” in HDFS.

There are two types of writes: posted and non-posted. With posted writes, the writer does not wait for an acknowledgement after writing. With non-posted writes, it waits for the acknowledgement, which is more expensive in terms of I/O and network bandwidth.

Hadoop MapReduce Interview Questions

23) What is a NameNode and what is a DataNode?

The DataNode is where the data is actually stored before any processing takes place. The NameNode is the master node that holds the file system metadata: which file maps to which blocks, and which blocks are stored on which DataNode.

24) What is Shuffling in MapReduce?

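The process of transferring the map outputs to the reducers is known as shuffling. During the shuffle, the map outputs are sorted and grouped by key, so each reducer receives all the values for the keys assigned to it.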

25) Why would a Hadoop developer develop a Map Reduce by disabling the reduce step?

26) What is the functionality of Task Tracker and Job Tracker in Hadoop? How many instances of a Task Tracker and Job Tracker can be run on a single Hadoop Cluster?

27) How does NameNode tackle DataNode failures?

All DataNodes periodically send notifications, known as heartbeat signals, to the NameNode to indicate that they are alive. Apart from heartbeats, the NameNode also receives block reports from the DataNodes, which list all the blocks stored on each DataNode. If the NameNode stops receiving heartbeats from a DataNode, it marks that DataNode as dead. As soon as a DataNode is marked as dead, the NameNode schedules re-replication of the blocks it held. These blocks are copied from their surviving replicas to other DataNodes until each block's replication factor is restored.
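The time the NameNode waits before declaring a DataNode dead is configurable. In Hadoop 2.x and later, a DataNode is treated as dead once no heartbeat has arrived for 2 × dfs.namenode.heartbeat.recheck-interval + 10 × dfs.heartbeat.interval. With the defaults of 5 minutes and 3 seconds, this comes to 10.5 minutes. The plain-Java sketch below only reproduces that arithmetic. The class and method names are hypothetical.

```java
// Reproduces the dead-node timeout arithmetic; no Hadoop classes are used.
final class HeartbeatTimeoutSketch {

    // Defaults of dfs.namenode.heartbeat.recheck-interval (ms) and dfs.heartbeat.interval (s).
    static final long DEFAULT_RECHECK_MS = 5 * 60 * 1000L;
    static final long DEFAULT_HEARTBEAT_S = 3L;

    static long expireIntervalMs(long recheckMs, long heartbeatSeconds) {
        return 2 * recheckMs + 10 * 1000 * heartbeatSeconds;
    }

    // A DataNode counts as dead once its last heartbeat is older than the expiry interval.
    static boolean isDead(long lastHeartbeatMs, long nowMs, long expireMs) {
        return nowMs - lastHeartbeatMs > expireMs;
    }

    public static void main(String[] args) {
        long expire = expireIntervalMs(DEFAULT_RECHECK_MS, DEFAULT_HEARTBEAT_S);
        System.out.println(expire + " ms = " + (expire / 60000.0) + " minutes");
    }
}
```

Running main prints 630000 ms = 10.5 minutes. The long default avoids triggering expensive re-replication when a DataNode is only briefly unreachable.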


28) What is InputFormat in Hadoop?

As the name suggests, InputFormat specifies how data is read from files into an instance of the Mapper. There are various implementations of InputFormat, such as TextInputFormat for text files and SequenceFileInputFormat for binary SequenceFiles. We can even create our own custom InputFormat implementation. Another important job of InputFormat is to split the data and provide the inputs to the map tasks.

29) What is the purpose of RecordReader in Hadoop?

RecordReader is a class that loads the data from files and converts it into key, value pair format as required by the mapper. InputFormat class instantiates RecordReader after it splits the data.
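For plain text input, the pairs a RecordReader produces are easy to picture. With TextInputFormat, the key is the byte offset at which a line starts and the value is the line itself. The sketch below imitates that behavior in plain Java for a whole file held in memory. It is not Hadoop's LineRecordReader: it ignores split boundaries and "\r\n" line endings, and the class name is hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Imitates the (byte offset, line) pairs that a text record reader hands to a mapper.
final class LineRecordReaderSketch {

    static List<Map.Entry<Long, String>> read(String fileContents) {
        List<Map.Entry<Long, String>> records = new ArrayList<>();
        long offset = 0; // byte offset of the current line, used as the key
        int start = 0;   // char index of the current line
        while (start < fileContents.length()) {
            int newline = fileContents.indexOf('\n', start);
            int end = (newline == -1) ? fileContents.length() : newline;
            String line = fileContents.substring(start, end);
            records.add(Map.entry(offset, line));
            // Advance past the line and its '\n' terminator, counting bytes as the key does.
            offset += line.getBytes(StandardCharsets.UTF_8).length + (newline == -1 ? 0 : 1);
            start = (newline == -1) ? fileContents.length() : newline + 1;
        }
        return records;
    }

    public static void main(String[] args) {
        read("hello\nhadoop\n").forEach(r -> System.out.println(r.getKey() + " -> " + r.getValue()));
    }
}
```

For the input "hello\nhadoop\n", the mapper would receive (0, "hello") and (6, "hadoop").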

30) What is InputSplit in MapReduce?

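InputSplit represents the chunk of data processed by a single mapper. It is a Java class that points to the start and end locations of that chunk in the input (for a file, the path, a start offset and a length). It does not contain the data itself.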

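31) In Hadoop, if a custom partitioner is not defined, how is data partitioned before it is sent to the reducer?

In this case, the default partitioner, HashPartitioner, is used. It assigns each key to a reducer by hashing the key, computing (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks. All records with the same key therefore go to the same reducer.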
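The default partitioner's formula is short enough to show directly. The plain-Java sketch below mirrors the computation in Hadoop's HashPartitioner.getPartition() without depending on Hadoop classes. The class name is hypothetical.

```java
// Mirrors the default hash partitioning formula; no Hadoop classes are used.
final class HashPartitionerSketch {

    static int getPartition(Object key, int numReduceTasks) {
        // Masking with Integer.MAX_VALUE clears the sign bit, so negative hash codes
        // still map to a valid reducer index in [0, numReduceTasks).
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        for (String key : new String[] {"apple", "banana", "cherry"}) {
            System.out.println(key + " -> reducer " + getPartition(key, 3));
        }
    }
}
```

Because the result depends only on the key's hash code, every occurrence of a key lands on the same reducer. However, a skewed key distribution can still overload one reducer.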

32) What is replication factor in Hadoop and what is default replication factor level Hadoop comes with?

To be fault-tolerant, HDFS replicates each data block on multiple nodes in the cluster. The replication factor is the property that decides how many copies of each block are kept. The default replication factor is 3, which means HDFS stores three copies of every block in total.

33) What is SequenceFile in Hadoop and Explain its importance?

A SequenceFile is a flat file of binary key/value pairs, and it can be treated as a container, like a zip archive, for storing small files. For example, metadata such as file name, path and size, or the small files themselves keyed by file name, can be stored in a SequenceFile as key/value pairs. SequenceFiles are useful when there is a large number of very small files to process. Creating a mapper for each small file would be an overhead. Storing a huge number of small files would also increase memory overhead on the NameNode, which has to keep information about every file.

34) If you are the user of a MapReduce framework, then what are the configuration parameters you need to specify?

35) Explain about the different parameters of the mapper and reducer functions.

The Mapper and Reducer classes are generic, with four basic type parameters:

- KEYIN

- VALUEIN

- KEYOUT

- VALUEOUT

36) How can you set an arbitrary number of mappers and reducers for a Hadoop job?

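Hadoop determines the number of mappers from the number of input splits, which by default is based on the DFS block size. The conf.setNumMapTasks(int num) function only provides a hint: it can increase the number of map tasks, but Hadoop will not run fewer map tasks than the split calculation produces. The number of reducers, by contrast, can be set exactly with conf.setNumReduceTasks(int num).

When running the jar from the command line, use the following options to set the number of mappers and reducers: "-D mapred.map.tasks=4" and "-D mapred.reduce.tasks=3". In Hadoop 2.x, the property names are mapreduce.job.maps and mapreduce.job.reduces. The -D options are picked up only if the driver uses ToolRunner or GenericOptionsParser.

The above command requests 4 mappers (a hint, as explained above) and 3 reducers for the job.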
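The split calculation that drives the number of mappers can be sketched in plain Java. This models the new-API FileInputFormat for a single splittable file. The split size is max(minSize, min(maxSize, blockSize)), where the minimum and maximum come from mapreduce.input.fileinputformat.split.minsize and mapreduce.input.fileinputformat.split.maxsize. The last split may also be up to 10% larger than the split size. Block locations and compression are ignored, and the class name is hypothetical.

```java
// Models how many map tasks one splittable file produces; no Hadoop classes are used.
final class SplitCountSketch {

    // A final split may exceed splitSize by up to 10% rather than leave a tiny tail split.
    static final double SPLIT_SLOP = 1.1;

    static long computeSplitSize(long blockSize, long minSize, long maxSize) {
        return Math.max(minSize, Math.min(maxSize, blockSize));
    }

    static int countSplits(long fileSize, long splitSize) {
        int splits = 0;
        long remaining = fileSize;
        while (((double) remaining) / splitSize > SPLIT_SLOP) {
            splits++;
            remaining -= splitSize;
        }
        if (remaining != 0) {
            splits++; // the last, possibly slightly oversized, split
        }
        return splits;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        long splitSize = computeSplitSize(64 * mb, 1L, Long.MAX_VALUE);
        System.out.println(countSplits(200 * mb, splitSize) + " map tasks");
    }
}
```

For a 200 MB file with 64 MB blocks, this gives 4 map tasks. A 130 MB file with 128 MB blocks gives a single map task because of the 10% slop. Raising the minimum split size above the block size is one way to get fewer mappers.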

37) How many Daemon processes run on a Hadoop System?

5 Daemon processes run on a Hadoop system, of which 3 daemon processes run on the master node and 2 run on the slave node.

On Master Node, the 3 daemon processes are-

1) NameNode- This daemon process maintains the metadata for HDFS.

2) JobTracker – Manages MapReduce Jobs.

3) Secondary NameNode – Periodically merges the NameNode's edit log into the file system image (checkpointing). Despite its name, it is not a standby NameNode.

On Slave Node, 2 daemon processes that run are-

4) DataNode- Consists of the actual HDFS data blocks before any processing is done.

5) TaskTracker- This daemon process instantiates and monitors each map reduce task.

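This list describes Hadoop 1.x. In Hadoop 2.x, YARN replaces the JobTracker and TaskTracker with the ResourceManager on the master node and a NodeManager on each slave node. Each MapReduce job also runs its own ApplicationMaster.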

38) What happens if the number of reducers is 0?

When the number of reducers is set to 0, no reducers run, and the sort and shuffle phase is skipped. The output of each mapper is written directly to HDFS as a separate file in the job's output directory.

39) What is meant by Map-side and Reduce-side join in Hadoop?

Map – Side Join

As the name suggests, a join performed on the mapper side is termed a map-side join. To perform this join, the inputs have to be partitioned in the same way and sorted by the same key, and all records for a given key must be in the same partition.

Reduce – Side Join

It is much simpler to implement than a map-side join. The shuffle and sort phase delivers all records with the same key to the same reducer, so the inputs do not need to be sorted and partitioned in advance. However, reduce-side joins are not as efficient as map-side joins, because all the data has to go through the sort and shuffle phase.

The right join implementation depends on the size of the datasets and how they are partitioned. If one dataset is small enough to be copied to every node in the cluster, the side data distribution technique is used. The small dataset is shipped to every mapper, for example through the distributed cache, and the join is performed there.
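One common form of map-side join is the replicated (broadcast) join, used when one input is small enough to fit in memory. The small table is loaded into a hash map on every mapper, and the large table is streamed past it, so no shuffle or reducer is needed. This differs from the sorted, equally partitioned map-side join described above. The plain-Java sketch below uses hypothetical data and class names and simplifies records to string arrays.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Sketch of a replicated (in-memory) map-side join; no Hadoop classes are used.
final class MapSideJoinSketch {

    // "Setup" step: load the small table into a hash map keyed by the join column.
    static Map<String, String> loadSmallTable(List<String[]> smallRows) {
        Map<String, String> lookup = new HashMap<>();
        for (String[] row : smallRows) {
            lookup.put(row[0], row[1]); // row = {key, value}
        }
        return lookup;
    }

    // "map()" step: stream the large table and emit joined records directly.
    static List<String> join(List<String[]> largeRows, Map<String, String> lookup) {
        List<String> joined = new ArrayList<>();
        for (String[] row : largeRows) {
            String match = lookup.get(row[0]);
            if (match != null) { // inner join: rows without a match are dropped
                joined.add(row[0] + "," + row[1] + "," + match);
            }
        }
        return joined;
    }

    public static void main(String[] args) {
        Map<String, String> cities = loadSmallTable(List.of(
                new String[] {"1", "Paris"}, new String[] {"2", "Austin"}));
        List<String[]> orders = List.of(
                new String[] {"1", "order-a"}, new String[] {"2", "order-b"}, new String[] {"3", "order-c"});
        join(orders, cities).forEach(System.out::println);
    }
}
```

Running main prints 1,order-a,Paris and 2,order-b,Austin. The trade-off is memory: every mapper holds the whole small table, so this pattern only works when that table fits comfortably in a task's heap.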

40) How can the NameNode be restarted?

- Run /etc/init.d/hadoop-namenode stop

- Then run /etc/init.d/hadoop-namenode start

41) Hadoop achieves parallelism by distributing tasks across various nodes, so a few slow nodes can rate-limit the rest of the program and slow it down. What mechanism does Hadoop provide to combat this?

This is handled by the speculative execution mechanism.

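42) What is the significance of conf.setMapperClass()?

setMapperClass() specifies the Mapper implementation that the job's map tasks run. Together with the other job configuration settings, such as the reducer class, the input and output formats and the paths, it sets up the parameters a job needs to execute.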

43) What are combiners and when are these used in a MapReduce job?

When the output of a map task is huge, transferring it over the network can slow down the whole process. Hadoop addresses this with combiners, also known as semi-reducers. A combiner runs on the map side and summarizes the map output records that share the same key before they are sent to the reducers for further processing. Combiners are typically used when the reduce operation is commutative and associative, such as a sum or a count, because Hadoop may run the combiner zero, one or more times.
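The effect of a combiner on shuffle volume can be shown with a plain-Java word-count sketch. The combiner here applies the same summing logic as a word-count reducer to a single mapper's output. It does not use Hadoop's API, and the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Shows why a combiner helps: it pre-aggregates one mapper's output locally,
// so fewer (key, value) records cross the network during the shuffle.
final class CombinerSketch {

    // Output of a single mapper: one (word, 1) record per word.
    static List<Map.Entry<String, Integer>> mapOutput(String split) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String word : split.split("\\s+")) {
            if (!word.isEmpty()) {
                out.add(Map.entry(word, 1));
            }
        }
        return out;
    }

    // Combiner: same logic as the reducer (a sum), applied to one mapper's output only.
    // This is safe because addition is commutative and associative.
    static List<Map.Entry<String, Integer>> combine(List<Map.Entry<String, Integer>> mapOut) {
        Map<String, Integer> partial = new TreeMap<>();
        for (Map.Entry<String, Integer> e : mapOut) {
            partial.merge(e.getKey(), e.getValue(), Integer::sum);
        }
        return new ArrayList<>(partial.entrySet());
    }

    public static void main(String[] args) {
        List<Map.Entry<String, Integer>> raw = mapOutput("to be or not to be");
        List<Map.Entry<String, Integer>> combined = combine(raw);
        System.out.println("records shuffled without combiner: " + raw.size());
        System.out.println("records shuffled with combiner:    " + combined.size());
    }
}
```

For the input "to be or not to be", the mapper emits 6 records, and the combiner reduces them to 4 (be, not, or, to) before the shuffle.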

44) How does a DataNode know the location of the NameNode in Hadoop cluster?

Each DataNode reads the NameNode's location from its configuration files (conf/*-site.xml), specifically from the fs.default.name property (fs.defaultFS in Hadoop 2.x) in core-site.xml.

45) How can you check whether the NameNode is working or not?

Use the command /etc/init.d/hadoop-0.20-namenode status, or run the jps command and check whether a NameNode process is listed.


Pig Interview Questions

46) When doing a join in Hadoop, you notice that one reducer is running for a very long time. How would you address this problem in Pig?

To address this problem, we can use Pig's skewed join (JOIN ... USING 'skewed'). A skewed join samples the input to identify keys with a disproportionately large number of records. It then splits the records for those keys across different reducers on the cluster and sends the matching records from the other input to each of them. For the rest of the dataset, a regular join is performed.

47) Are there any problems that can only be solved by MapReduce and cannot be solved by Pig? In which scenarios are MapReduce jobs more useful than Pig?

There are several scenarios or problems that are better solved with MapReduce. For example, wherever a custom partitioner is required, MapReduce is more useful than Pig, as older versions of Pig do not allow one.

48) Give an example scenario on the usage of counters.

The power of Hadoop lies in splitting a task and executing it on different nodes in the cluster, where the cluster can comprise any number of nodes. If records fail in any of the MapReduce phases, it becomes very difficult to find out how many have failed. Although Hadoop provides exhaustive logging control, it is difficult to check the logs on individual nodes. In these scenarios counters come in handy: whenever there is a failed record, the developer just has to increment a counter. The main advantage of using a counter is that it provides the total value across the whole job.
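In Hadoop's Java API, a task increments a counter with a call such as context.getCounter(SomeEnum.VALUE).increment(1), and the framework adds up the values from all tasks. The plain-Java sketch below imitates that behavior without Hadoop classes. The enum, the class name and the rule that a valid record has exactly three comma-separated fields are all hypothetical.

```java
import java.util.EnumMap;
import java.util.List;
import java.util.Map;

// Imitates MapReduce counters: each task counts locally, then totals are summed job-wide.
final class CounterSketch {

    enum RecordQuality { VALID, MALFORMED }

    // One "task": count valid and malformed records in its split instead of logging each one.
    static Map<RecordQuality, Long> runTask(List<String> records) {
        Map<RecordQuality, Long> counters = new EnumMap<>(RecordQuality.class);
        for (String r : records) {
            RecordQuality q = r.split(",").length == 3 ? RecordQuality.VALID : RecordQuality.MALFORMED;
            counters.merge(q, 1L, Long::sum);
        }
        return counters;
    }

    // What the framework does at job level: add up the per-task counters.
    static Map<RecordQuality, Long> aggregate(List<Map<RecordQuality, Long>> perTask) {
        Map<RecordQuality, Long> total = new EnumMap<>(RecordQuality.class);
        for (Map<RecordQuality, Long> task : perTask) {
            task.forEach((k, v) -> total.merge(k, v, Long::sum));
        }
        return total;
    }

    public static void main(String[] args) {
        Map<RecordQuality, Long> task1 = runTask(List.of("a,b,c", "broken"));
        Map<RecordQuality, Long> task2 = runTask(List.of("d,e,f", "g,h"));
        System.out.println(aggregate(List.of(task1, task2)));
    }
}
```

Running main prints {VALID=2, MALFORMED=2}, the job-wide totals, without opening any task logs.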

Hive Interview Questions

49) Explain the difference between ORDER BY and SORT BY in Hive?

ORDER BY

This operation is performed on the complete query result set. The whole data set has to be passed to a single reducer to perform this operation, which may slow down the whole process.

SORT BY

We can treat this as a local ORDER BY operation in reducer. This means that SORT BY is performed on the data inside each reducer but the whole dataset is not ordered.
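The difference in output ordering can be simulated in plain Java. The sketch assumes rows are spread across reducers round-robin. Hive's actual distribution for SORT BY depends on the query (for example, on DISTRIBUTE BY). The class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Contrasts ORDER BY (one reducer, total order) with SORT BY (per-reducer order only).
final class OrderVsSortSketch {

    // ORDER BY: every row goes to a single reducer, which sorts the whole result.
    static List<Integer> orderBy(List<Integer> rows) {
        List<Integer> sorted = new ArrayList<>(rows);
        Collections.sort(sorted);
        return sorted;
    }

    // SORT BY: rows are spread across reducers (round-robin here, an assumption);
    // each reducer sorts only its own share.
    static List<List<Integer>> sortBy(List<Integer> rows, int reducers) {
        List<List<Integer>> outputs = new ArrayList<>();
        for (int r = 0; r < reducers; r++) {
            outputs.add(new ArrayList<>());
        }
        for (int i = 0; i < rows.size(); i++) {
            outputs.get(i % reducers).add(rows.get(i));
        }
        for (List<Integer> out : outputs) {
            Collections.sort(out);
        }
        return outputs;
    }

    public static void main(String[] args) {
        List<Integer> rows = List.of(5, 1, 4, 2, 3, 6);
        System.out.println("ORDER BY: " + orderBy(rows));
        System.out.println("SORT BY:  " + sortBy(rows, 2));
    }
}
```

With two reducers, SORT BY produces [3, 4, 5] and [1, 2, 6]. Each output is sorted, but their concatenation is not. ORDER BY returns [1, 2, 3, 4, 5, 6] at the cost of funnelling everything through a single reducer.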

50) Differentiate between HiveQL and SQL.

We would like to know about your experience in Hadoop interviews. Please comment below to let us know if we missed any important question that is regularly asked in these interviews.

Citing Sources: slideshare.net, dice.com, experfy.com, fromdev.com.


About the Author

ProjectPro

ProjectPro is the only online platform designed to help professionals gain practical, hands-on experience in big data, data engineering, data science, and machine learning related technologies. Having over 270+ reusable project templates in data science and big data with step-by-step walkthroughs,