Solved end-to-end Data Science & Big Data projects

Get ready to use Data Science and Big Data projects for solving real-world business problems

Big Data Project Categories

AWS Projects

30 Projects

Apache Hadoop Projects

27 Projects

Apache Hive Projects

22 Projects

Microsoft Azure Projects

17 Projects

Spark SQL Projects

14 Projects

Apache Pig Projects

2 Projects

Trending Big Data Projects

Build a Data Pipeline with Azure Synapse and Spark Pool

In this Azure project, you will learn to build a data pipeline in Azure using Azure Synapse Analytics, Azure Storage, and an Azure Synapse Spark pool to perform data transformations on an airline dataset and visualize the results in Power BI.

Build Classification and Clustering Models with PySpark and MLlib

In this PySpark project, you will learn to implement classification and clustering models using Spark MLlib.

SQL Project for Data Analysis using Oracle Database-Part 4

In this SQL project for data analysis, you will learn to write efficient queries using the WITH clause and to analyze data using SQL aggregate functions and other constructs such as EXISTS and HAVING.

Data Science Project Categories

Data Science Projects in Retail & Ecommerce

28 Projects

Data Science Projects in Banking and Finance

19 Projects

NLP Projects

14 Projects

PyTorch Projects

6 Projects

Keras Deep Learning Projects

2 Projects

IoT Projects

1 Project

Trending Data Science Projects

NLP and Deep Learning For Fake News Classification in Python

In this project, you will use Python to implement several deep learning methods (RNN, LSTM, and GRU) for fake news classification.

Build a Graph-Based Recommendation System in Python - Part 1

In this Python recommender systems project, you will learn to build a graph-based recommendation system that recommends products in an e-commerce setting.

NLP Project for Multi Class Text Classification using BERT Model

In this NLP project, you will learn how to build a multi-class text classification model using the pre-trained BERT model.

Customer Love

Unlimited 1:1 Live Interactive Sessions

- 60-minute live session

Schedule 60-minute live interactive 1-to-1 video sessions with experts.

- No extra charges

Unlimited number of sessions with no extra charges. Yes, unlimited!

- We match you to the right expert

Give us 72 hours' notice with a problem statement so we can match you to the right expert.

- Schedule recurring sessions

Schedule recurring sessions: weekly, biweekly, or monthly.

- Pick your favorite expert

If you find a favorite expert, schedule all future sessions with them.

Use the 1-to-1 sessions to:

- Troubleshoot your projects

- Customize our templates to your use case

- Build a project portfolio

- Brainstorm architecture design

- Get help with any project, even one from outside ProjectPro

- Practice mock interviews

- Get career guidance

- Have your resume reviewed

Latest Blogs

How to Learn Tableau for Data Science in 2024?

Wondering how to learn Tableau for data science? This blog offers easy-to-follow tips to help you master Tableau for visualizing and analyzing data.

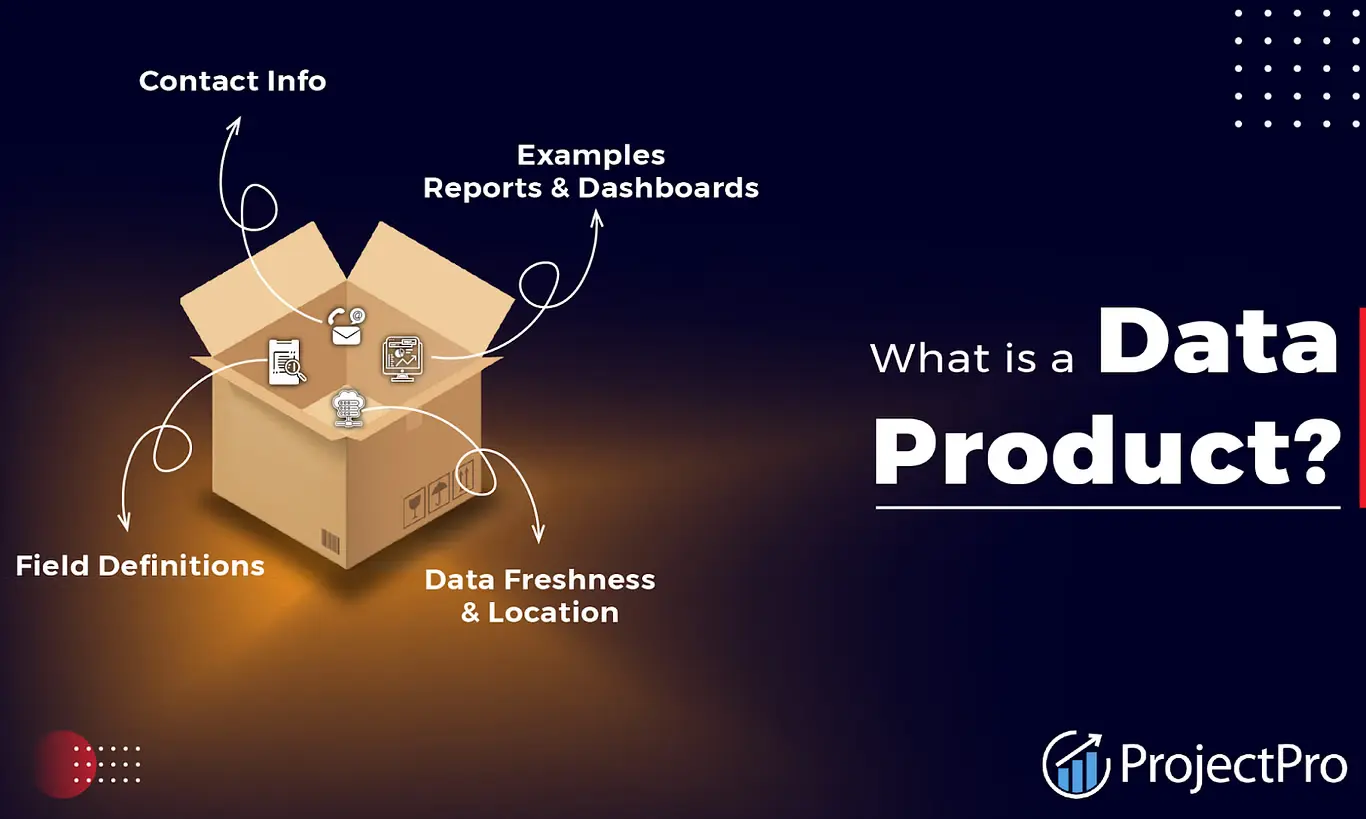

Data Products: Your Blueprint to Maximizing ROI

Explore ProjectPro's blueprint for using data products to maximize ROI and transform your business strategy.

30+ Python Pandas Interview Questions and Answers

Prepare for data science interviews like a pro! Check out our blog with 30+ Python pandas interview questions and answers.

We power Data Science & Data Engineering

projects at

Join more than 115,000 developers worldwide