Solved end-to-end Data Science & Big Data projects

Get ready to use Data Science and Big Data projects for solving real-world business problems

Big Data Project Categories

Spark Streaming Projects

6 Projects

Apache Impala Projects

4 Projects

Apache Flume Projects

4 Projects

Spark GraphX Projects

3 Projects

Neo4j Projects

2 Projects

Redis Projects

1 Project

Trending Big Data Projects

A Hands-On Approach to Learn Apache Spark using Scala

Get Started with Apache Spark using Scala for Big Data Analysis

Yelp Data Processing using Spark and Hive Part 2

In this Spark project, we will continue building the data warehouse from the previous project, Yelp Data Processing Using Spark And Hive Part 1, and do further data processing to develop diverse data products.

Build an ETL Pipeline on EMR using AWS CDK and Power BI

In this ETL project, you will learn to build an ETL pipeline on Amazon EMR with AWS CDK and Apache Hive. You'll deploy the pipeline using S3, Cloud9, and EMR, and then use Power BI to create dynamic visualizations of your transformed data.

Data Science Project Categories

Data Science Projects in Python

138 Projects

Data Science Projects in R

22 Projects

Data Science Projects in Banking and Finance

19 Projects

Machine Learning Projects in R

16 Projects

NLP Projects

14 Projects

IoT Projects

1 Project

Trending Data Science Projects

LLM Project to Build and Fine Tune a Large Language Model

In this LLM project for beginners, you will learn to build a knowledge-grounded chatbot using LLMs and learn how to fine-tune it.

GCP MLOps Project to Deploy ARIMA Model using uWSGI Flask

Build an end-to-end MLOps Pipeline to deploy a Time Series ARIMA Model on GCP using uWSGI and Flask

Mastering A/B Testing: A Practical Guide for Production

In this A/B Testing for Machine Learning Project, you will gain hands-on experience in conducting A/B tests, analyzing statistical significance, and understanding the challenges of building a solution for A/B testing in a production environment.

Customer Love

Unlimited 1:1 Live Interactive Sessions

- 60-minute live session

Schedule 60-minute live interactive 1-to-1 video sessions with experts.

- No extra charges

Unlimited number of sessions with no extra charges. Yes, unlimited!

- We match you to the right expert

Give us 72 hours' notice and a problem statement so we can match you to the right expert.

- Schedule recurring sessions

Schedule recurring sessions weekly, bi-weekly, or monthly.

- Pick your favorite expert

If you find a favorite expert, schedule all future sessions with them.

Use the 1-to-1 sessions to

- Troubleshoot your projects

- Customize our templates to your use-case

- Build a project portfolio

- Brainstorm architecture design

- Bring any project, even from outside ProjectPro

- Mock interview practice

- Career guidance

- Resume review

Latest Blogs

Using CookieCutter for Data Science Project Templates

Explore the simplicity, versatility, and efficiency of Cookiecutter for data science project templating and collaboration.

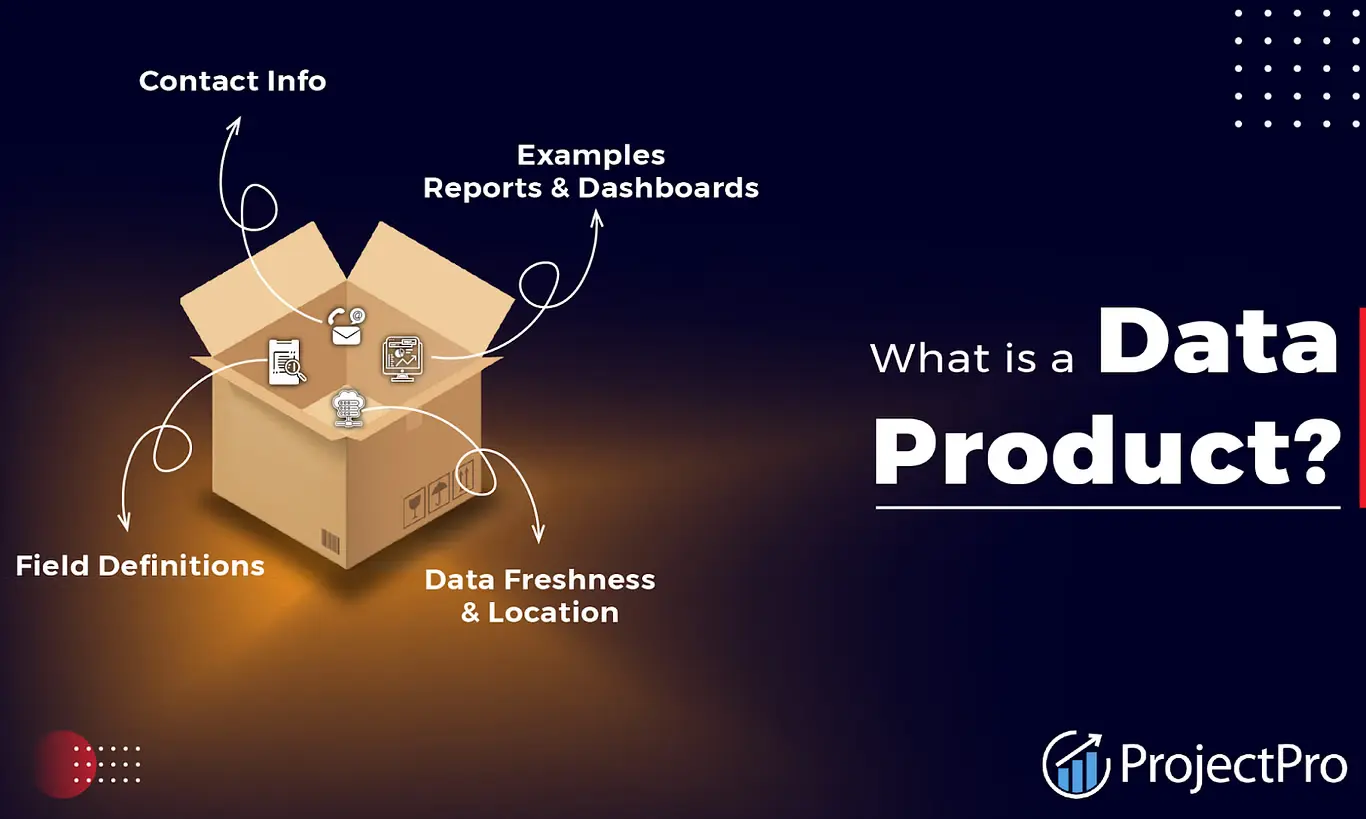

Data Products: Your Blueprint to Maximizing ROI

Explore ProjectPro's Blueprint on Data Products for Maximizing ROI to Transform your Business Strategy.

Best MLOps Certifications To Boost Your Career In 2024

Chart your course to success with our ultimate MLOps certification guide. Explore the best options and pave the way for a thriving MLOps career.

We power Data Science & Data Engineering projects at

Join more than 115,000 developers worldwide

Get a free demo