Solved end-to-end Apache Hadoop Projects

Get ready to use Apache Hadoop projects to solve real-world business problems

START PROJECTApache Hadoop Projects

Customer Love

Latest Blogs

Best MLOps Certifications To Boost Your Career In 2024

Chart your course to success with our ultimate MLOps certification guide. Explore the best options and pave the way for a thriving MLOps career. | ProjectPro

A comprehensive guide on LLama2 architecture, applications, fine-tuning, tokenization, and implementation in Python.

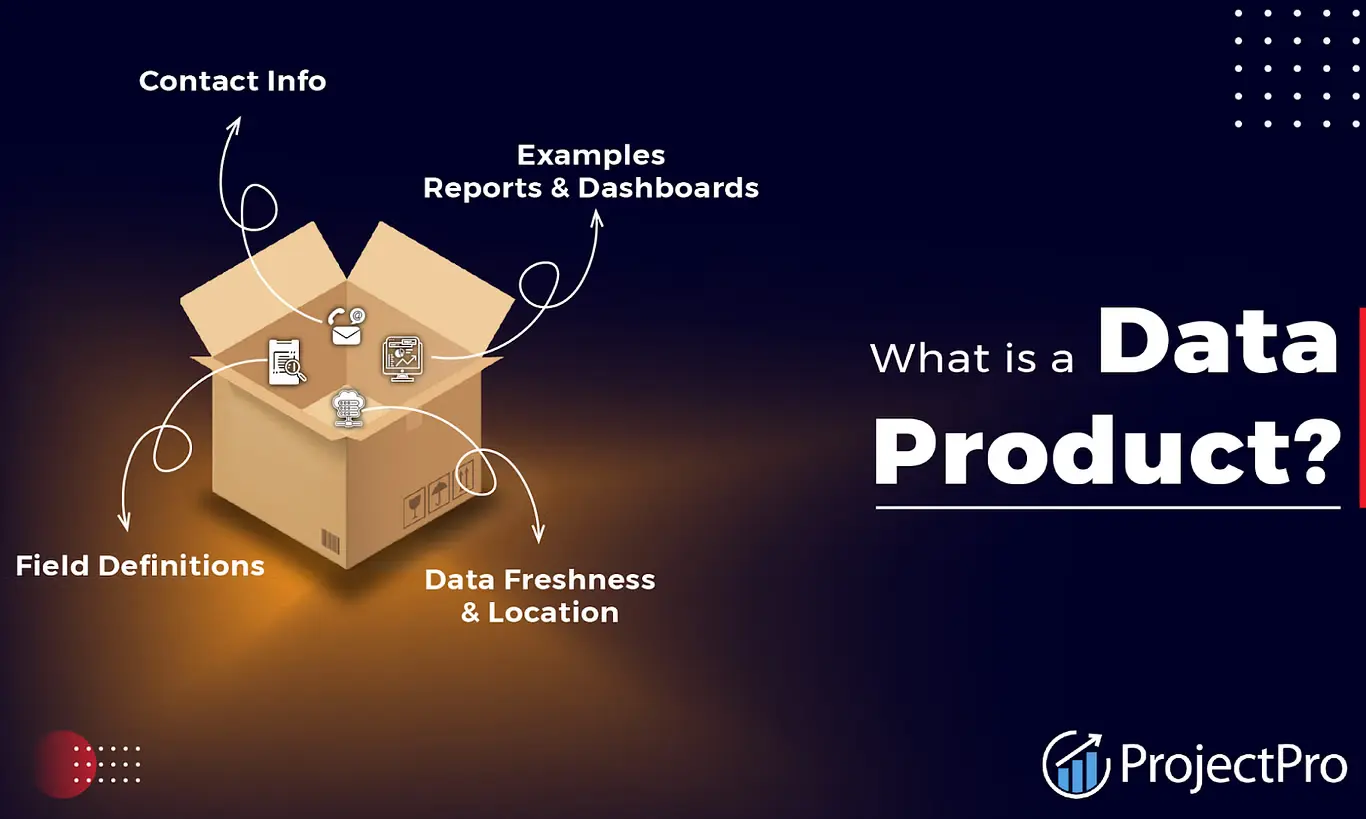

Data Products-Your Blueprint to Maximizing ROI

Explore ProjectPro's Blueprint on Data Products for Maximizing ROI to Transform your Business Strategy.

Hadoop Projects

Professionals and students who finish learning Hadoop with ProjectPro often ask our industry experts:

“How and where can I get projects in Hadoop, Hive, Pig or HBase to get more exposure to the big data tools and technologies?”

The reason behind the question is clear: Apache Hadoop has created a lot of buzz in the domain of distributed computing and parallel processing. When it comes to applying data analysis techniques and machine learning algorithms to large datasets, many companies rely on tools from the Apache Software Foundation, such as Apache Hadoop, Apache Spark, and Apache Storm, to support business growth. If you are interested in exploring big data technology, take a look at ProjectPro’s Apache Hadoop projects, which are designed for both beginners and experienced professionals. They give you an in-depth understanding of the complex Hadoop architecture and its components. You will learn how distributed storage is implemented with the Hadoop Distributed File System (HDFS) and how Hadoop YARN serves as the framework for cluster resource management and job scheduling. Along the way, you will also learn about the roles of Hadoop Common and Hadoop MapReduce in the Hadoop architecture. The projects use datasets from diverse business domains, including retail, travel, banking, finance, and media, so you can choose the domain that interests you most.
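To make the MapReduce idea concrete, here is a minimal single-process sketch in plain Python of the map, shuffle, and reduce phases applied to a word count. It does not use Hadoop's APIs; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are illustrative assumptions. On a real cluster, the framework would distribute these phases across nodes, with HDFS storing the input and YARN scheduling the work.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by key, as the framework does between phases."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines: List[str]) -> Dict[str, int]:
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in sorted(grouped.items()))


if __name__ == "__main__":
    print(word_count(["HDFS stores data", "YARN schedules jobs", "MapReduce processes data"]))
    # {'data': 2, 'hdfs': 1, 'jobs': 1, 'mapreduce': 1, 'processes': 1, 'schedules': 1, 'stores': 1, 'yarn': 1}
```

Keeping the three phases as separate functions mirrors how Hadoop lets you supply only the map and reduce logic while the framework handles the shuffle.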

Why should you enrol in ProjectPro’s Big Data Hadoop projects?

-

To better understand the Hadoop ecosystem and its components.

-

To keep your skills current as new versions of Apache Hadoop are released.

-

To work with the latest big data and data analysis tools on the market and stay up to date with industry trends.

-

To use the source code of ProjectPro’s Apache Hadoop projects as a starting point for building your own big data and data analysis services that meet your business requirements.

Key Learnings from ProjectPro’s Apache Hadoop Projects

-

ProjectPro’s Hadoop projects will help you learn how to combine various open-source data analysis and big data tools into real-world projects.

-

These practice projects will not only teach you about the components of the Hadoop ecosystem but also show you how organizations across diverse business domains use them to build data lakes.

-

Through these project ideas, you will build up-to-date, hands-on expertise in Apache Hadoop, one of the most in-demand big data technologies.

What are the best Apache Hadoop project ideas for beginners?

For big data beginners who want to learn the basics of big data and data analysis tools, ProjectPro offers Hadoop project ideas that help them get up to speed quickly.

-

Implementing Slowly Changing Dimensions in a Data Warehouse using Hive and Spark

-

Using Apache Hive for Real-Time Queries and Analytics of Log Files

What will you get when you enrol in ProjectPro’s Hadoop projects?

-

Source Code: Examine and solve end-to-end, real-world problems from the banking, e-commerce, and entertainment sectors using this source code.

-

Recorded Demo: Watch a video explanation on how to implement the solution step-by-step.

-

Complete Solution Kit: Get access to the solution design, documentation, and supporting reference material (where available) for every Hadoop project.

-

Mentor Support: Get your technical questions answered for free by experienced industry experts.

-

Hands-On Knowledge: Equip yourself with practical skills in the Hadoop ecosystem.

Big Data Analytics Real-Time Projects

Today, every organization needs data infrastructure that can deliver contextual experiences to its customers in real time, whether that is the language of a transactional email, an advertisement shown on social media sites such as Instagram, Twitter, and Facebook, or the home screen of a mobile application.

Raw data in a data lake must first be converted into structured data, and it often has to be processed in real time to deliver optimal results and quick response times. The Hadoop ecosystem offers several tools that let big data developers manage data in real time. You can master these big data technologies by practising with the hands-on, real-time big data projects below.

Real-time Queries and Analytics using Apache Hive

In this project, you will get a log file that contains details about users who have visited various pages on a particular site. The aim is to implement a Hadoop job that performs data analysis over the log file and answers queries such as "Which page did user C visit more than four times a day?" and "Which pages were visited by users exactly ten times in a day?"
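In Hive, questions like these usually become a `GROUP BY` query with a `HAVING` filter. The plain-Python sketch below mirrors that logic so the counting is easy to follow. The log layout (`user,page,timestamp` with ISO-8601 timestamps) and the function names are assumptions for illustration, not the project's actual dataset format.

```python
from collections import Counter
from datetime import date, datetime
from typing import Iterable, Iterator, List, Tuple


# Assumed (hypothetical) log layout: "user,page,ISO-8601 timestamp", one visit per line.
def parse(lines: Iterable[str]) -> Iterator[Tuple[str, str, date]]:
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        user, page, ts = line.strip().split(",")
        yield user, page, datetime.fromisoformat(ts).date()


def pages_user_visited_more_than(lines: Iterable[str], user: str, times: int) -> List[str]:
    """Pages that `user` visited more than `times` times on a single day."""
    counts = Counter((page, day) for u, page, day in parse(lines) if u == user)
    return sorted({page for (page, _day), n in counts.items() if n > times})


def pages_visited_exactly(lines: Iterable[str], times: int) -> List[str]:
    """Pages whose total visits (all users) on some day equal `times` exactly."""
    counts = Counter((page, day) for _user, page, day in parse(lines))
    return sorted({page for (page, _day), n in counts.items() if n == times})


if __name__ == "__main__":
    log = [f"C,/home,2024-01-01T{h:02d}:00:00" for h in range(5)]
    log += ["C,/cart,2024-01-01T09:00:00"]
    log += [f"D,/help,2024-01-02T{h:02d}:00:00" for h in range(10)]
    print(pages_user_visited_more_than(log, "C", 4))  # ['/home']
    print(pages_visited_exactly(log, 10))             # ['/help']
```

Counting on the `(page, day)` pair is what turns "more than four times" into a per-day condition rather than a lifetime total.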

Check Out Apache Hive Real Time Projects to Build Your Portfolio

Stream Processing in Apache Kafka with KSQL

If you want hands-on experience building an ETL pipeline on streaming datasets with Kafka, along with exposure to KSQL, this project is a good choice. It uses data published by the New York City Taxi and Limousine Commission. You will learn how to join two separate data streams, ingest data in real time, and store the streaming data in a database.
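A KSQL stream-stream join matches events that share a key and occur within a time window (the `WITHIN` clause). The Python sketch below reproduces only that matching rule over two in-memory lists; the event fields (a trip ID key, `distance_mi`, `fare_usd`) are hypothetical, and a real streaming join must also buffer unbounded, out-of-order input, which this sketch ignores.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Event:
    key: str  # join key, e.g. a hypothetical trip ID
    ts: int   # event time in seconds
    payload: Dict[str, object] = field(default_factory=dict)


def windowed_join(left: List[Event], right: List[Event], window_s: int) -> List[dict]:
    """Join events with the same key whose timestamps are at most `window_s` apart."""
    right_by_key: Dict[str, List[Event]] = {}
    for right_ev in right:
        right_by_key.setdefault(right_ev.key, []).append(right_ev)
    joined = []
    for left_ev in left:
        for right_ev in right_by_key.get(left_ev.key, []):
            if abs(left_ev.ts - right_ev.ts) <= window_s:
                joined.append({"key": left_ev.key, **left_ev.payload, **right_ev.payload})
    return joined


if __name__ == "__main__":
    trips = [Event("t1", 100, {"distance_mi": 2.5}), Event("t2", 200, {"distance_mi": 7.0})]
    fares = [Event("t1", 130, {"fare_usd": 12.0}), Event("t2", 900, {"fare_usd": 30.0})]
    print(windowed_join(trips, fares, window_s=60))
    # [{'key': 't1', 'distance_mi': 2.5, 'fare_usd': 12.0}]
```

Indexing the right-hand stream by key first keeps the join from comparing every pair of events.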

Real-Time Data Collection and Spark Streaming Aggregation

In this project, you will learn how to perform real-time data analysis, set up the tools you need in a virtual environment on your computer, and connect Kafka, Spark, HBase, and Hadoop. It involves setting up and using your own ZooKeeper instance, fetching data with Spark Streaming, building and running a data simulation, and visualizing the results with pie charts. The project videos also demonstrate real-time aggregation of movements along several dimensions, including effective distance, duration, and trajectory.
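A common way to aggregate a stream is over fixed time windows. Below is a minimal Python sketch of a tumbling-window aggregation that totals the distance covered per device in each window; the `(device_id, ts_seconds, distance_m)` event shape and the function name are assumptions, and Spark Streaming would apply the same idea incrementally as new data arrives rather than over a finished list.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical event shape: (device_id, ts_seconds, distance_m)
Event = Tuple[str, int, float]


def tumbling_window_totals(
    events: Iterable[Event], window_s: int
) -> Dict[Tuple[str, int], Tuple[float, int]]:
    """Map (device_id, window_start) to (total distance, number of events)."""
    totals: Dict[Tuple[str, int], list] = defaultdict(lambda: [0.0, 0])
    for device, ts, distance in events:
        window_start = ts - ts % window_s  # align the event to the start of its window
        agg = totals[(device, window_start)]
        agg[0] += distance
        agg[1] += 1
    return {key: (dist, count) for key, (dist, count) in totals.items()}


if __name__ == "__main__":
    events = [("bus1", 5, 100.0), ("bus1", 59, 50.0), ("bus1", 61, 20.0), ("bus2", 10, 5.0)]
    for key, value in sorted(tumbling_window_totals(events, window_s=60).items()):
        print(key, value)
```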

Hadoop Projects for Practising Data Processing

The best way to learn any big data technology is through hands-on experience. To practise and build up your big data analytics skills, you need experience with a range of data analysis tools and techniques. Below are some sample Hadoop projects with real-world applications that will help you master Hadoop and big data.

Data Processing Using Spark SQL

Through this project, you will learn how to use Spark as a distributed processing engine for big data, along with the basics of graph theory and directed acyclic graphs (DAGs). You will explore the Resilient Distributed Dataset (RDD) architecture that Spark is built on and get an introduction to the Spark Streaming, Spark MLlib, Spark GraphX, and Spark SQL modules. Performance tuning will help you get optimal results, and you will learn how to benchmark queries using Hive, Spark SQL, Impala, and other Hadoop data warehouse tools.
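Spark represents a job as a directed acyclic graph of operations and runs each step only after the steps it depends on. The sketch below illustrates that scheduling idea with Python's standard `graphlib` module (Python 3.9+): it groups a hypothetical query plan into waves of stages whose dependencies are already complete, so each wave could run in parallel. The stage names are made up, and this is not Spark's actual scheduler.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set

# Hypothetical query plan: each stage maps to the set of stages it depends on.
PLAN: Dict[str, Set[str]] = {
    "read_orders": set(),
    "read_customers": set(),
    "filter_orders": {"read_orders"},
    "join": {"filter_orders", "read_customers"},
    "aggregate": {"join"},
}


def parallel_waves(dag: Dict[str, Set[str]]) -> List[List[str]]:
    """Group stages into waves; every stage in a wave has all its dependencies done.

    Raises graphlib.CycleError if the graph contains a cycle (i.e. is not a DAG).
    """
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    for i, wave in enumerate(parallel_waves(PLAN)):
        print(i, wave)
    try:
        parallel_waves({"a": {"b"}, "b": {"a"}})
    except CycleError as err:
        print("not a DAG:", err.args[0])
```

The acyclic property is what makes this possible: with a cycle, no stage would ever have all of its inputs ready.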

Building a Music Recommendation Engine

Recommendation engines help businesses reach their target audiences. In this project, you will learn how to build a recommendation engine on a music dataset. You will understand the horizontal scalability of Hadoop compared with the vertical scalability of an RDBMS, and you will learn to analyze large datasets efficiently. You will also see how running Pig in Tez mode can help overcome bandwidth challenges. The project requires you to understand the Haversine formula and apply it through a Pig Latin UDF, and to work with Hierarchical Data Format (HDF) files in HDFS.
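The Haversine formula gives the great-circle distance between two points from their latitudes and longitudes. The project applies it through a Pig Latin UDF; the plain Python function below shows only the math, assuming a mean Earth radius of 6,371 km. The function name is illustrative.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius (assumed)


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km between two (latitude, longitude) points in degrees.

    a = sin^2(dphi / 2) + cos(phi1) * cos(phi2) * sin^2(dlambda / 2)
    d = 2 * R * asin(sqrt(a))
    """
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    # Clamp to 1.0 so floating-point error near antipodal points cannot break asin.
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


if __name__ == "__main__":
    # One degree of longitude along the equator is about 111.2 km.
    print(round(haversine_km(0.0, 0.0, 0.0, 1.0), 1))  # 111.2
```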

Choosing the Right DBMS: SQL vs NoSQL

A good chunk of data engineering involves making important design decisions. There are dozens of database solutions available, and the right choice depends on your requirements. In this project, an industry expert walks through the main classes of NoSQL databases and explains their features, functionality, and limitations, including those involved in streaming data from traditional RDBMSs. By the end of the project, you will know how to select a database based on business specifications and non-functional requirements, and you will have built up practical knowledge of both SQL and NoSQL databases.

Processing Unstructured Data Using Spark

Big data can be unstructured or semi-structured, not just structured. In such scenarios, developers may need to derive structured data from unstructured data. This project teaches you how to create data schemas and handle incomplete data with Apache Spark. You will also learn how to automate the data pipeline and integrate Spark with Hive.
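The core step, imposing a schema on raw text and deciding what to do with incomplete records, can be sketched in plain Python. The raw line format (`date key=value ...`), the `Record` fields, and the rule of keeping missing fields as `None` while dropping unparseable lines are all assumptions for illustration; in Spark you would typically declare an explicit schema and apply similar rules at scale.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical raw format: "2024-01-05 user=alice action=play"
LINE_RE = re.compile(r"^(?P<date>\d{4}-\d{2}-\d{2})(?:\s+(?P<rest>.*))?$")


@dataclass
class Record:
    date: str
    user: Optional[str]    # None when the field is missing from the raw line
    action: Optional[str]


def to_record(line: str) -> Optional[Record]:
    """Parse one raw line into a Record, or return None if it has no valid date."""
    match = LINE_RE.match(line.strip())
    if match is None:
        return None  # unparseable: drop it (or route it to a quarantine table)
    rest = match.group("rest") or ""
    fields = dict(token.split("=", 1) for token in rest.split() if "=" in token)
    return Record(date=match.group("date"), user=fields.get("user"), action=fields.get("action"))


if __name__ == "__main__":
    raw = ["2024-01-05 user=alice action=play", "2024-01-05 action=skip", "corrupted line"]
    records = [r for r in (to_record(line) for line in raw) if r is not None]
    print(records)
```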

Hadoop Projects for Students

If you are a student looking for big data analytics projects to inspire your final-year project, you may find something of interest here. Or perhaps you want to build up your project portfolio and learn more about Apache Hadoop and its associated open-source technologies. Either way, here are some projects you should explore on the ProjectPro platform:

SQL Analytics with Hive

With this Hive project, you will learn to use Hive's analytical features to run analytical queries over large datasets. You will learn how serialization and deserialization (SerDe) work in Hive. The project also shows you how to move data from MySQL to HDFS, using Sqoop, Spark, and Hive for data ingestion and transformation. You will learn more about creating and executing Sqoop jobs and using Parquet and XPath to access the schema.
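To show what a SerDe does, here is a minimal Python sketch that serializes a row into a delimited text line and deserializes it back using a typed schema. It borrows two defaults from Hive's text format, the Ctrl-A (`\x01`) field delimiter and `\N` for NULL, but the table schema is hypothetical, and the sketch does not escape delimiters inside values, which a real SerDe must handle.

```python
from typing import Callable, Dict, List, Optional, Tuple

FIELD_DELIM = "\x01"  # Hive's default field delimiter for text tables (Ctrl-A)
NULL_TOKEN = "\\N"    # Hive's default text representation of NULL

# Hypothetical table schema: (column name, type converter) pairs, in column order.
SCHEMA: List[Tuple[str, Callable[[str], object]]] = [("id", int), ("name", str), ("score", float)]


def serialize(row: Dict[str, Optional[object]]) -> str:
    """Turn a row (dict) into one delimited text line."""
    parts = []
    for name, _ in SCHEMA:
        value = row.get(name)
        parts.append(NULL_TOKEN if value is None else str(value))
    return FIELD_DELIM.join(parts)


def deserialize(line: str) -> Dict[str, Optional[object]]:
    """Turn one delimited text line back into a typed row."""
    raw_values = line.split(FIELD_DELIM)
    return {
        name: None if raw == NULL_TOKEN else convert(raw)
        for (name, convert), raw in zip(SCHEMA, raw_values)
    }


if __name__ == "__main__":
    line = serialize({"id": 7, "name": None, "score": 9.5})
    print(repr(line))
    print(deserialize(line))  # {'id': 7, 'name': None, 'score': 9.5}
```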

Design a Network Crawler by Mining GitHub Social Profiles

Through this big data project, you can take a closer look at how to mine GitHub and make sense of the connections between its users. GitHub has evolved into a social coding platform, so it can be used to explore the social networks among its users. You will learn how to build a network model in HBase and run your own network crawler. The project uses Apache Spark to analyze the network, with GraphFrames or Spark GraphX running the graph algorithms that mine the connections associated with selected GitHub projects.
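A network crawler is essentially a breadth-first traversal that records edges as it discovers new users. The Python sketch below crawls up to a fixed depth. `get_neighbors` is a hypothetical callback standing in for whatever fetches a user's connections (in the project, data from GitHub), and the in-memory graph in the example is made up.

```python
from collections import deque
from typing import Callable, Iterable, List, Set, Tuple


def crawl(
    start: str,
    get_neighbors: Callable[[str], Iterable[str]],
    max_depth: int,
) -> Tuple[Set[str], List[Tuple[str, str]]]:
    """Breadth-first crawl from `start`, expanding users up to `max_depth` hops away."""
    seen = {start}
    edges: List[Tuple[str, str]] = []
    queue = deque([(start, 0)])
    while queue:
        user, depth = queue.popleft()
        if depth == max_depth:
            continue  # depth limit reached: keep this user but do not expand it
        for other in get_neighbors(user):
            edges.append((user, other))
            if other not in seen:
                seen.add(other)
                queue.append((other, depth + 1))
    return seen, edges


if __name__ == "__main__":
    follows = {"alice": ["bob", "carol"], "bob": ["dave"], "carol": ["alice"], "dave": ["erin"]}
    users, edges = crawl("alice", lambda u: follows.get(u, []), max_depth=2)
    print(sorted(users))  # ['alice', 'bob', 'carol', 'dave']
    print(edges)          # [('alice', 'bob'), ('alice', 'carol'), ('bob', 'dave'), ('carol', 'alice')]
```

The `seen` set prevents the crawler from revisiting users, so cycles in the network (such as `carol` following `alice` back) do not cause infinite loops.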

Building an IoT Infrastructure

This project is a good place to start if you want to understand the general architecture of a smart IoT infrastructure. It uses streaming architectures such as Lambda and Kappa, with MQTT as the lightweight messaging protocol for IoT devices. You will understand the differences between Arduino and Raspberry Pi, and between SmartPie technologies and implementation software. The project uses Redis for real-time auto-tracking and Apache Kafka as the data hub for the streaming architecture. It involves extracting each sensor's data from HBase and creating a chain base, and it integrates HBase with Spark using the Spark HBase connector. The project's major features are leakage detection, regulation of supplies to the various chains of the pipeline based on event detection, and regulation of pipeline flow, including shutting down and restarting the flow when events are detected.
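As a hypothetical illustration of how leak detection could drive flow regulation, the Python sketch below uses a simple mass-balance check (an assumed rule, not necessarily the project's): if a pipeline segment's upstream sensor reports noticeably more flow than its downstream sensor, the segment is flagged and a shutdown command is issued for it. The `SegmentReading` fields, units, and tolerance are assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SegmentReading:
    segment: str
    inflow_lpm: float   # flow at the upstream sensor, litres per minute (assumed unit)
    outflow_lpm: float  # flow at the downstream sensor, litres per minute


def detect_leaks(readings: List[SegmentReading], tolerance_lpm: float) -> List[str]:
    """Flag segments that lose more flow between their sensors than the tolerance allows."""
    return [r.segment for r in readings if r.inflow_lpm - r.outflow_lpm > tolerance_lpm]


def valve_commands(readings: List[SegmentReading], tolerance_lpm: float) -> Dict[str, str]:
    """Shut down leaking segments and keep (or restart) flow everywhere else."""
    leaking = set(detect_leaks(readings, tolerance_lpm))
    return {r.segment: "shutdown" if r.segment in leaking else "open" for r in readings}


if __name__ == "__main__":
    readings = [SegmentReading("A", 100.0, 99.5), SegmentReading("B", 100.0, 80.0)]
    print(valve_commands(readings, tolerance_lpm=2.0))  # {'A': 'open', 'B': 'shutdown'}
```

The tolerance absorbs normal sensor noise; in a streaming setup, a rule like this would run on each new batch of readings.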

Big Data Analytics Projects

Explore these big data projects that apply analytics to support profitable business decision-making:

Zeppelin for Data Analysis Collaboration

Through this project, you can get an in-depth understanding of Apache Zeppelin and how it works. You will learn to install Zeppelin interpreters and run Spark, Hive, and Pig code in your Zeppelin notebook. A notebook brings your code, its execution, and its visualizations together in one place that collaborators can share. You will also learn about other notebooks in the data ecosystem, such as Jupyter and the Dat cloud notebooks.

Airline Dataset Analysis

This project is all about analyzing an airline dataset using Apache Hive, Pig, and Impala. You will learn more about ingesting data through data infrastructure such as data warehouses and backend services. Apache Pig handles data preprocessing, while Hive and Impala are the primary tools for partitioning and clustering the data. The project also gives some insight into building time series models.
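Hive partitioning splits a table into directories by column value (for example `year=2008`), and clustering (`CLUSTERED BY ... INTO n BUCKETS`) further splits each partition into a fixed number of buckets by hashing a column. The Python sketch below mimics that layout for flight records. The `year` and `carrier` keys, the bucket count, the `bucket_NNNNN` path names, and the CRC32 hash are assumptions; Hive's own hash function would assign different bucket numbers.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, List

NUM_BUCKETS = 4  # hypothetical bucket count


def bucket_for(key: str, num_buckets: int = NUM_BUCKETS) -> int:
    """Deterministically map a clustering key to a bucket number in [0, num_buckets)."""
    return zlib.crc32(key.encode("utf-8")) % num_buckets


def layout(flights: Iterable[dict]) -> Dict[str, List[dict]]:
    """Group flight records by partition directory and bucket (hypothetical naming)."""
    files: Dict[str, List[dict]] = defaultdict(list)
    for flight in flights:
        path = f"year={flight['year']}/bucket_{bucket_for(flight['carrier']):05d}"
        files[path].append(flight)
    return dict(files)


if __name__ == "__main__":
    flights = [
        {"year": 2007, "carrier": "AA", "delay_min": 12},
        {"year": 2007, "carrier": "UA", "delay_min": 0},
        {"year": 2008, "carrier": "AA", "delay_min": 35},
    ]
    for path, rows in sorted(layout(flights).items()):
        print(path, len(rows))
```

Partition pruning is the payoff: a query that filters on `year = 2008` only has to read the matching directory, while bucketing keeps rows with the same carrier together within it.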

Yelp Dataset Processing Using Spark and Hive

Processing Yelp datasets involves working with JSON files and understanding the data schema of JSON. You will learn to read the data, transform it into a Hive table, perform normalization and denormalization of the dataset into the Hive tables, and learn how Spark can perform data ingestion. The project also involves using HDFS to save data and using different ways to integrate Hive and Spark. It will give you some experience writing customized queries in Hive and performing self joins among the tables.
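Normalizing nested JSON into Hive-style tables, and denormalizing it back, can be sketched in a few lines of Python. The record shape below (a `business_id`, `name`, `stars`, and a list of `categories`, one JSON object per line) is an assumption for illustration and may not match the exact Yelp dataset schema; in the project, Spark and Hive perform these steps at scale.

```python
import json
from typing import Dict, Iterable, List, Tuple


def normalize(json_lines: Iterable[str]) -> Tuple[List[tuple], List[tuple]]:
    """Split one-object-per-line JSON into a business table and a category table."""
    businesses, categories = [], []
    for line in json_lines:
        rec = json.loads(line)
        businesses.append((rec["business_id"], rec["name"], rec.get("stars")))
        for category in rec.get("categories") or []:
            categories.append((rec["business_id"], category))  # one row per (id, category)
    return businesses, categories


def denormalize(businesses: List[tuple], categories: List[tuple]) -> List[Dict[str, object]]:
    """Join the two tables back into one wide record per business."""
    cats_by_id: Dict[str, List[str]] = {}
    for business_id, category in categories:
        cats_by_id.setdefault(business_id, []).append(category)
    return [
        {"business_id": bid, "name": name, "stars": stars, "categories": cats_by_id.get(bid, [])}
        for bid, name, stars in businesses
    ]


if __name__ == "__main__":
    raw = [
        '{"business_id": "b1", "name": "Cafe One", "stars": 4.5, "categories": ["Cafes", "Bakeries"]}',
        '{"business_id": "b2", "name": "Taco Two", "stars": 4.0, "categories": null}',
    ]
    biz, cats = normalize(raw)
    print(cats)  # [('b1', 'Cafes'), ('b1', 'Bakeries')]
    print(denormalize(biz, cats)[1])  # {'business_id': 'b2', 'name': 'Taco Two', 'stars': 4.0, 'categories': []}
```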

Build a Data Pipeline Based on Messaging Using Spark and Hive

Through this PySpark project, you will learn how to simulate a real-world, message-based data pipeline. It involves using NiFi to parse complex JSON data into CSV format and store it in HDFS. The project demonstrates processing Kafka data with PySpark and writing the output to a Kafka topic; the pipeline then consumes the data from Kafka and stores the processed results in HDFS. The project uses the following tools: NiFi, PySpark, Hive, HDFS, Kafka, Airflow, Tableau, and AWS QuickSight.
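In the project, NiFi parses complex JSON into CSV. The Python sketch below shows only the underlying flattening logic: nested objects become underscore-joined column names, arrays are kept as JSON strings, and missing fields become empty cells. These conventions are assumptions for illustration, not NiFi's behavior.

```python
import csv
import io
import json
from typing import Dict, Iterable


def flatten(obj: dict, prefix: str = "") -> Dict[str, object]:
    """Flatten nested objects into underscore-joined keys; keep arrays as JSON strings."""
    flat: Dict[str, object] = {}
    for key, value in obj.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}_"))
        elif isinstance(value, list):
            flat[name] = json.dumps(value)
        else:
            flat[name] = value
    return flat


def json_lines_to_csv(lines: Iterable[str]) -> str:
    """Convert one-object-per-line JSON into CSV text with a header row."""
    rows = [flatten(json.loads(line)) for line in lines]
    header = sorted({column for row in rows for column in row})
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=header, lineterminator="\n")  # missing keys -> ""
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    events = [
        '{"id": 1, "user": {"name": "ann", "geo": {"city": "NYC"}}, "tags": ["a", "b"]}',
        '{"id": 2, "user": {"name": "bo"}}',
    ]
    print(json_lines_to_csv(events))
```

Building the header from the union of all flattened keys lets records with different shapes share one CSV file.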

Recommended Project Categories that Might Interest You

If these projects have inspired you to explore more solved end-to-end projects on cloud computing, streaming analytics, relational databases, and advanced analytics, check out the other categories in the ProjectPro repository.

Projects using Spark Streaming

Frequently Asked Questions on Hadoop Projects

What can Hadoop be used for?

Hadoop is widely used for its ability to store and process enormous amounts of structured, semi-structured, and unstructured data. It can ingest data from many sources in diverse formats, so it is often used in big data applications that integrate data from several sources, such as social media data and transaction data.

What are some good Hadoop big data projects?

-

Visualizing Website Clickstream Data with Apache Hadoop - In this project, you'll use Apache Hive to analyze website clickstream data and boost sales by improving every part of the customer experience on the site, from the very first click to the last.

-

SQL Analytics with Hive - In this Hadoop project, you will learn about the Hive features that allow you to execute analytical queries over massive datasets.

-

Analysis of Yelp Dataset using Hadoop Hive - You will implement Hive table operations, create Hive Buckets, and apply data engineering principles to the Yelp Dataset for processing, storage, and retrieval for this Hadoop project.
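Clickstream analysis usually starts by grouping each visitor's clicks into sessions. The Python sketch below starts a new session whenever a user is inactive for longer than a timeout. The 30-minute value, the `(user_id, ts_seconds, url)` shape, and the function name are assumptions; in a Hive-based pipeline, the same logic would typically be expressed with window functions such as `LAG`.

```python
from itertools import groupby
from typing import Iterable, List, Tuple

SESSION_GAP_S = 30 * 60  # assumed inactivity timeout: 30 minutes

Click = Tuple[str, int, str]  # (user_id, ts_seconds, url)


def sessionize(clicks: Iterable[Click], gap_s: int = SESSION_GAP_S) -> List[Tuple[str, List[str]]]:
    """Split each user's clicks into sessions separated by gaps longer than gap_s."""
    sessions: List[Tuple[str, List[str]]] = []
    ordered = sorted(clicks, key=lambda c: (c[0], c[1]))  # by user, then time
    for user, user_clicks in groupby(ordered, key=lambda c: c[0]):
        current: List[str] = []
        last_ts = None
        for _, ts, url in user_clicks:
            if last_ts is not None and ts - last_ts > gap_s:
                sessions.append((user, current))  # gap too long: close the session
                current = []
            current.append(url)
            last_ts = ts
        sessions.append((user, current))
    return sessions


if __name__ == "__main__":
    clicks = [("u1", 0, "/"), ("u1", 60, "/product"), ("u1", 4000, "/"), ("u2", 10, "/cart")]
    for user, pages in sessionize(clicks):
        print(user, pages)
```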
