Use the RACE dataset to extract a dominant topic from each document and perform LDA topic modeling in Python.

Understanding the problem statement

Business Context

With the advent of big data, machine learning, and natural language processing, it has become the need of the hour to extract the topic, or collection of topics, that a document is about. Imagine having to go through thousands of documents and sort them into 10 to 15 buckets. How tedious would that be?

Topic modeling removes the need to go through numerous documents manually: with the help of natural language processing and text mining, each document can be categorized under a certain topic.

The underlying expectation is that logically related words co-occur in the same document more frequently than words from different topics. For example, a document about space is more likely to contain words such as planet, satellite, universe, galaxy, and asteroid, whereas a document about wildlife is more likely to contain words such as ecosystem, species, animal, plant, and landscape. A topic is a cluster of words that frequently occur together. Topic modeling can connect words with similar meanings and distinguish between uses of words with multiple meanings.

A sentence or a document is made up of numerous topics and each topic is made up of numerous words.
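One common way to write this two-level structure is as a mixture. The notation here is an assumption introduced for illustration and does not appear in the project itself: $K$ is the number of topics, $d$ a document, $w$ a word, and $z$ the topic assigned to that word.

```latex
p(w \mid d) = \sum_{k=1}^{K} p(w \mid z = k)\, p(z = k \mid d)
```

The factor $p(z = k \mid d)$ describes how much of document $d$ belongs to topic $k$, and $p(w \mid z = k)$ describes how likely word $w$ is within topic $k$.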

Data Overview

The dataset has around 25,000 documents containing words of various parts of speech, such as nouns, adjectives, verbs, prepositions, and many more. Document length also varies widely, from a minimum of around 40 words to a maximum of around 500 words. The data is split so that 90% is used for training and the remaining 10% is held out to see how topics are predicted on unseen documents.
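A minimal sketch of such a 90/10 split, using only the Python standard library. The function name `split_documents`, the fixed seed, and the placeholder documents are assumptions made for illustration; the project's own splitting code may differ.

```python
import random


def split_documents(documents, train_fraction=0.9, seed=42):
    """Shuffle a copy of the documents and split it into train and test parts."""
    rng = random.Random(seed)          # fixed seed so the split is reproducible
    shuffled = list(documents)         # copy, so the caller's list is untouched
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Placeholder documents standing in for the real dataset (hypothetical data).
documents = [f"document {i}" for i in range(25000)]
train_docs, test_docs = split_documents(documents)
print(len(train_docs), len(test_docs))
```

Shuffling before cutting avoids a split that simply follows the original file order.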

Objective

To extract or identify a dominant topic from each document and perform topic modeling.
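As a rough illustration of what "dominant topic" means here: once a topic model has produced a weight per topic for each document, the dominant topic is the one with the largest weight. The helper name `dominant_topic` and the weights below are made up for this sketch and are not output from the project.

```python
def dominant_topic(topic_weights):
    """Return (topic_index, weight) for the topic with the largest weight."""
    best = max(range(len(topic_weights)), key=lambda k: topic_weights[k])
    return best, topic_weights[best]


# Hypothetical per-document topic weights (each row sums to 1).
doc_topic_weights = [
    [0.70, 0.20, 0.10],
    [0.10, 0.15, 0.75],
]
dominant = [dominant_topic(weights) for weights in doc_topic_weights]
print(dominant)
```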

Tools and Libraries

We will use Python for all operations.

Main Libraries used are

Approach

Topic EDA

Documents Pre-processing

Topic Modelling algorithms
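The pre-processing and modeling steps above can be sketched end to end with the standard library alone. This is an illustrative assumption, not the project's code: the project most likely relies on dedicated NLP libraries, whereas this sketch uses a tiny hypothetical stop-word list and a small collapsed Gibbs sampler for LDA. The names `preprocess` and `lda_gibbs`, the hyperparameters `alpha` and `beta`, and the toy sentences are all invented for the example.

```python
import random
import re

# Hypothetical stop-word list, for illustration only.
STOP_WORDS = {"a", "an", "the", "is", "are", "in", "of", "and", "to", "on"}


def preprocess(text, min_length=3):
    """Lowercase, keep alphabetic tokens, drop stop words and short tokens."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS and len(t) >= min_length]


def lda_gibbs(docs, num_topics, iterations=200, alpha=0.1, beta=0.01, seed=0):
    """Fit LDA by collapsed Gibbs sampling; docs is a list of token lists."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V = len(vocab)
    doc_topic = [[0] * num_topics for _ in docs]        # tokens per (doc, topic)
    topic_word = [[0] * V for _ in range(num_topics)]   # tokens per (topic, word)
    topic_total = [0] * num_topics                      # tokens per topic
    assignments = []

    # Start from a random topic assignment for every token.
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            k = rng.randrange(num_topics)
            z_doc.append(k)
            doc_topic[d][k] += 1
            topic_word[k][word_id[w]] += 1
            topic_total[k] += 1
        assignments.append(z_doc)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v, k = word_id[w], assignments[d][i]
                # Remove the token's current assignment from the counts.
                doc_topic[d][k] -= 1
                topic_word[k][v] -= 1
                topic_total[k] -= 1
                # Resample its topic from the conditional distribution.
                weights = [
                    (doc_topic[d][t] + alpha)
                    * (topic_word[t][v] + beta) / (topic_total[t] + V * beta)
                    for t in range(num_topics)
                ]
                k = rng.choices(range(num_topics), weights=weights)[0]
                assignments[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][v] += 1
                topic_total[k] += 1

    # Smoothed estimates of document-topic and topic-word distributions.
    theta = [[(c + alpha) / (len(doc) + num_topics * alpha) for c in counts]
             for doc, counts in zip(docs, doc_topic)]
    phi = [[(c + beta) / (topic_total[k] + V * beta) for c in topic_word[k]]
           for k in range(num_topics)]
    return theta, phi, vocab


# Toy sentences built from the example words used earlier (hypothetical data).
raw_docs = [
    "The planet and the satellite are in the galaxy.",
    "An asteroid passed the planet on its way through the universe.",
    "The ecosystem is home to every animal and plant species.",
    "Animal species shape the landscape of the ecosystem.",
]
docs = [preprocess(text) for text in raw_docs]
theta, phi, vocab = lda_gibbs(docs, num_topics=2)
for row in theta:
    print([round(p, 2) for p in row])
```

Each row of `theta` is a document's topic mixture and each row of `phi` is a topic's word distribution. On a corpus this small the resulting topics depend heavily on the seed, so the output is only meant to show the shape of the result.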

Code Overview

Recommended

Projects

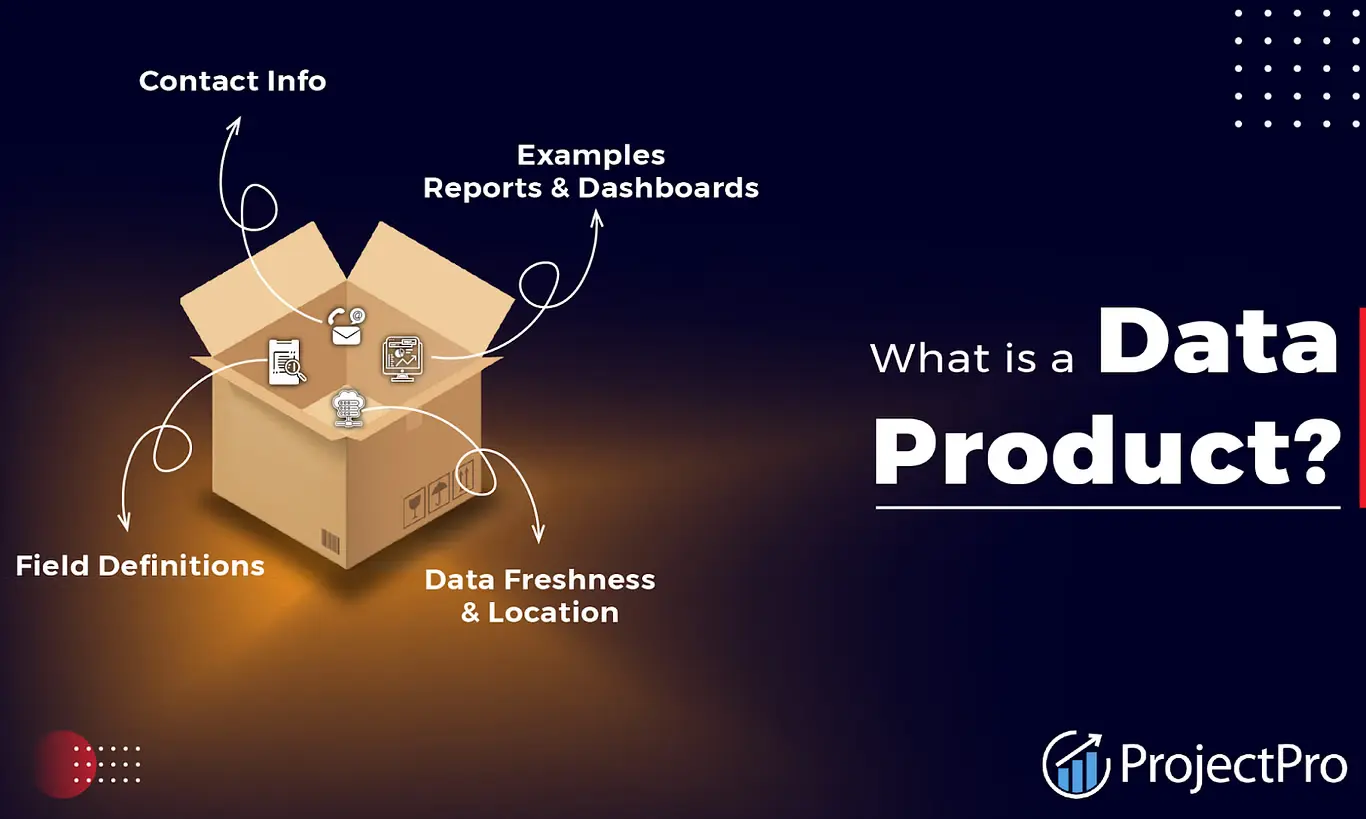

Data Products-Your Blueprint to Maximizing ROI

Explore ProjectPro's Blueprint on Data Products for Maximizing ROI to Transform your Business Strategy.

Best MLOps Certifications To Boost Your Career In 2024

Chart your course to success with our ultimate MLOps certification guide. Explore the best options and pave the way for a thriving MLOps career. | ProjectPro

Adam Optimizer Simplified for Beginners in ML

Unlock the power of Adam Optimizer: from theory, tutorials, to navigating limitations.

Get a free demo