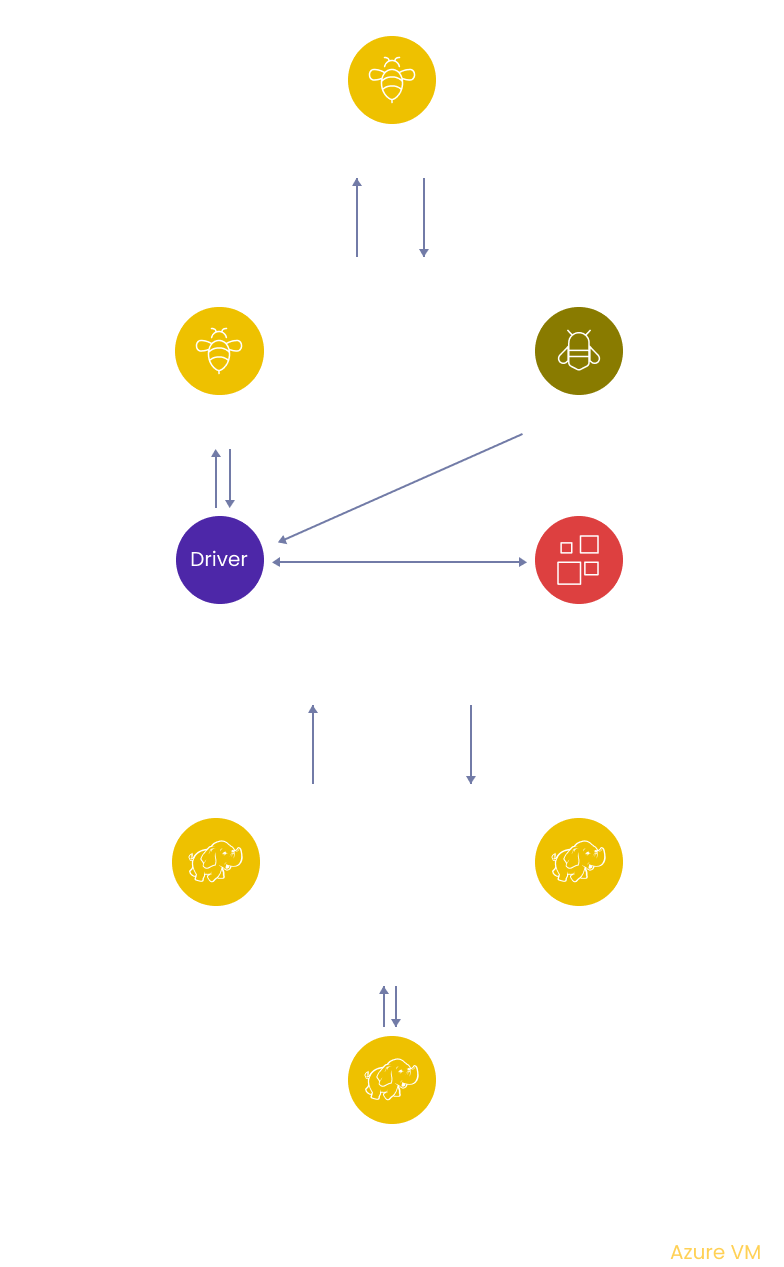

Hive Practice Example - Explore how to use Hive efficiently for data transformation and processing in this big data project using an Azure VM.

Big Data refers to massive volumes of data, much of it semi-structured or unstructured, generated by a wide variety of sources ranging from social networks to scientific computing applications. Companies can collect enormous amounts of data, but they must ensure that it is clean and usable by the time it reaches data scientists and analysts. Data engineering is the discipline of designing and building systems that acquire, store, and analyze such large volumes of data. It is a broad field with applications in nearly every industry.

Apache Hadoop is a Big Data framework for the distributed processing of very large datasets across clusters of computers using simple programming models. It is designed to scale from a single server to thousands of machines, each offering local computation and storage.
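
Hadoop's original programming model is MapReduce: a map step emits key/value pairs, the framework shuffles all pairs with the same key to the same reducer, and a reduce step aggregates them. The sketch below simulates that flow in plain Python on a single machine, counting flights per origin airport. It does not use any Hadoop API; the record layout (`flight_num,origin,destination`) and the sample records are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(records: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Map: emit (origin, 1) for each record laid out as flight_num,origin,destination."""
    for line in records:
        _flight_num, origin, _destination = line.split(",")
        yield origin, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all values that share a key, as the framework does between map and reduce."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: Dict[str, List[int]]) -> Dict[str, int]:
    """Reduce: aggregate the grouped values for each key."""
    return {key: sum(values) for key, values in groups.items()}


def run_job(records: List[str], num_splits: int = 2) -> Dict[str, int]:
    # Split the input; on a real cluster each split would be handled by a mapper on a different node.
    splits = [records[i::num_splits] for i in range(num_splits)]
    mapped = [pair for split in splits for pair in map_phase(split)]
    return reduce_phase(shuffle_phase(mapped))


if __name__ == "__main__":
    sample = ["100,ORD,LAX", "101,ORD,JFK", "102,SFO,ORD"]
    print(run_job(sample))  # {'ORD': 2, 'SFO': 1}
```

Because the reduce step only sees grouped values, adding more splits (more "nodes") changes how the work is divided but not the final counts.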

Apache Hive is a fault-tolerant, distributed data warehouse system for large-scale analytics. It lets users read, write, and manage petabytes of data using SQL. Because Hive is built on top of Apache Hadoop, it integrates tightly with Hadoop's storage and processing layers. Its distinguishing feature is a SQL-like query language (HiveQL) whose queries are executed on Apache Tez, MapReduce, or Spark.
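
Hive applies a table's schema when data is read ("schema on read") rather than when it is loaded, and with its default delimited-text format a value that cannot be parsed as the declared column type is typically returned as NULL. The following Python sketch imitates that behaviour for a hypothetical `flights` table; the column list and the DDL shown in the comment are assumptions, not the project's actual table definition.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical schema, loosely mirroring a Hive DDL such as:
#   CREATE TABLE flights (flight_num INT, origin STRING, destination STRING, distance INT)
#   ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';
SCHEMA: List[Tuple[str, Callable[[str], object]]] = [
    ("flight_num", int),
    ("origin", str),
    ("destination", str),
    ("distance", int),
]


def read_row(line: str, delimiter: str = ",") -> Dict[str, Optional[object]]:
    """Apply SCHEMA to one raw line at read time; the stored text itself is never modified.

    Fields beyond the schema are ignored, and missing or unparsable fields become None (NULL).
    """
    fields = line.split(delimiter)
    row: Dict[str, Optional[object]] = {}
    for i, (name, cast) in enumerate(SCHEMA):
        if i >= len(fields):
            row[name] = None  # missing trailing field -> NULL
            continue
        try:
            row[name] = cast(fields[i])
        except ValueError:
            row[name] = None  # value does not fit the declared type -> NULL
    return row


if __name__ == "__main__":
    print(read_row("100,ORD,LAX,1744"))
    print(read_row("101,ORD,JFK,NA"))  # distance comes back as None
```

The point of the design is that loading is cheap (the raw file is just copied into storage) and type problems surface only at query time, as NULLs rather than load failures.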

A data pipeline moves data from one system to another, optionally transforming it along the way. Data can be processed in batches or in real time (streaming). A pipeline covers every stage: acquiring data through various methods, storing the raw data, cleaning, validating, and transforming it into a queryable format, reporting KPIs, and orchestrating the whole process.
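
The stages listed above can be sketched as a tiny batch pipeline. This is a minimal in-memory illustration, assuming a CSV with hypothetical columns `flight_num,origin,destination,distance` and made-up sample rows; in the project itself, storage and transformation would happen in HDFS and Hive rather than in Python lists.

```python
import csv
import io
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

# Made-up sample input; the column names are assumptions for this sketch.
RAW_CSV = """flight_num,origin,destination,distance
100,ORD,LAX,1744
101,ORD,JFK,740
102,SFO,ORD,
103,SFO,ORD,1846
"""

Row = Dict[str, object]


def ingest(text: str) -> List[Row]:
    """Acquire: parse raw CSV text into records."""
    return list(csv.DictReader(io.StringIO(text)))


def validate(rows: List[Row]) -> Tuple[List[Row], List[Row]]:
    """Clean/validate: keep rows with an integer distance, set the rest aside."""
    clean: List[Row] = []
    rejected: List[Row] = []
    for row in rows:
        try:
            # Build a new dict so the raw record is left untouched.
            clean.append({**row, "distance": int(row["distance"])})
        except (TypeError, ValueError):
            rejected.append(row)
    return clean, rejected


def average_distance_by_origin(rows: List[Row]) -> Dict[str, float]:
    """Transform + KPI: average flight distance per origin airport."""
    distances: Dict[str, List[int]] = defaultdict(list)
    for row in rows:
        distances[row["origin"]].append(row["distance"])
    return {origin: mean(values) for origin, values in distances.items()}


def run_pipeline(text: str, raw_store: List[Row]) -> Tuple[Dict[str, float], List[Row]]:
    rows = ingest(text)
    raw_store.extend(rows)  # store: keep a copy of the raw records before any cleaning
    clean, rejected = validate(rows)
    return average_distance_by_origin(clean), rejected


if __name__ == "__main__":
    raw_store: List[Row] = []
    kpis, rejected = run_pipeline(RAW_CSV, raw_store)
    print(kpis)
    print(f"{len(rejected)} row(s) rejected")
```

Keeping rejected rows instead of silently dropping them makes the validation step auditable, which matters once the same pipeline runs on every new batch.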

In this project, we will use the Airlines dataset to demonstrate the challenges of working with massive amounts of data and how various Hive components can be used to tackle them. The files used in this project, along with a few of their fields, are:

airlines.csv - IATA_code, airport_name, city, state, country

carrier.csv - code, description

plane-data.csv - tail_number, type, manufacturer, model, engine_type

Flights data (yearly) - flight_num, departure, arrival, origin, destination, distance
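
As an example of how these files relate, the flights data can be joined to airlines.csv on `origin = IATA_code`. In HiveQL that might look like `SELECT a.state, COUNT(*) FROM flights f JOIN airlines a ON f.origin = a.IATA_code GROUP BY a.state;` (the table names are hypothetical). The Python sketch below performs the same inner join and aggregation as a hash join, which is roughly what Hive's map-side join does when one table is small enough to fit in memory. The sample rows are illustrative only.

```python
from collections import Counter
from typing import Dict, List

# Illustrative rows using a few of the fields listed above.
AIRLINES = [
    {"IATA_code": "ORD", "airport_name": "Chicago O'Hare International", "city": "Chicago", "state": "IL", "country": "USA"},
    {"IATA_code": "SFO", "airport_name": "San Francisco International", "city": "San Francisco", "state": "CA", "country": "USA"},
]
FLIGHTS = [
    {"flight_num": "100", "origin": "ORD", "destination": "LAX", "distance": 1744},
    {"flight_num": "101", "origin": "ORD", "destination": "JFK", "distance": 740},
    {"flight_num": "102", "origin": "SFO", "destination": "ORD", "distance": 1846},
    {"flight_num": "103", "origin": "XXX", "destination": "ORD", "distance": 500},  # unknown origin
]


def flights_per_origin_state(flights: List[dict], airports: List[dict]) -> Dict[str, int]:
    # Build phase: hash the small table in memory, keyed by the join column.
    state_by_code = {a["IATA_code"]: a["state"] for a in airports}
    counts: Counter = Counter()
    # Probe phase: stream the large table and look up each flight's origin.
    for f in flights:
        state = state_by_code.get(f["origin"])
        if state is not None:  # inner join: flights with no matching airport are dropped
            counts[state] += 1
    return dict(counts)


if __name__ == "__main__":
    print(flights_per_origin_state(FLIGHTS, AIRLINES))
```

Hashing the smaller table avoids shuffling the much larger yearly flights data, which is why this strategy pays off when one side of the join is small.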

➔ Language: HQL

➔ Services: Azure VM, Hive, Hadoop
