In this big data project, you will learn how to process data using Spark and Hive as well as perform queries on Hive tables.

Business Overview:

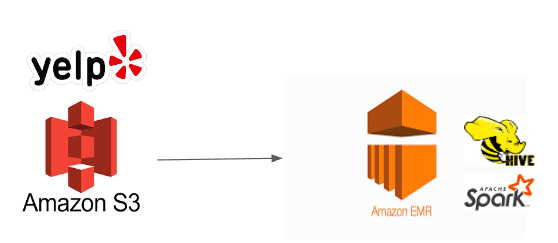

In this project, we will process and analyze the Yelp dataset using Spark and Hive. We will use Amazon EMR, AWS's managed alternative to running your own Hadoop cluster, together with Amazon S3, where our data is stored.

Yelp is an American multinational company headquartered in San Francisco, California, that runs a community review site. It publishes crowd-sourced reviews of local businesses and operates the online reservation service Yelp Reservations. Yelp has made a portion of its data publicly available through the Yelp Dataset Challenge, which invites anyone to research or analyze the data to uncover the insights it contains. Because the full dataset is large, this project works with a subset of it: only the User and Review datasets are used.
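To make the EMR and S3 setup more concrete, the Scala sketch below builds a Hive-enabled `SparkSession` and loads the User and Review files directly from S3. The bucket `my-yelp-bucket`, the object keys, and the `YelpLoad` object are hypothetical placeholders. The sketch also assumes the files are the usual Yelp JSON Lines exports, with one JSON object per line.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

// Minimal sketch: load the Yelp User and Review files from S3 on an EMR cluster.
// The bucket and object keys below are hypothetical placeholders.
object YelpLoad {
  val UserPath   = "s3://my-yelp-bucket/yelp/yelp_academic_dataset_user.json"
  val ReviewPath = "s3://my-yelp-bucket/yelp/yelp_academic_dataset_review.json"

  // spark.read.json expects JSON Lines (one object per line) by default,
  // which is the layout assumed for the Yelp files.
  def load(spark: SparkSession, path: String): DataFrame =
    spark.read.json(path)

  def main(args: Array[String]): Unit = {
    // enableHiveSupport() connects Spark SQL to the cluster's Hive metastore.
    val spark = SparkSession.builder()
      .appName("yelp-spark-hive")
      .enableHiveSupport()
      .getOrCreate()

    // On EMR, s3:// paths are served by EMRFS, so Spark can read them directly.
    val users   = load(spark, UserPath)
    val reviews = load(spark, ReviewPath)

    users.printSchema()
    println(s"users: ${users.count()}, reviews: ${reviews.count()}")
    spark.stop()
  }
}
```

Running `main` requires the cluster's Hive libraries. The `load` helper works with any path Spark can read, including a local file.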

Tech Stack:

Language: Scala (with Apache Spark)

Services: Amazon EMR, Hive, HDFS, AWS S3

Approach:

Create an S3 bucket and upload the data files

Create a key pair in EC2 (used to connect to the cluster over SSH)

Create an EMR cluster with primary (master) and core nodes, with the Spark and Hive applications installed

Basic DataFrame operations, such as reading from and writing to Hive tables and HDFS locations

Hive integration from Spark

Normalizing data using RDD operations

Normalizing data using DataFrame operations
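The DataFrame and Hive steps in the approach can be sketched roughly as follows. This is a minimal illustration and not the project's actual solution. It assumes a Hive-enabled `SparkSession` on the EMR cluster. The HDFS path, the `yelp.users` table, the `YelpHiveOps` object, and the `user_id`/`name`/`review_count` columns are all assumptions chosen for the example.

```scala
import org.apache.spark.sql.{DataFrame, SaveMode, SparkSession}

// Minimal sketch of DataFrame read/write and Hive integration from Spark.
// Paths, database, table, and column names are hypothetical.
object YelpHiveOps {
  // Write a DataFrame as Parquet files to an HDFS directory, replacing earlier output.
  def writeToHdfs(df: DataFrame, path: String): Unit =
    df.write.mode(SaveMode.Overwrite).parquet(path)

  // Read the Parquet files back from the same location.
  def readFromHdfs(spark: SparkSession, path: String): DataFrame =
    spark.read.parquet(path)

  // Save the DataFrame as a managed table in the session's catalog
  // (the Hive metastore when Hive support is enabled).
  def saveAsHiveTable(df: DataFrame, table: String): Unit =
    df.write.mode(SaveMode.Overwrite).saveAsTable(table)

  // Query the table with SQL through Spark.
  def topReviewers(spark: SparkSession, table: String, n: Int): DataFrame =
    spark.sql(s"SELECT user_id, name, review_count FROM $table ORDER BY review_count DESC LIMIT $n")

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("yelp-hive-ops").enableHiveSupport().getOrCreate()
    // Hypothetical S3 location of the Yelp user file.
    val users = spark.read.json("s3://my-yelp-bucket/yelp/yelp_academic_dataset_user.json")

    spark.sql("CREATE DATABASE IF NOT EXISTS yelp")
    writeToHdfs(users, "hdfs:///user/hadoop/yelp/users_parquet")
    val reloaded = readFromHdfs(spark, "hdfs:///user/hadoop/yelp/users_parquet")
    saveAsHiveTable(reloaded, "yelp.users")
    topReviewers(spark, "yelp.users", 10).show()
    spark.stop()
  }
}
```

Writing Parquet to an HDFS path keeps a plain file copy of the data. `saveAsTable` goes further and registers the data in the metastore, so later `spark.sql` queries can refer to it by table name.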

Note: You can download the dataset from this link.
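The approach does not define what "normalizing" covers, so the following is an assumed example. In the Yelp User data, the `friends` field stores a comma-separated list of user IDs in a single string. Normalizing that field would mean producing one `(user_id, friend_id)` row per friend. The hypothetical `YelpNormalize` object below does this twice so the two approaches can be compared: once with RDD operations (`flatMap`) and once with DataFrame functions (`split` plus `explode`).

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, explode, split, trim}

// Minimal sketch, assuming "normalizing" means turning the comma-separated
// `friends` string of each Yelp user into one (user_id, friend_id) row per friend.
object YelpNormalize {
  // Assumed placeholder value for users without friends.
  private val NoFriends = "None"

  // RDD approach: flatMap each (user_id, friends) row into several pairs.
  def friendsViaRdd(users: DataFrame): RDD[(String, String)] =
    users.select("user_id", "friends").rdd.flatMap { row =>
      val userId  = row.getString(0)
      val friends = Option(row.getString(1)).getOrElse("") // guard against null
      friends.split(",").toSeq
        .map(_.trim)
        .filter(f => f.nonEmpty && f != NoFriends)
        .map(f => (userId, f))
    }

  // DataFrame approach: split the string into an array, then explode it into rows.
  def friendsViaDataFrame(users: DataFrame): DataFrame =
    users
      .select(col("user_id"), explode(split(col("friends"), ",")).as("friend_id"))
      .withColumn("friend_id", trim(col("friend_id")))
      .filter(col("friend_id") =!= "" && col("friend_id") =!= NoFriends)

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("yelp-normalize").enableHiveSupport().getOrCreate()
    import spark.implicits._
    // Hypothetical S3 location of the Yelp user file.
    val users = spark.read.json("s3://my-yelp-bucket/yelp/yelp_academic_dataset_user.json")
    friendsViaRdd(users).toDF("user_id", "friend_id").show(5)
    friendsViaDataFrame(users).show(5)
    spark.stop()
  }
}
```

Both functions return the same pairs. The DataFrame version is usually the better default, because Spark's Catalyst optimizer can plan column expressions, whereas the RDD lambda is opaque to it.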

Architecture Diagram:
