How to Handle Outliers in Python?

Handling outliers in Python - Learn effective strategies to identify, analyze, and mitigate outliers in your data analysis projects. | ProjectPro

Outliers are data points that deviate significantly from the rest of a dataset. They can adversely affect statistical analysis and machine learning models by skewing results and reducing accuracy. Consider a simple dataset of the monthly incomes of a company's employees. Most employees earn between $2,000 and $8,000 per month. However, because of an anomaly, one employee's income is recorded as $200,000 for a particular month, far higher than any other value.

If this outlier is not handled correctly, it can distort the overall understanding of the income distribution within the company. For instance, it might falsely inflate the average income, leading to inaccurate budget planning or salary adjustments within the organization.

By detecting and handling this outlier appropriately, for example by removing it from the dataset or replacing it with a more typical income value, data scientists can base their analysis and subsequent decisions on a more accurate picture of how income is distributed among employees. Handling outliers is therefore crucial for robust data analysis and model building. This tutorial explores various techniques for identifying and handling outliers in Python.
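Here is a small sketch that makes the effect concrete. It uses hypothetical figures: 13 monthly salaries spaced evenly from $2,000 to $8,000, plus the single $200,000 entry. It compares the mean and the median before and after that entry is added.

```python
import pandas as pd

# Hypothetical monthly incomes: 13 salaries from $2,000 to $8,000 in $500 steps
typical = pd.Series(range(2000, 8001, 500))

# The same data with one anomalous $200,000 record appended
with_outlier = pd.concat([typical, pd.Series([200000])], ignore_index=True)

print(f"Mean without outlier:   {typical.mean():,.0f}")         # 5,000
print(f"Mean with outlier:      {with_outlier.mean():,.0f}")    # 18,929
print(f"Median without outlier: {typical.median():,.0f}")       # 5,000
print(f"Median with outlier:    {with_outlier.median():,.0f}")  # 5,250
```

One record nearly quadruples the mean, while the median barely moves. For this reason, the median is often preferred when summarizing skewed data such as incomes.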


Handling Outliers in Machine Learning in Python

Handling outliers in machine learning with Python involves several steps:

- Detecting outliers: Outliers can be detected with descriptive statistics (mean, median, quartiles) and graphical techniques (box plots, histograms), which reveal values far from the data's central tendency. Standardized scores (z-scores), formal outlier tests such as Grubbs' test, and machine learning algorithms (clustering, Isolation Forest) can also be used. The right choice depends on the data's characteristics.

- Removing outliers: One approach is to remove outliers from the dataset by applying a threshold or rule. Examples include eliminating values more than a certain number of standard deviations from the mean, or values lying more than 1.5 times the interquartile range below the first quartile or above the third quartile. However, it is crucial to confirm that the removed points are erroneous or irrelevant. Otherwise, removing them can distort the interpretation or outcome of the analysis or modeling task.

- Replacing outliers: Another strategy is to replace outliers with more reasonable values. Simple imputation substitutes a central or typical value such as the mean, median, mode, or a constant. Alternatively, nearest-neighbor methods, linear regression, or other machine learning models can predict a replacement value from the other features or variables in the dataset.

- Transforming outliers: Mathematical transformations change the scale or shape of the data. Logarithmic, square-root, and inverse transformations can reduce skewness and bring the distribution closer to normal, which pulls extreme values closer to the rest. Standardization, normalization, and other scaling methods change the range or magnitude of the data, which makes features comparable. However, a linear rescaling on its own leaves outliers just as far from the rest of the data in relative terms.
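The three handling strategies can be compared side by side on a single feature. The sketch below rests on a few assumptions: the salary data is hypothetical, outliers are identified with the 1.5 × IQR rule described in the next section, and all variable names are illustrative. Capping (clipping values to the detection bounds) is shown as one form of replacement.

```python
import numpy as np
import pandas as pd

# Hypothetical monthly salaries with one anomalous value
s = pd.Series(list(range(2000, 8001, 500)) + [200000])

# Flag outliers with the 1.5 * IQR rule (one possible detection rule)
q1, q3 = s.quantile(0.25), s.quantile(0.75)
iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
is_outlier = (s < low) | (s > high)

# Removing: keep only the values that are not flagged
removed = s[~is_outlier]

# Replacing (imputation): substitute the median of the non-outlier values
median_imputed = s.mask(is_outlier, s[~is_outlier].median())

# Replacing (capping): clip values to the IQR bounds instead of dropping them
capped = s.clip(lower=low, upper=high)

# Transforming: the natural log compresses large values (200,000 -> about 12.2)
logged = np.log(s)

print(len(removed), median_imputed.iloc[-1], capped.max(), round(logged.iloc[-1], 2))
```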

Why Remove Outliers in Python Pandas?

Outliers can significantly affect statistical measures and machine learning models, leading to misleading conclusions and poor model performance. Removing outliers, particularly those caused by errors, helps analysts improve the quality of their data, make their models more robust, and interpret trends and patterns more accurately. It also helps meet the assumptions of many statistical techniques and makes the insights derived from the data more reliable and trustworthy.

What are the Methods to Handle Outliers?

Outliers, which are observations significantly different from the rest of the data, can skew results and distort interpretations. The following methods are commonly used to detect and handle them:

- Percentile Method: The percentile method is a straightforward approach to outlier detection that compares each data point with the dataset's own distribution. Upper and lower bounds are defined by chosen percentiles, such as the 5th and 95th, and observations beyond these bounds are treated as outliers. The method always flags a fixed share of the data (about 10% with these bounds), whether or not those values are genuinely unusual. The cut-offs should therefore be chosen with the data in mind.

-

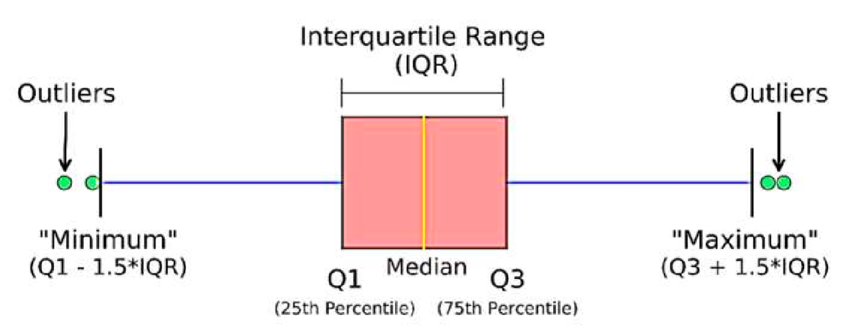

Interquartile Range (IQR) Method: The Interquartile Range (IQR) method provides a robust way to detect outliers, especially in datasets with non-normal distributions. By calculating the difference between the upper and lower quartiles (Q3 and Q1) and defining bounds as a multiple of this range (commonly 1.5 times the IQR), outliers can be identified as data points lying beyond these bounds. This method is advantageous as it is not sensitive to extreme values and can effectively capture outliers in skewed datasets.

Source: ResearchGate

- Z-Score Method: The z-score method measures how many standard deviations each data point lies from the mean: z = (x - mean) / standard deviation. Points whose absolute z-score exceeds a threshold (typically 3) are treated as outliers. This method suits approximately normally distributed data. Note that the mean and standard deviation are themselves inflated by outliers, so in small samples an extreme value may never reach the threshold.

Source: Towards Data Science

- Boxplot Method: A boxplot visualizes the data distribution, which makes outliers easy to spot. It shows a dataset's median and quartiles as a box, with whiskers extending to the most extreme points within a set distance of the box (commonly 1.5 × IQR). Values beyond the whiskers are drawn as individual points. The method is quick and intuitive for spotting extreme values and deciding which ones deserve further investigation into their causes.

Source: Swampthingecology.org

- Machine Learning Algorithms: Some machine learning algorithms are less sensitive to outliers than others. Tree-based ensembles such as Random Forest split features on thresholds, so an extreme feature value has limited influence on the splits. Soft-margin Support Vector Machines limit how much any single training point can affect the decision boundary. Anomaly-detection algorithms such as Isolation Forest can also flag outliers automatically. These options are particularly useful for large datasets, where manual detection and handling of outliers may be impractical.
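The percentile, IQR, and z-score rules above can be sketched with pandas as follows. The salary data and the function names (`percentile_outliers`, `iqr_outliers`, `zscore_outliers`) are hypothetical, and the thresholds are the common defaults mentioned above. Matplotlib's boxplot places its whiskers at 1.5 × IQR by default (`whis=1.5`), so the points it draws individually should match what `iqr_outliers` flags.

```python
import pandas as pd

# Hypothetical monthly salaries: 13 typical values plus one extreme entry
salaries = pd.Series(list(range(2000, 8001, 500)) + [200000], name="salary")


def percentile_outliers(s: pd.Series, lower_q: float = 0.05, upper_q: float = 0.95) -> pd.Series:
    """Flag values below the lower_q or above the upper_q quantile."""
    low, high = s.quantile(lower_q), s.quantile(upper_q)
    return (s < low) | (s > high)


def iqr_outliers(s: pd.Series, k: float = 1.5) -> pd.Series:
    """Flag values below Q1 - k*IQR or above Q3 + k*IQR."""
    q1, q3 = s.quantile(0.25), s.quantile(0.75)
    iqr = q3 - q1
    return (s < q1 - k * iqr) | (s > q3 + k * iqr)


def zscore_outliers(s: pd.Series, threshold: float = 3.0) -> pd.Series:
    """Flag values more than `threshold` standard deviations from the mean."""
    z = (s - s.mean()) / s.std()  # pandas uses the sample standard deviation (ddof=1)
    return z.abs() > threshold


print("Percentile:", salaries[percentile_outliers(salaries)].tolist())  # [2000, 200000]
print("IQR:       ", salaries[iqr_outliers(salaries)].tolist())         # [200000]
print("Z-score:   ", salaries[zscore_outliers(salaries)].tolist())      # [200000]
```

On this data, the percentile rule also flags the legitimate $2,000 salary, because it always marks a fixed share of observations. The z-score rule works here only because there are 14 values. With the sample standard deviation, no point can have |z| larger than (n - 1)/√n. On the four-row farm dataset used later in this tutorial, that bound is 1.5, so a threshold of 3 can never be reached.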

How to Deal with Outliers in Data Analysis: An Example

Check out the following Python source code to see the complete process of handling outliers on an example dataset.

Here are the steps involved in this process:

1. Import the pandas and numpy libraries.

2. Create a DataFrame using pandas.

3. Handle outliers by dropping them.

4. Handle outliers using boolean marking.

5. Handle outliers by rescaling features.

Step 1 - Import the Libraries

```python
import numpy as np
import pandas as pd
```

We have imported numpy and pandas. These two modules will be required.

Step 2 - Creating a DataFrame

We first create an empty DataFrame named farm and then add features and their values to it. The value 100 in the Rooms feature is clearly an outlier, and the same row is also extreme in Price and Square_Feet.

```python
# Start with an empty DataFrame and add one column per feature
farm = pd.DataFrame()
farm['Price'] = [632541, 425618, 356471, 7412512]
farm['Rooms'] = [2, 5, 3, 100]
farm['Square_Feet'] = [1600, 2850, 1780, 90000]
print(farm)
```

Step 3 - Handling the Outliers

There are several ways to handle outliers in Python. Three of them are shown below.

Method 1 - Handling Outliers by Dropping Them

The simplest way to deal with outliers is to drop them by applying a condition to a feature.

```python
# Keep only rows with fewer than 20 rooms; the row with 100 rooms is dropped
h = farm[farm['Rooms'] < 20]
print(h)
```

Here, we apply a condition to the Rooms feature so that only rows with fewer than 20 rooms are kept.
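The cut-off of 20 above is chosen by looking at the data. As a sketch of a data-driven alternative, the 1.5 × IQR rule can compute separate bounds for each column and drop any row that falls outside them in at least one column. With only four rows the quartiles are rough estimates, so treat this as an illustration rather than a recommended setting.

```python
import pandas as pd

farm = pd.DataFrame({
    'Price': [632541, 425618, 356471, 7412512],
    'Rooms': [2, 5, 3, 100],
    'Square_Feet': [1600, 2850, 1780, 90000],
})

# Per-column quartiles and IQR (each result is a Series indexed by column name)
q1 = farm.quantile(0.25)
q3 = farm.quantile(0.75)
iqr = q3 - q1

# Comparing a DataFrame with a Series aligns the Series on the columns,
# so every column is checked against its own bounds
outside = (farm < q1 - 1.5 * iqr) | (farm > q3 + 1.5 * iqr)

# Drop rows that are outside the bounds in any column
h = farm[~outside.any(axis=1)]
print(h)
```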

Method 2 - Handling Outliers Using Boolean Marking

Instead of deleting outliers, we can mark them and then leave the marked rows out when training the model. Here, np.where marks each row based on a condition: rows with 20 or more rooms get 1 (outlier) and all other rows get 0.

```python
# 1 marks an outlier (20 or more rooms), 0 marks a regular row
farm['Outlier'] = np.where(farm['Rooms'] < 20, 0, 1)
print(farm)
```
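The np.where call above stores the flag as 1 and 0. As a sketch, the same flag can also be kept as a true boolean column and used directly as a mask to leave the marked rows out of a training set. The name `train` and the column selection are only illustrative.

```python
import pandas as pd

farm = pd.DataFrame({
    'Price': [632541, 425618, 356471, 7412512],
    'Rooms': [2, 5, 3, 100],
    'Square_Feet': [1600, 2850, 1780, 90000],
})

# True for rows with 20 or more rooms, False otherwise
farm['Outlier'] = farm['Rooms'] >= 20

# ~ inverts the mask, so only unflagged rows are kept for training
train = farm.loc[~farm['Outlier'], ['Price', 'Rooms', 'Square_Feet']]

print(farm['Outlier'].sum(), "row(s) flagged")
print(train)
```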


Method 3 - Handling Outliers Using Feature Rescaling

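When only a few data points are available, dropping or labeling outliers may not be practical. In such situations, a more viable approach is to transform the feature so that extreme values are compressed into a usable range. Here, taking the natural logarithm of Square_Feet turns 90,000 into about 11.4, while the other rows lie between about 7.4 and 8.0.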

```python
# Natural log compresses large values: 90000 -> about 11.41, 1600 -> about 7.38
farm['Log_Of_Square_Feet'] = np.log(farm['Square_Feet'])
print(farm)
```
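Note that np.log is undefined at zero: it returns -inf with a RuntimeWarning, so it cannot be applied directly to a feature that contains zeros. A common alternative is np.log1p, which computes log(1 + x), and np.expm1 reverses it. The Garden_Sq_Ft feature below is hypothetical and exists only to include a zero.

```python
import numpy as np
import pandas as pd

# Hypothetical feature that contains a zero (e.g. a property with no garden)
garden_sq_ft = pd.Series([0, 120, 300, 15000], name="Garden_Sq_Ft")

# log1p computes log(1 + x), so 0 maps to 0 instead of -inf
logged = np.log1p(garden_sq_ft)

# expm1 inverts log1p, e.g. to turn transformed values back into square feet
restored = np.expm1(logged)

print(pd.DataFrame({"raw": garden_sq_ft, "log1p": logged.round(3), "restored": restored}))
```

For values in the thousands, as in Square_Feet, log1p and log differ only in the third or fourth decimal place, so the choice mainly matters when zeros or very small values occur.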

The outputs of the four print statements, in order, are:

```
     Price  Rooms  Square_Feet
0   632541      2         1600
1   425618      5         2850
2   356471      3         1780
3  7412512    100        90000

    Price  Rooms  Square_Feet
0  632541      2         1600
1  425618      5         2850
2  356471      3         1780

     Price  Rooms  Square_Feet  Outlier
0   632541      2         1600        0
1   425618      5         2850        0
2   356471      3         1780        0
3  7412512    100        90000        1

     Price  Rooms  Square_Feet  Outlier  Log_Of_Square_Feet
0   632541      2         1600        0            7.377759
1   425618      5         2850        0            7.955074
2   356471      3         1780        0            7.484369
3  7412512    100        90000        1           11.407565
```

Practice Data Analysis Projects with ProjectPro!

Learning how to deal with outliers in Python is essential for data analysis. But just reading about it isn't enough. You need to practice on real-world projects to truly understand it. That's where ProjectPro comes in handy. It offers more than 250 solved end-to-end data science and big data projects for you to try out. Working on data analysis projects will teach you a lot about data analysis and how to handle outliers in practice. So, if you want to get better at handling outliers and become a pro at data analysis, try ProjectPro!
