How to join Excel data from Multiple files using Pandas?

This recipe helps you join Excel data from Multiple files using Pandas.

Recipe Objective: How to join Excel data from Multiple files using Pandas?

In most big data scenarios, we need to merge multiple files or tables on shared keys or other conditions to create a unified data model for faster analysis. In this recipe, we will learn how to merge multiple Excel files into one using Python.


System Requirements To Merge Excel Files in Python Pandas

- Install the pandas Python module: pip install pandas. Reading and writing .xlsx files also requires the openpyxl package: pip install openpyxl

- The code below can be run in a Jupyter Notebook or any Python console.

- This scenario uses three Excel files to perform the joins: a Products dataset, an Orders dataset, and a Customers dataset.

Steps To Merge Excel Files Into One Using Python

The following steps show how to merge Excel files into one using Python and pandas.

Step 1: Import the modules

This example uses the pandas library, which provides data structures and operations for manipulating numerical tables and time series.

    import pandas as pd

Step 2: Read the Excel Files

The code below reads the data from the Excel files into DataFrames using pandas.

    orders = pd.read_excel("orders.xlsx")
    products = pd.read_excel("products.xlsx")
    customers = pd.read_excel("customers.xlsx")

Step 3: Join operations on the DataFrames

The "how" parameter of the merge function selects the type of join: left, right, inner, or outer.
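To make the difference between the join types concrete, here is a minimal sketch that runs the same merge with each value of "how" and compares the row counts. The two small frames are hypothetical stand-ins for the Excel data (the "quantity" column and the sample values are assumptions, not taken from the recipe's files).

```python
import pandas as pd

# Hypothetical stand-ins for two of the Excel files; real columns may differ.
orders = pd.DataFrame({"Product_id": [1, 2, 3], "quantity": [10, 20, 30]})
customers = pd.DataFrame(
    {"Product_id": [2, 3, 4], "customer_name": ["Ana", "Ben", "Cy"]}
)

# Run the same merge once per join type and record how many rows come back.
row_counts = {
    how: len(pd.merge(orders, customers, on="Product_id", how=how))
    for how in ("left", "right", "inner", "outer")
}
print(row_counts)
```

Only Product_id 2 and 3 appear in both frames, so the inner join keeps two rows, the left and right joins keep three each, and the outer join keeps four.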

Left Join:

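    import pandas as pd
    # Read the three Excel files into DataFrames
    orders = pd.read_excel("orders.xlsx")
    products = pd.read_excel("products.xlsx")
    customers = pd.read_excel("customers.xlsx")
    # Left join: keep every row of orders and add customer columns where Product_id matches
    result = pd.merge(
        orders,
        customers[["Product_id", "Order_id", "customer_name", "customer_email"]],
        on="Product_id",
        how="left",
    )
    result.head()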

Output of the above code:

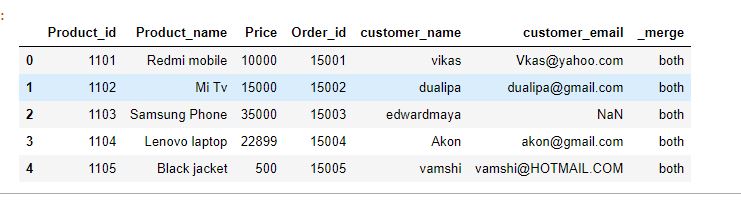
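One detail worth knowing when joining on "Product_id" alone: if both frames also carry another column with the same name, pandas keeps both copies and appends the suffixes "_x" and "_y". The sketch below assumes, hypothetically, that the orders data also has an "Order_id" column; the sample values are invented for illustration.

```python
import pandas as pd

# Hypothetical sample data; it is an assumption that orders also has Order_id.
orders = pd.DataFrame(
    {"Product_id": [1, 2], "Order_id": [100, 101], "quantity": [5, 7]}
)
customers = pd.DataFrame(
    {"Product_id": [1, 2], "Order_id": [100, 101], "customer_name": ["Ana", "Ben"]}
)

# Joining on Product_id only: the shared Order_id column is duplicated
# as Order_id_x (from orders) and Order_id_y (from customers).
by_product = pd.merge(orders, customers, on="Product_id", how="left")
print(list(by_product.columns))

# Joining on both keys keeps a single Order_id column.
by_both = pd.merge(orders, customers, on=["Product_id", "Order_id"], how="left")
print(list(by_both.columns))
```

Whether to join on one key or both depends on how the datasets relate; joining on both is only appropriate when the pair identifies matching rows.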

Inner Join:

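    import pandas as pd
    # Read the three Excel files into DataFrames
    orders = pd.read_excel("orders.xlsx")
    products = pd.read_excel("products.xlsx")
    customers = pd.read_excel("customers.xlsx")
    # Inner join: keep only rows whose Product_id appears in both frames
    result = pd.merge(products, customers, on="Product_id", how="inner", indicator=True)
    result.head()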

Output of the above code:

Right Join:

    import pandas as pd
    # Read the three Excel files into DataFrames
    orders = pd.read_excel("orders.xlsx")
    products = pd.read_excel("products.xlsx")
    customers = pd.read_excel("customers.xlsx")
    # Right join: keep every row of customers and add order columns where Product_id matches
    result = pd.merge(
        orders,
        customers[["Product_id", "Order_id", "customer_name", "customer_email"]],
        on="Product_id",
        how="right",
        indicator=True,
    )
    result.head()

Output of the above code:

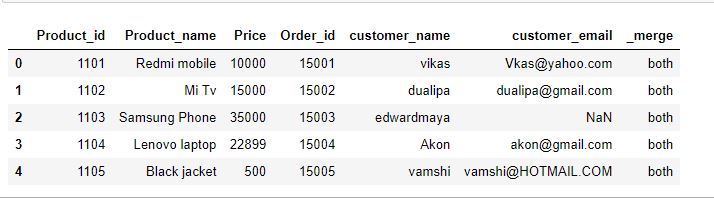
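The indicator=True argument used above adds a "_merge" column that records where each row came from: "both", "left_only", or "right_only". As a small sketch on hypothetical in-memory data (the frames and values are assumptions for illustration), that column can be used to count matches and to pull out the unmatched rows:

```python
import pandas as pd

# Hypothetical stand-ins for the Excel data.
orders = pd.DataFrame({"Product_id": [1, 2, 3], "quantity": [10, 20, 30]})
customers = pd.DataFrame(
    {"Product_id": [2, 3, 4], "customer_name": ["Ana", "Ben", "Cy"]}
)

# Right join with an indicator column named "_merge".
result = pd.merge(orders, customers, on="Product_id", how="right", indicator=True)

# Count how many rows matched and how many exist only in the right frame.
counts = result["_merge"].value_counts()
print(counts)

# Rows present in customers but missing from orders; their order columns are NaN.
unmatched = result[result["_merge"] == "right_only"]
print(unmatched)
```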

Outer Join:

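    import pandas as pd
    # Read the three Excel files into DataFrames
    orders = pd.read_excel("orders.xlsx")
    products = pd.read_excel("products.xlsx")
    customers = pd.read_excel("customers.xlsx")
    # Outer join: keep all rows from both frames, matched on Product_id where possible
    result = pd.merge(products, customers, on="Product_id", how="outer", indicator=True)
    result.head()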

Output of the above code:
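Each join in this step combines two frames at a time. To bring all three datasets into a single table, merges can be chained. The following is a minimal sketch under assumed column layouts: the frames, the "product_name" and "quantity" columns, and the choice of keys are hypothetical and may not match the actual Excel files.

```python
import pandas as pd

# Hypothetical stand-ins for the three Excel files.
orders = pd.DataFrame(
    {"Order_id": [100, 101, 102], "Product_id": [1, 2, 2], "quantity": [5, 7, 1]}
)
products = pd.DataFrame({"Product_id": [1, 2], "product_name": ["Pen", "Ink"]})
customers = pd.DataFrame(
    {"Order_id": [100, 101, 102], "customer_name": ["Ana", "Ben", "Cy"]}
)

# Chain two left joins: first attach product details, then customer details.
combined = (
    orders
    .merge(products, on="Product_id", how="left")
    .merge(customers, on="Order_id", how="left")
)
print(combined)
```

Starting from orders and using left joins keeps one row per order, which is often a convenient shape for analysis; other starting frames or join types may suit different questions.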

Step 4: Write the result to an Excel file

After getting the result, write it to HDFS or a local file.

    import pandas as pd
    # Read the three Excel files into DataFrames
    orders = pd.read_excel("orders.xlsx")
    products = pd.read_excel("products.xlsx")
    customers = pd.read_excel("customers.xlsx")
    # No "how" is given, so merge uses its default, an inner join
    result = pd.merge(
        orders,
        customers[["Product_id", "Order_id", "customer_name", "customer_email"]],
        on="Product_id",
    )
    result.head()
    # Write the result to a local Excel file, leaving out the index column
    result.to_excel("Results.xlsx", index=False)

The code above writes an Excel file, Results.xlsx, to the directory from which the code is executed.
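If a CSV file is preferred over an Excel workbook, DataFrame.to_csv is the counterpart of to_excel. The sketch below uses a hypothetical result frame and a temporary directory (both assumptions for illustration) and reads the file back to confirm what was written.

```python
import os
import tempfile

import pandas as pd

# Hypothetical merged result standing in for the output of the joins.
result = pd.DataFrame({"Product_id": [1, 2], "customer_name": ["Ana", "Ben"]})

# Write to a temporary directory so the sketch does not touch existing files.
out_dir = tempfile.mkdtemp()
csv_path = os.path.join(out_dir, "Results.csv")
result.to_csv(csv_path, index=False)  # index=False omits the row index column

# Read the file back to check its contents.
round_trip = pd.read_csv(csv_path)
print(round_trip)
```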