Ultimate Machine Learning Cheatsheet: Algorithms, Techniques, Concepts

Your go-to Machine Learning Cheatsheet for quick references and insights on algorithms, techniques, and concepts. | ProjectPro

As technology continues to evolve at an unprecedented pace, machine learning has emerged as a powerful tool, enabling computers to learn from data and make predictions or decisions without explicit programming. From autonomous vehicles to personalized recommendations, machine learning is transforming countless industries and shaping our everyday lives.

However, diving into the realm of machine learning can be an overwhelming experience, especially for beginners. With a myriad of algorithms, concepts, and terminologies to grasp, it's easy to feel lost amidst the vast sea of information. But fear not! We have your back.

Allow us to introduce you to the Machine Learning Cheatsheet—an invaluable resource designed to demystify the fundamental concepts and algorithms of machine learning. Whether you're a seasoned data scientist looking for a quick reference or a novice taking your first steps into the field, this machine learning cheat sheet will serve as your compass, guiding you through the intricate landscape of machine learning. From supervised to unsupervised learning, we'll explore the core algorithms that form the backbone of modern machine learning models.

So, buckle up and get ready to unravel the mysteries of machine learning concepts—one cheat at a time!

Getting Started with the Machine Learning Cheatsheet!

Machine learning problems predominantly fall into two major categories: supervised learning and unsupervised learning. We will first explain both types of learning so that, with the foundational concepts in place, you are ready to use this cheat sheet as a quick reference for choosing among machine learning algorithms. We start with the algorithms that fall under supervised learning and then move on to the models used for unsupervised learning problems. Let's explore them in detail.


Supervised Learning

Supervised learning is a fundamental concept in machine learning, playing a crucial role in solving a wide range of predictive and classification tasks. In supervised learning, we train a model using labeled data, where the desired outcome or target variable is already known. The goal is to learn a mapping function that can make accurate predictions on new, unseen data. The process of supervised learning typically involves two main components: the input features or independent variables, and the corresponding output or dependent variable. The model learns from the input-output pairs in the training data, identifying patterns and relationships to generalize its predictions.

In supervised learning, there are two main types of tasks: classification, where the target is a discrete class label (e.g., spam or not spam), and regression, where the target is a continuous numeric value (e.g., a house price). The choice between them depends on the nature of the problem and the type of the target variable, so understanding the problem is the first step toward applying the appropriate techniques and algorithms.

Linear Regression

Linear regression is a supervised learning algorithm used for predicting continuous numeric values. The linear regression model represents the relationship between the dependent variable and one or more independent variables as a linear equation.

Equation:

The linear regression equation is written as: y = b0 + b1*x1 + b2*x2 + ... + bn*xn, where y is the dependent variable, b0 is the intercept, b1, b2, ..., bn are the coefficients, and x1, x2, ..., xn are the independent variables.

Concepts:

Residuals, Ordinary Least Squares (OLS), Overfitting and Underfitting, multicollinearity, homoscedasticity, Mean squared error (MSE), root mean squared error (RMSE), mean absolute error (MAE), and R-squared value.
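To make OLS and the error metrics listed above concrete, here is a minimal NumPy sketch, assuming a small synthetic dataset. The helper names `fit_ols` and `regression_metrics` are hypothetical and not from any library.

```python
import numpy as np

def fit_ols(X, y):
    """Ordinary Least Squares: returns (b0, array of coefficients b1..bn)."""
    # Prepend a column of ones so the intercept b0 is estimated with the other coefficients
    X1 = np.column_stack([np.ones(len(X)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return beta[0], beta[1:]

def regression_metrics(y_true, y_pred):
    residuals = y_true - y_pred
    mse = np.mean(residuals ** 2)
    return {
        "MSE": mse,
        "RMSE": np.sqrt(mse),
        "MAE": np.mean(np.abs(residuals)),
        # R-squared: 1 - (residual sum of squares / total sum of squares)
        "R2": 1 - np.sum(residuals ** 2) / np.sum((y_true - y_true.mean()) ** 2),
    }

# Synthetic data (an assumption for illustration): y = 1.5 + 2*x1 - 3*x2 + small noise
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))
y = 1.5 + 2.0 * X[:, 0] - 3.0 * X[:, 1] + rng.normal(scale=0.1, size=100)
b0, b = fit_ols(X, y)
metrics = regression_metrics(y, b0 + X @ b)
```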

Scenarios:

It is used to identify the linear relationship between the dependent variable and the independent variables.

It works best when the data has little noise and few outliers, because the squared-error objective is sensitive to outliers.

It is suitable for predicting continuous numeric values.

It can serve as a baseline model for comparison with more complex algorithms.

It can help identify the most influential features in the prediction task.


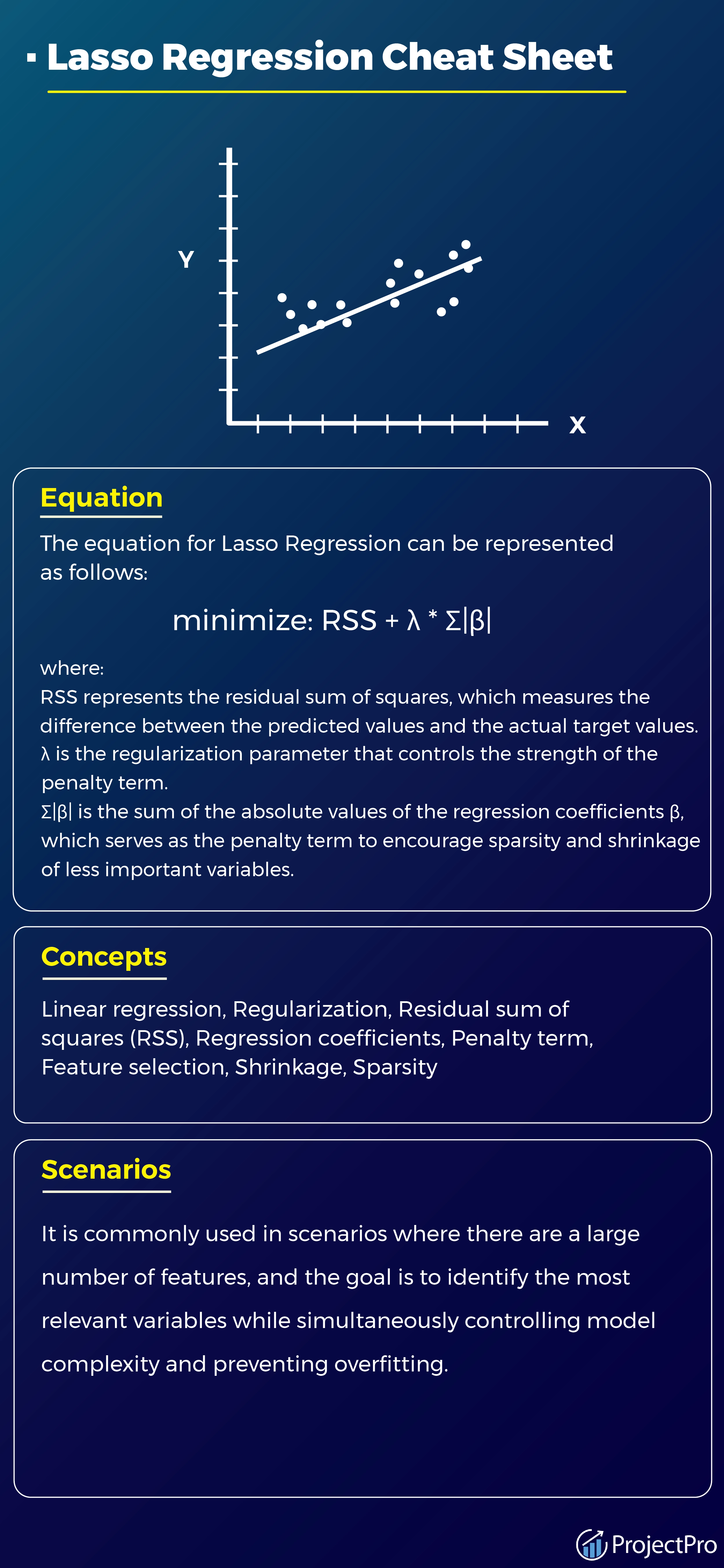

Lasso Regression

Lasso Regression (Least Absolute Shrinkage and Selection Operator), also known as L1-regularized regression, is a linear regression technique that performs both feature selection and regularization. It adds the sum of the absolute values of the coefficients as a penalty term to the loss function, which encourages sparsity: the coefficients of less influential variables are shrunk towards zero, and some are set exactly to zero.

Equation:

The equation for Lasso Regression can be represented as follows:

minimize: RSS + λ * Σ|β|

where:

RSS represents the residual sum of squares, i.e., the sum of the squared differences between the predicted values and the actual target values.

λ is the regularization parameter that controls the strength of the penalty term.

Σ|β| is the sum of the absolute values of the regression coefficients β, which serves as the penalty term to encourage sparsity and shrinkage of less important variables.
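The objective above can be minimized by cyclic coordinate descent, where each coefficient has a closed-form "soft-thresholding" update. The following is a minimal NumPy sketch, assuming centered data and an unpenalized intercept; the function names are hypothetical.

```python
import numpy as np

def soft_threshold(z, t):
    # Shrinks z toward zero by t and sets it to exactly zero when |z| <= t
    return np.sign(z) * max(abs(z) - t, 0.0)

def lasso_coordinate_descent(X, y, lam, n_iter=200):
    """Minimize RSS + lam * sum(|beta|) by cyclic coordinate descent (intercept not penalized)."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean  # centering lets us drop the intercept while fitting
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        for j in range(X.shape[1]):
            # Partial residual: the target minus the contribution of every feature except j
            r_j = yc - Xc @ beta + Xc[:, j] * beta[j]
            z = Xc[:, j] @ r_j
            # Closed-form 1-D minimizer of ||r_j - x_j * b||^2 + lam * |b|
            beta[j] = soft_threshold(z, lam / 2) / (Xc[:, j] @ Xc[:, j])
    intercept = y_mean - x_mean @ beta
    return intercept, beta

# Synthetic data (an assumption): only feature 0 influences y
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))
y = 3.0 * X[:, 0]
b0, beta = lasso_coordinate_descent(X, y, lam=20.0)
```

With this penalty the irrelevant feature's coefficient is driven to exactly zero, while the relevant one is only slightly shrunk. Note that scikit-learn's `Lasso` minimizes (1 / (2n)) * RSS + alpha * Σ|β|, so its `alpha` corresponds to λ / (2n) in the notation above.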

Concepts:

Linear regression, Regularization, Residual sum of squares (RSS), Regression coefficients, Penalty term, Feature selection, Shrinkage, Sparsity

Scenarios:

It is commonly used in scenarios where there are a large number of features, and the goal is to identify the most relevant variables while simultaneously controlling model complexity and preventing overfitting.

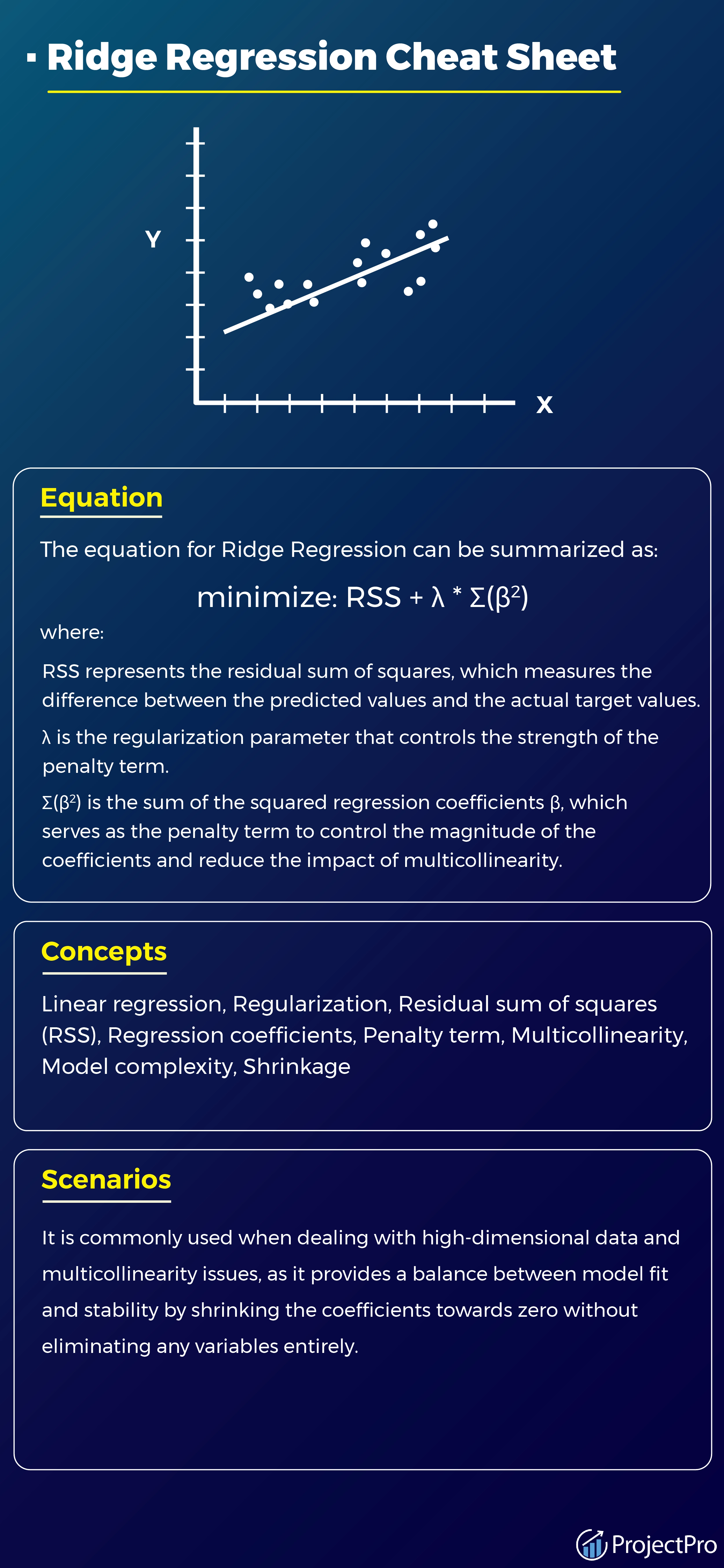

Ridge Regression

Ridge Regression, also known as L2-regularized regression, is a linear regression technique that adds a penalty term proportional to the sum of the squared coefficients to the loss function, helping to control model complexity and reduce the impact of multicollinearity in the data.

Equation:

The equation for Ridge Regression can be summarized as:

minimize: RSS + λ * Σ(β^2)

where:

RSS represents the residual sum of squares, i.e., the sum of the squared differences between the predicted values and the actual target values.

λ is the regularization parameter that controls the strength of the penalty term.

Σ(β^2) is the sum of the squared regression coefficients β, which serves as the penalty term to control the magnitude of the coefficients and reduce the impact of multicollinearity.
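Unlike Lasso, the Ridge objective has a closed-form solution: β = (XᵀX + λI)⁻¹ Xᵀy on centered data. The following minimal NumPy sketch assumes an unpenalized intercept and uses two nearly identical (collinear) features to show how the penalty stabilizes the coefficients. The `ridge` helper is hypothetical.

```python
import numpy as np

def ridge(X, y, lam):
    """Minimize RSS + lam * sum(beta^2) in closed form; the intercept is not penalized."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    # Penalized normal equations: (X^T X + lam * I) beta = X^T y
    A = Xc.T @ Xc + lam * np.eye(X.shape[1])
    beta = np.linalg.solve(A, Xc.T @ yc)
    return y_mean - x_mean @ beta, beta

# Synthetic multicollinear data (an assumption): the second column is almost a copy of the first
rng = np.random.default_rng(1)
x0 = rng.normal(size=100)
X = np.column_stack([x0, x0 + 1e-3 * rng.normal(size=100)])
y = X[:, 0] + X[:, 1] + 0.1 * rng.normal(size=100)
intercept, b = ridge(X, y, lam=1.0)
```

The penalty splits the weight almost evenly between the two collinear columns, and the coefficient norm shrinks steadily as λ grows. scikit-learn's `Ridge` uses the same RSS + alpha * Σβ² form, so its `alpha` plays the role of λ here.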

Concepts:

Linear regression, Regularization, Residual sum of squares (RSS), Regression coefficients, Penalty term, Multicollinearity, Model complexity, Shrinkage

Scenarios:

It is commonly used when dealing with high-dimensional data and multicollinearity issues, as it provides a balance between model fit and stability by shrinking the coefficients towards zero without eliminating any variables entirely.


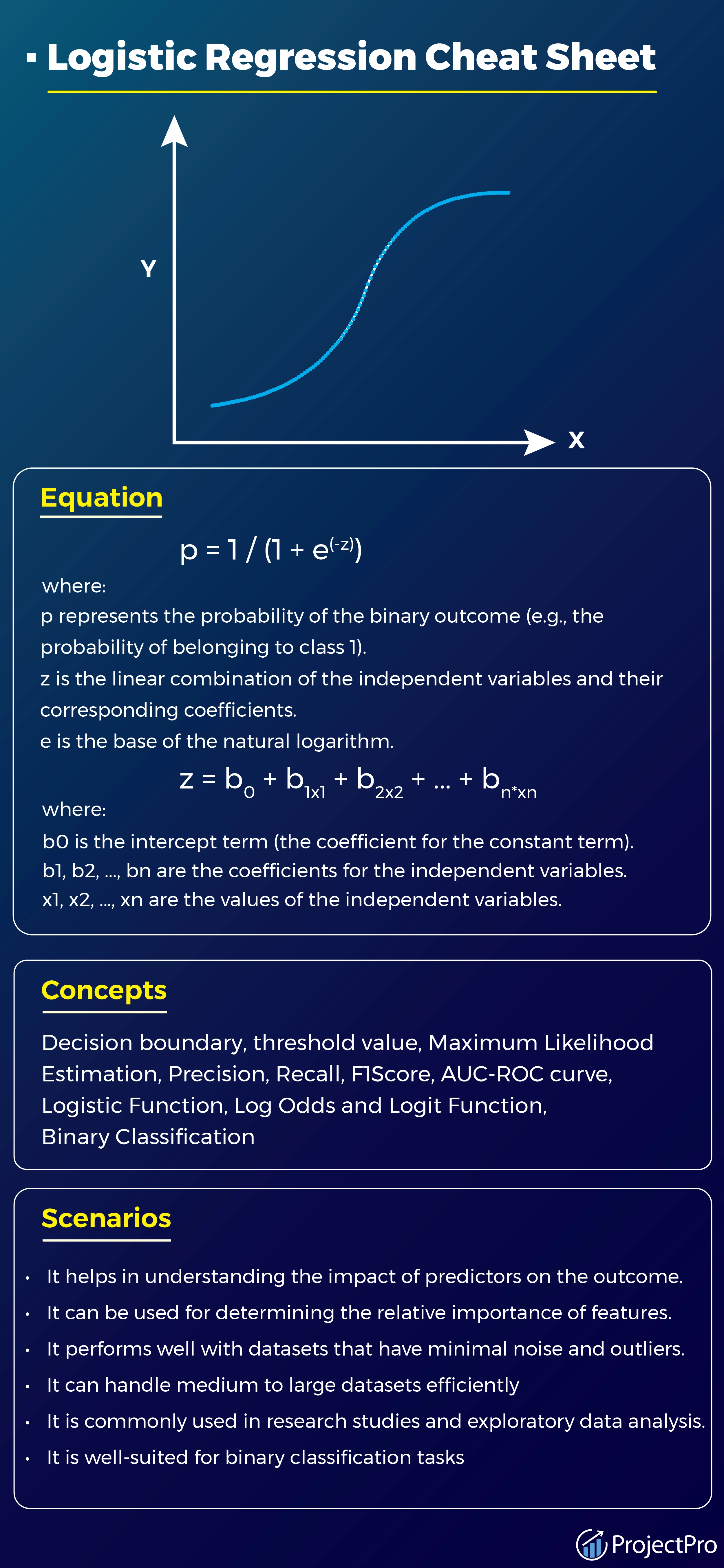

Logistic Regression

Logistic regression is a supervised learning algorithm used mainly for binary classification. The model uses the logistic function (also known as the sigmoid function) to map the linear combination of the independent variables to a probability value between 0 and 1.

Equation:

p = 1 / (1 + e^(-z))

Where:

p represents the probability of the binary outcome (e.g., the probability of belonging to class 1).

z is the linear combination of the independent variables and their corresponding coefficients.

e is the base of the natural logarithm.

The linear combination (z) is calculated as:

z = b0 + b1*x1 + b2*x2 + ... + bn*xn

Where:

b0 is the intercept term (the coefficient for the constant term).

b1, b2, ..., bn are the coefficients for the independent variables.

x1, x2, ..., xn are the values of the independent variables.
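The coefficients b0..bn are typically estimated by maximum likelihood. The following minimal NumPy sketch does this with plain gradient descent on the average log loss (the negative log-likelihood), assuming synthetic data and a fixed learning rate. The helper names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    # p = 1 / (1 + e^(-z))
    return 1.0 / (1.0 + np.exp(-z))

def fit_logistic(X, y, lr=0.1, n_iter=2000):
    """Estimate b0..bn by gradient descent on the average negative log-likelihood."""
    X1 = np.column_stack([np.ones(len(X)), X])  # column of ones for the intercept b0
    b = np.zeros(X1.shape[1])
    for _ in range(n_iter):
        p = sigmoid(X1 @ b)                 # predicted probabilities for class 1
        grad = X1.T @ (p - y) / len(y)      # gradient of the average log loss
        b -= lr * grad
    return b

def predict_proba(b, X):
    return sigmoid(b[0] + X @ b[1:])

# Synthetic binary labels (an assumption): z = 0.5 + 2*x1 - 1*x2
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
true_p = sigmoid(0.5 + 2.0 * X[:, 0] - 1.0 * X[:, 1])
y = (rng.random(200) < true_p).astype(float)
b = fit_logistic(X, y)
```

Classifying with a threshold value of 0.5 on `predict_proba` corresponds to the decision boundary z = 0.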

Concepts:

Decision boundary, Threshold value, Maximum Likelihood Estimation, Precision, Recall, F1 Score, AUC-ROC curve, Logistic Function, Log Odds and Logit Function, Binary Classification

Scenarios:

It helps in understanding the impact of predictors on the outcome.

It can be used for determining the relative importance of features, provided the features are on comparable scales.

It works best on datasets with little noise and few outliers.

It can handle medium to large datasets efficiently.

It is commonly used in research studies and exploratory data analysis.

It is well-suited for binary classification tasks.

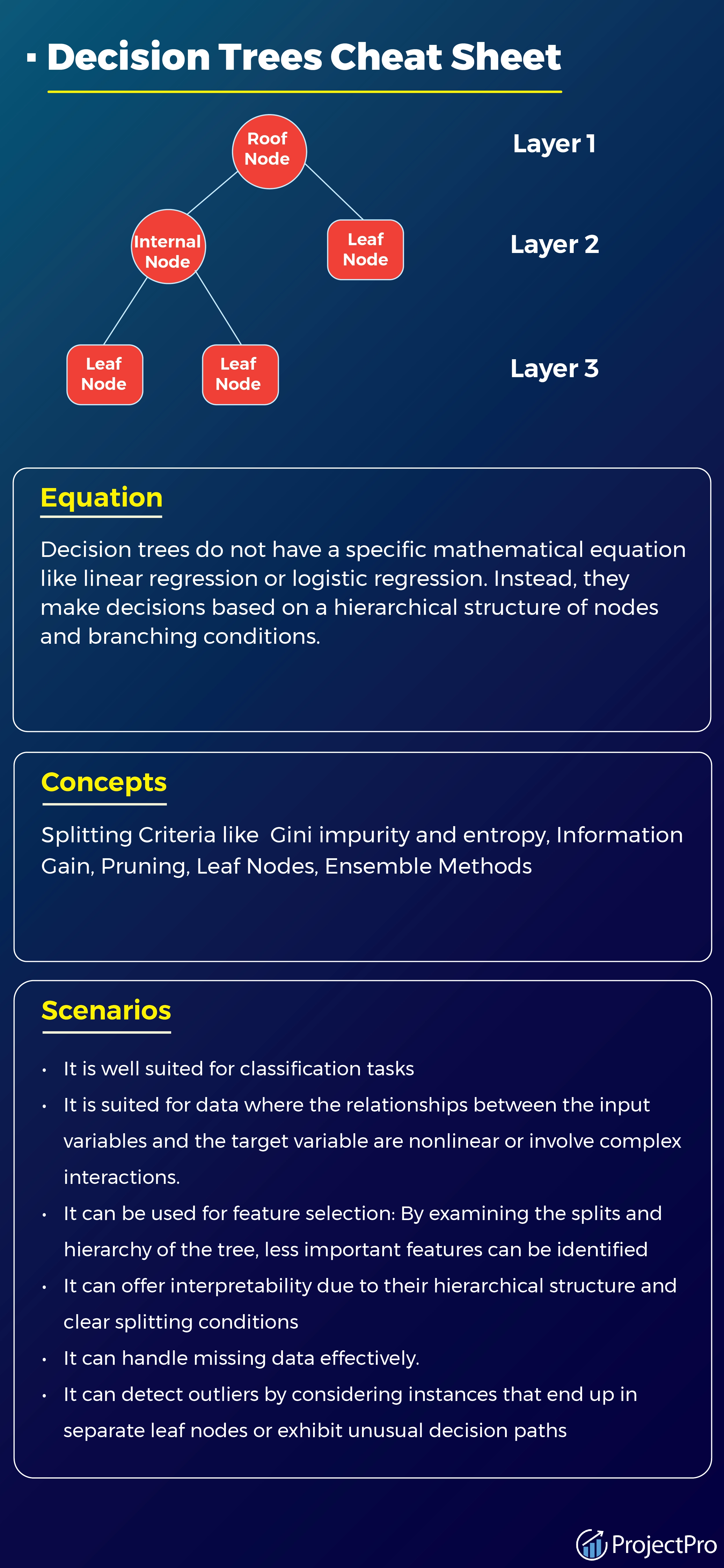

Decision Trees

A decision tree is a supervised learning algorithm that recursively partitions the data using a sequence of hierarchical conditions (splits on feature values) to make predictions or decisions.

Equation:

Decision trees do not have a specific mathematical equation like linear regression or logistic regression. Instead, they make decisions based on a hierarchical structure of nodes and branching conditions.

Concepts:

Splitting Criteria like Gini impurity and entropy, Information Gain, Pruning, Leaf Nodes, Ensemble Methods
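The splitting criteria above can be computed in a few lines. This minimal NumPy sketch shows Gini impurity, entropy, and information gain, plus an exhaustive search for the best threshold on a single feature, which is the core step a tree repeats at every node. The function names are hypothetical.

```python
import numpy as np

def class_proportions(y):
    _, counts = np.unique(y, return_counts=True)
    return counts / counts.sum()

def gini(y):
    # Gini impurity: 1 - sum_k p_k^2 (0 for a pure node)
    p = class_proportions(y)
    return 1.0 - np.sum(p ** 2)

def entropy(y):
    # Entropy in bits: -sum_k p_k * log2(p_k) (0 for a pure node)
    p = class_proportions(y)
    return float(-np.sum(p * np.log2(p)))

def information_gain(parent, left, right, impurity=entropy):
    # Parent impurity minus the size-weighted impurity of the two children
    n = len(parent)
    weighted = len(left) / n * impurity(left) + len(right) / n * impurity(right)
    return impurity(parent) - weighted

def best_threshold(x, y, impurity=entropy):
    # Try each observed value t as the split "x <= t" and keep the highest gain
    best_t, best_gain = None, -np.inf
    for t in np.unique(x)[:-1]:
        gain = information_gain(y, y[x <= t], y[x > t], impurity)
        if gain > best_gain:
            best_t, best_gain = t, gain
    return best_t, best_gain

y = np.array([0, 0, 1, 1])
x = np.array([1.0, 2.0, 3.0, 4.0])
threshold, gain = best_threshold(x, y)
```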

Scenarios:

It is well suited for classification tasks and can also be used for regression.

It is suited for data where the relationships between the input variables and the target variable are nonlinear or involve complex interactions.

It can be used for feature selection: by examining the splits and hierarchy of the tree, less important features can be identified.

It offers interpretability due to its hierarchical structure and clear splitting conditions.

Some implementations can handle missing data directly, for example through surrogate splits.

It can help detect outliers by flagging instances that end up in small, separate leaf nodes or follow unusual decision paths.

Random Forest

Random forest is an ensemble learning method that combines multiple decision trees to make more accurate predictions and improve generalization.

Equation:

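The random forest algorithm does not have a single closed-form equation. Instead, it combines the predictions of many decision trees: the final prediction is the majority vote of the trees for classification, or the average of their predictions for regression.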

Concepts:

Ensemble Learning, Decision Trees, Bagging, Out-of-Bag (OOB) Error, Random Feature Selection
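The sketch below is a toy random forest showing bagging (bootstrap samples), random feature selection, and majority voting. To stay short, it assumes depth-1 trees (decision stumps), binary 0/1 integer labels, and synthetic data; all function names are hypothetical. A full random forest grows deeper trees and re-samples features at every split. With stumps there is only one split, so sampling features once per tree is equivalent.

```python
import numpy as np

def gini(y):
    # Gini impurity of a label array: 1 - sum_k p_k^2
    _, counts = np.unique(y, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)

def fit_stump(X, y, features):
    """Depth-1 tree: best (feature, threshold) among `features` by weighted Gini impurity."""
    majority = np.bincount(y).argmax()
    best = (np.inf, features[0], np.inf, majority, majority)  # fallback: always predict majority
    for j in features:
        for t in np.unique(X[:, j])[:-1]:
            left, right = y[X[:, j] <= t], y[X[:, j] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best[0]:
                best = (score, j, t, np.bincount(left).argmax(), np.bincount(right).argmax())
    return best[1:]  # (feature, threshold, left_class, right_class)

def fit_forest(X, y, n_trees=51, max_features=2, seed=0):
    rng = np.random.default_rng(seed)
    n, d = X.shape
    forest = []
    for _ in range(n_trees):
        rows = rng.integers(0, n, size=n)                         # bootstrap sample (bagging)
        feats = rng.choice(d, size=max_features, replace=False)   # random feature subset
        forest.append(fit_stump(X[rows], y[rows], feats))
    return forest

def predict_forest(forest, X):
    # Each stump votes; the forest predicts the majority class for each sample
    votes = np.array([np.where(X[:, j] <= t, lc, rc) for j, t, lc, rc in forest])
    return np.array([np.bincount(col).argmax() for col in votes.T])

# Synthetic data (an assumption): the label depends only on feature 0
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 3))
y = (X[:, 0] > 0).astype(int)
forest = fit_forest(X, y)
accuracy = np.mean(predict_forest(forest, X) == y)
```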

Scenarios:

It can be used for classification and regression tasks.

It can handle high-dimensional data effectively.

Random forest proximities (how often two instances land in the same leaf across the trees) can be used to flag outliers, i.e., instances with low proximity to the rest of the data.

It can handle imbalanced datasets by adjusting class weights or using sampling techniques such as oversampling or undersampling.

It can capture non-linear relationships between the predictors and the target variable.

It can serve as a benchmark model for comparison against more complex algorithms.

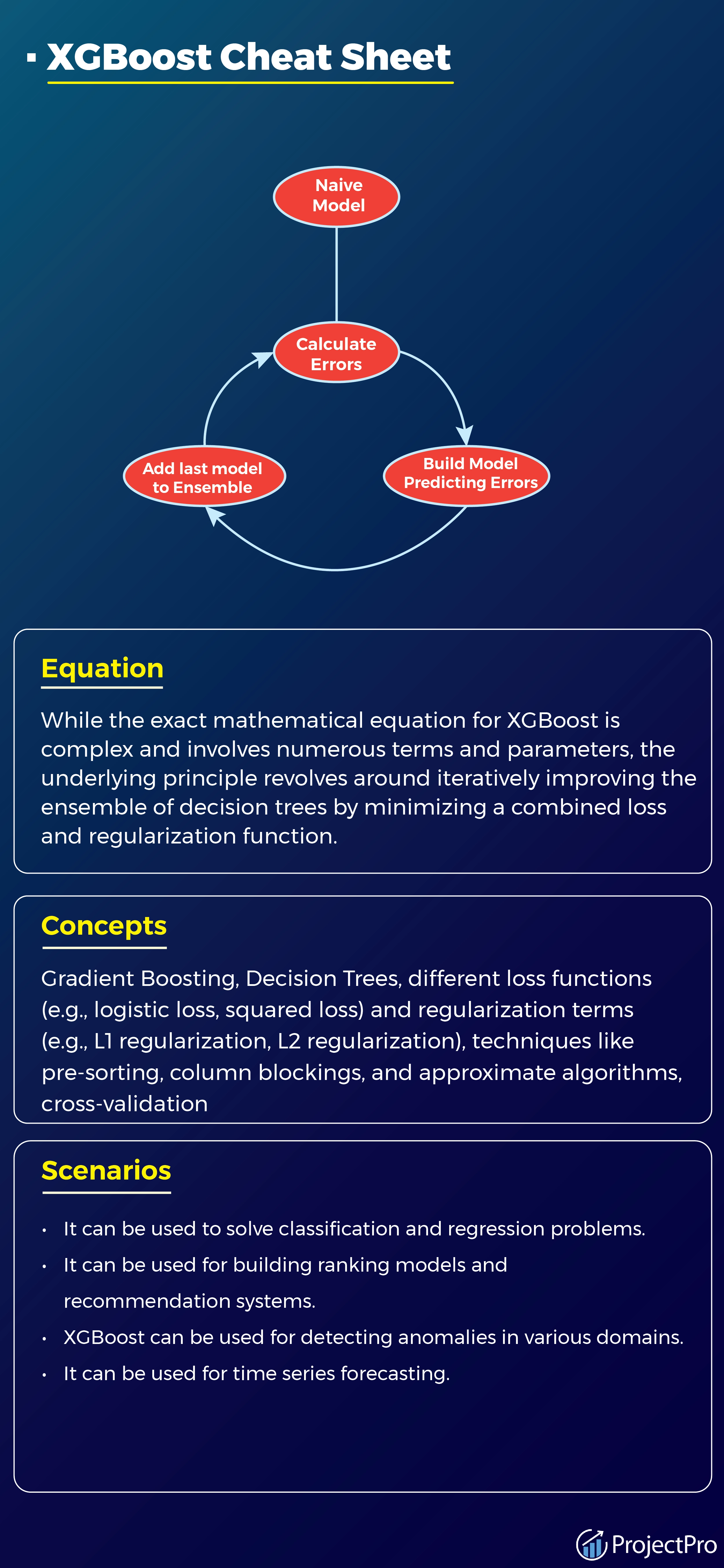

XGBoost

XGBoost (Extreme Gradient Boosting) is an ensemble learning method that combines multiple weak prediction models, typically decision trees, into a strong predictive model. It builds trees iteratively through boosting: each new tree is trained to correct the errors of the ensemble built so far.

Equation:

While the exact mathematical equation for XGBoost is complex and involves numerous terms and parameters, the underlying principle revolves around iteratively improving the ensemble of decision trees by minimizing a combined loss and regularization function.
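For reference, the regularized objective described in the XGBoost documentation can be written as follows. Here ŷ_i is the sum of the outputs of the K trees f_k, T is the number of leaves in a tree, w_j are the leaf weights, and γ and λ are regularization parameters (the library also supports an optional L1 term on the leaf weights). Using a second-order approximation of the loss, the optimal weight of leaf j depends only on G_j and H_j, the sums of the first and second derivatives g_i and h_i of the loss over the set I_j of instances in that leaf.

```latex
\mathcal{L} = \sum_{i=1}^{n} l\big(y_i, \hat{y}_i\big) + \sum_{k=1}^{K} \Omega(f_k),
\qquad \hat{y}_i = \sum_{k=1}^{K} f_k(x_i),
\qquad \Omega(f) = \gamma T + \frac{1}{2}\lambda \sum_{j=1}^{T} w_j^2

w_j^{*} = -\frac{G_j}{H_j + \lambda},
\qquad G_j = \sum_{i \in I_j} g_i,
\qquad H_j = \sum_{i \in I_j} h_i
```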

Concepts:

Gradient Boosting, Decision Trees, Loss functions (e.g., logistic loss, squared loss), Regularization terms (e.g., L1 regularization, L2 regularization), Techniques like pre-sorting, column blocks, and approximate split-finding algorithms, Cross-validation

Scenarios:

It can be used to solve classification and regression problems.

It can be used for building ranking models and recommendation systems.

XGBoost can be used for detecting anomalies in various domains.

It can be used for time series forecasting.

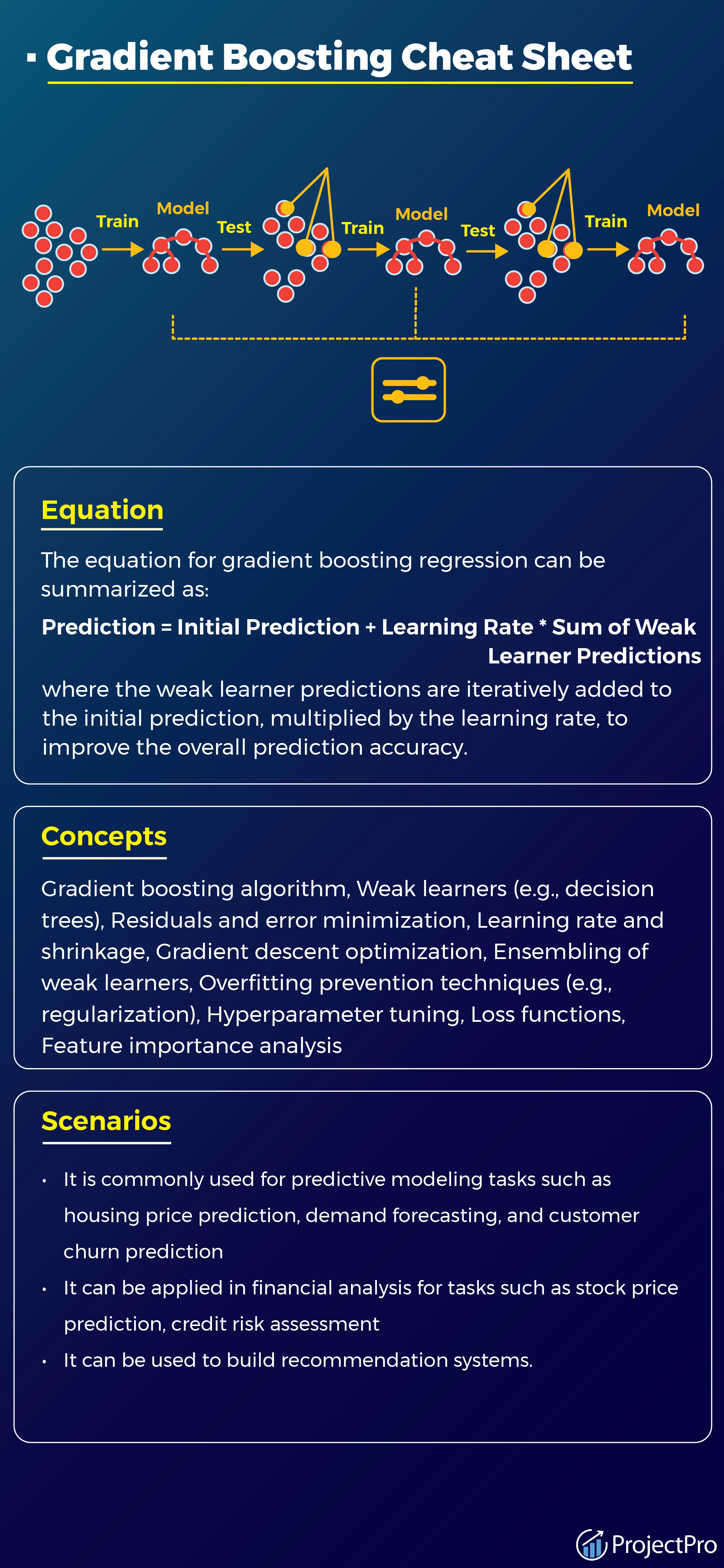

Gradient Boosting Regression

Gradient boosting regression is a powerful ensemble learning technique that combines weak regression models to make accurate predictions. It improves predictions iteratively by fitting each new weak learner to the residuals of the current ensemble. For squared-error loss, these residuals are the negative gradient of the loss, so the procedure amounts to gradient descent that minimizes the overall prediction error.

Equation:

The equation for gradient boosting regression can be summarized as:

Prediction = Initial Prediction + Learning Rate * Sum of Weak Learner Predictions

where the weak learner predictions are iteratively added to the initial prediction, multiplied by the learning rate, to improve the overall prediction accuracy.
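The equation above translates almost line by line into code. This minimal NumPy sketch assumes a single input feature, squared-error loss, and regression stumps as weak learners. The function names are hypothetical and do not mirror any library's API.

```python
import numpy as np

def fit_stump(x, r):
    """Regression stump on one feature: the split of x that best fits residuals r (least squares)."""
    best = (np.inf, None, r.mean(), r.mean())  # fallback: constant prediction
    for t in np.unique(x)[:-1]:
        left, right = r[x <= t], r[x > t]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if sse < best[0]:
            best = (sse, t, left.mean(), right.mean())
    return best[1:]  # (threshold, left_value, right_value)

def predict_stump(stump, x):
    t, left_value, right_value = stump
    if t is None:
        return np.full(len(x), left_value)
    return np.where(x <= t, left_value, right_value)

def fit_gradient_boosting(x, y, n_rounds=100, learning_rate=0.1):
    initial = y.mean()                    # initial prediction: the mean minimizes squared error
    pred = np.full(len(y), initial)
    stumps = []
    for _ in range(n_rounds):
        residuals = y - pred              # negative gradient of the loss 0.5 * (y - F)^2
        stump = fit_stump(x, residuals)   # weak learner fitted to the residuals
        pred = pred + learning_rate * predict_stump(stump, x)
        stumps.append(stump)
    return initial, learning_rate, stumps

def predict(model, x):
    initial, learning_rate, stumps = model
    # Prediction = initial prediction + learning rate * sum of weak learner predictions
    return initial + learning_rate * sum(predict_stump(s, x) for s in stumps)

# Synthetic data (an assumption): a noise-free sine curve
x = np.linspace(0, 6, 200)
y = np.sin(x)
model = fit_gradient_boosting(x, y)
```

A smaller learning rate shrinks each stump's contribution, which usually needs more rounds but reduces the risk of overfitting.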

Concepts:

Gradient boosting algorithm, Weak learners (e.g., decision trees), Residuals and error minimization, Learning rate and shrinkage, Gradient descent optimization, Ensembling of weak learners, Overfitting prevention techniques (e.g., regularization), Hyperparameter tuning, Loss functions, Feature importance analysis

Scenarios:

It is commonly used for predictive modeling tasks such as housing price prediction, demand forecasting, and customer churn prediction.

It can be applied in financial analysis for tasks such as stock price prediction and credit risk assessment.

It can be used to build recommendation systems.

Unsupervised Learning

Unsupervised learning is a branch of machine learning where the goal is to discover patterns, structures, and relationships in unlabeled data. Unlike supervised learning, unsupervised learning algorithms work with data that does not have predefined target labels or outcomes. Instead, the algorithms aim to find inherent patterns and groupings within the data without any explicit guidance. By exploring the inherent structure of the data, unsupervised learning provides valuable insights and helps uncover hidden patterns that may not be readily apparent. It is particularly useful in exploratory data analysis, customer segmentation, recommendation systems, and anomaly detection.

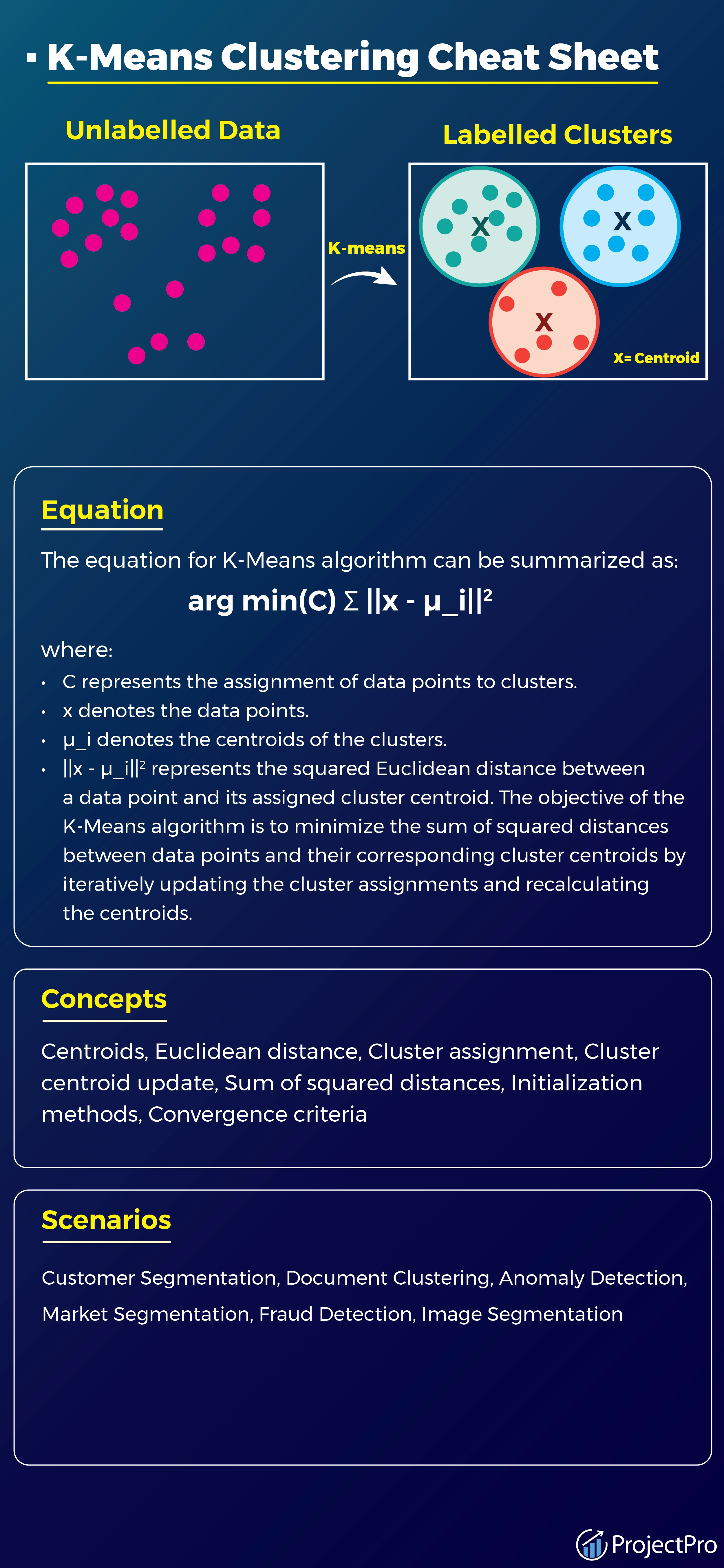

K-Means Clustering

K-Means is a popular unsupervised machine learning algorithm that aims to partition data points into k clusters based on their similarity. It iteratively assigns data points to the nearest cluster centroid and updates the centroids to minimize the sum of squared distances within each cluster.

Equation:

The objective of the K-Means algorithm can be summarized as:

arg min_C Σ_{i=1..k} Σ_{x ∈ C_i} ||x - µ_i||^2

where:

C = {C_1, ..., C_k} represents the assignment of data points to the k clusters.

x denotes a data point.

µ_i denotes the centroid (mean) of cluster C_i.

||x - µ_i||^2 represents the squared Euclidean distance between a data point and its assigned cluster centroid.

The K-Means algorithm minimizes the sum of squared distances between data points and their corresponding cluster centroids by iteratively updating the cluster assignments and recalculating the centroids.
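The assign-then-update loop described above is known as Lloyd's algorithm. Below is a minimal NumPy sketch, assuming random initialization from the data points (libraries such as scikit-learn default to the k-means++ initialization instead) and synthetic two-blob data. The `kmeans` helper is hypothetical.

```python
import numpy as np

def kmeans(X, k, n_iter=100, seed=0):
    """Lloyd's algorithm: alternate cluster assignment and centroid update."""
    rng = np.random.default_rng(seed)
    centroids = X[rng.choice(len(X), size=k, replace=False)]  # random initialization
    for _ in range(n_iter):
        # Assignment step: each point goes to its nearest centroid (squared Euclidean distance)
        dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Update step: each centroid moves to the mean of its points (kept in place if empty)
        new_centroids = np.array([
            X[labels == i].mean(axis=0) if np.any(labels == i) else centroids[i]
            for i in range(k)
        ])
        if np.allclose(new_centroids, centroids):  # convergence: centroids stopped moving
            break
        centroids = new_centroids
    # Final assignment against the final centroids
    labels = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)
    inertia = ((X - centroids[labels]) ** 2).sum()  # the objective: sum of squared distances
    return labels, centroids, inertia

# Synthetic data (an assumption): two well-separated blobs
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 0.5, size=(50, 2)), rng.normal(10, 0.5, size=(50, 2))])
labels, centroids, inertia = kmeans(X, k=2)
```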

Concepts:

Centroids, Euclidean distance, Cluster assignment, Cluster centroid update, Sum of squared distances, Initialization methods, Convergence criteria

Scenarios:

Customer Segmentation, Document Clustering, Anomaly Detection, Market Segmentation, Fraud Detection, Image Segmentation

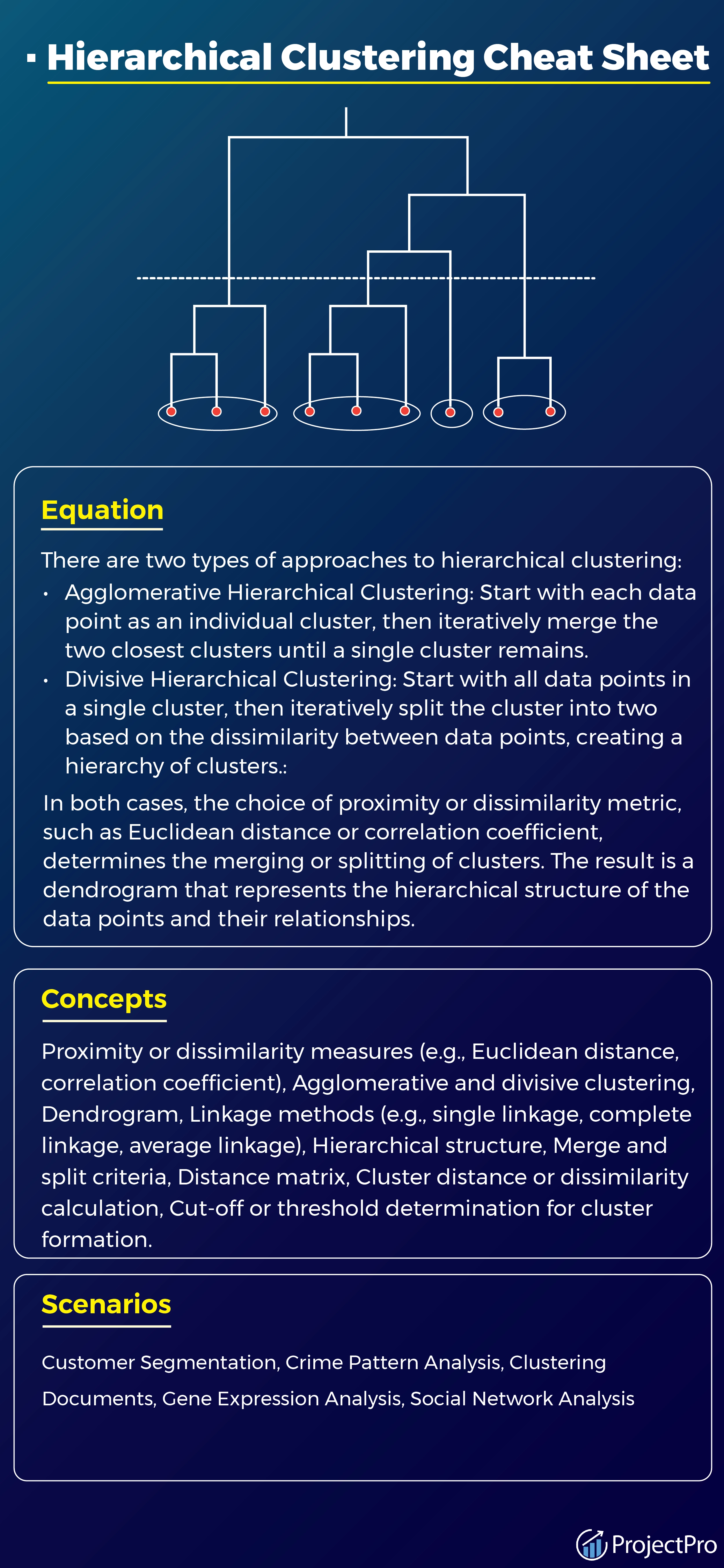

Hierarchical Clustering

Hierarchical clustering is a clustering algorithm that organizes data points into a hierarchy of nested clusters, creating a tree-like structure known as a dendrogram. It operates by iteratively merging or splitting clusters based on the proximity or dissimilarity between data points, allowing for the exploration of clusters at different levels of granularity.

Equation:

There are two types of approaches to hierarchical clustering:

Agglomerative Hierarchical Clustering: Start with each data point as an individual cluster, then iteratively merge the two closest clusters until a single cluster remains.

Divisive Hierarchical Clustering: Start with all data points in a single cluster, then iteratively split clusters into two based on the dissimilarity between data points, creating a hierarchy of clusters.

In both cases, the choice of proximity or dissimilarity metric, such as Euclidean distance or correlation coefficient, determines the merging or splitting of clusters. The result is a dendrogram that represents the hierarchical structure of the data points and their relationships.
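The agglomerative procedure can be sketched directly from its definition. This naive NumPy version assumes Euclidean distance and a choice of single, complete, or average linkage. Its running time is roughly cubic, so it only suits small datasets; SciPy's `scipy.cluster.hierarchy.linkage` does the same job efficiently. The `agglomerative` helper is hypothetical. Stopping at `n_clusters` corresponds to cutting the dendrogram at that level, and the recorded merge distances are the dendrogram heights.

```python
import numpy as np

def agglomerative(X, n_clusters, linkage="single"):
    """Repeatedly merge the two closest clusters; returns labels and merge distances."""
    D = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2))  # distance matrix
    link = {"single": np.min, "complete": np.max, "average": np.mean}[linkage]
    clusters = [[i] for i in range(len(X))]  # start: every point is its own cluster
    merge_heights = []
    while len(clusters) > n_clusters:
        best = (np.inf, 0, 1)
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Cluster distance under the chosen linkage method
                d = link(D[np.ix_(clusters[a], clusters[b])])
                if d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        clusters[a] = clusters[a] + clusters[b]  # merge cluster b into cluster a
        del clusters[b]
        merge_heights.append(d)
    labels = np.empty(len(X), dtype=int)
    for k, members in enumerate(clusters):
        labels[members] = k
    return labels, merge_heights

# Synthetic data (an assumption): two small, well-separated blobs
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 0.3, size=(10, 2)), rng.normal(5, 0.3, size=(10, 2))])
labels, merge_heights = agglomerative(X, n_clusters=2)
```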

Concepts:

Proximity or dissimilarity measures (e.g., Euclidean distance, correlation coefficient), Agglomerative and divisive clustering, Dendrogram, Linkage methods (e.g., single linkage, complete linkage, average linkage), Hierarchical structure, Merge and split criteria, Distance matrix, Cluster distance or dissimilarity calculation, Cut-off or threshold determination for cluster formation.

Scenarios:

Customer Segmentation, Crime Pattern Analysis, Clustering Documents, Gene Expression Analysis, Social Network Analysis


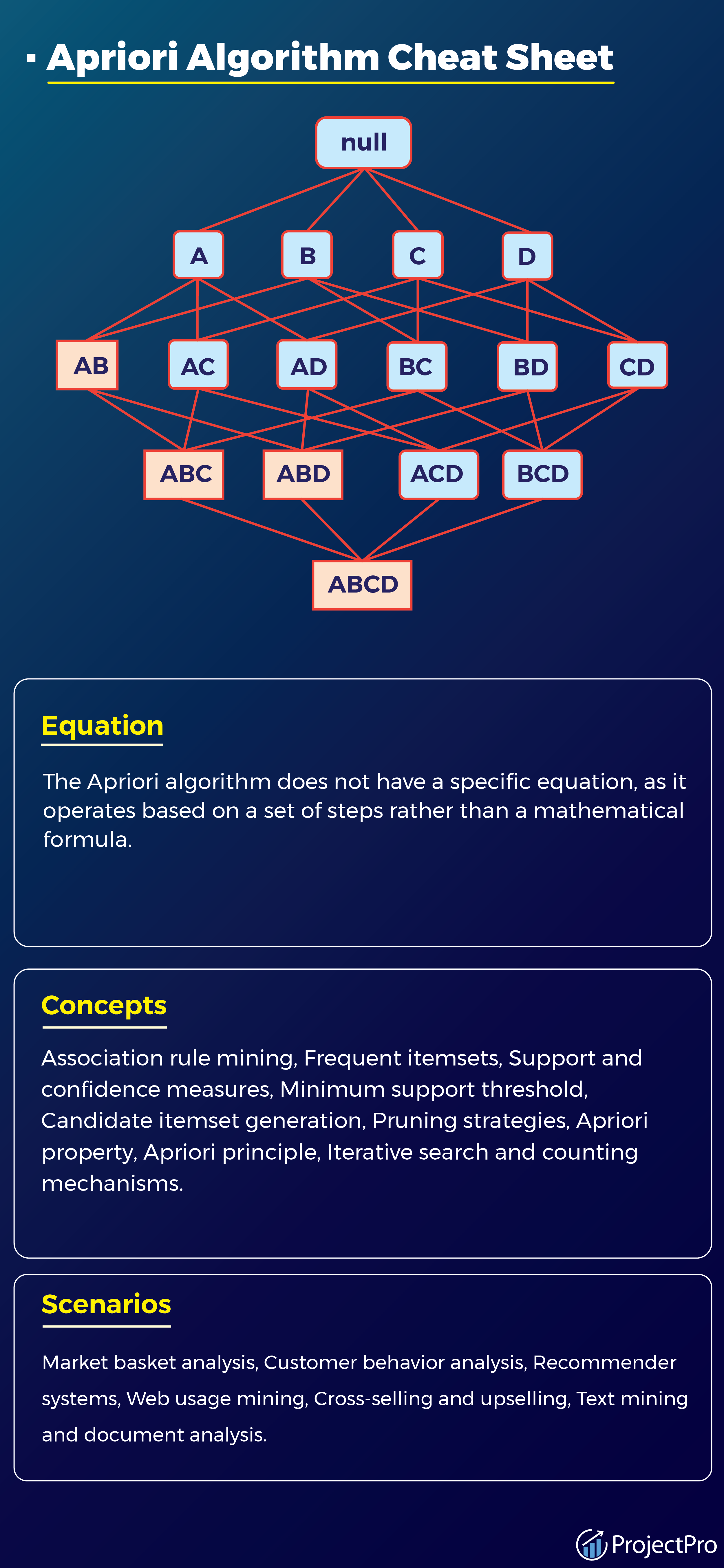

Apriori Algorithm

The Apriori algorithm is a popular association rule mining algorithm used to discover frequent itemsets in a dataset. It uses a breadth-first, level-wise search: it generates candidate itemsets of size k from the frequent itemsets of size k - 1, keeps only those that meet a minimum support threshold, and uses the Apriori property (every subset of a frequent itemset must also be frequent) to prune the search space.

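Equation:

The Apriori algorithm does not have a single equation, as it operates as a sequence of steps rather than a mathematical formula. It does, however, rely on two standard measures: support(X) = (number of transactions containing X) / (total number of transactions), and confidence(X → Y) = support(X ∪ Y) / support(X).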

Concepts:

Association rule mining, Frequent itemsets, Support and confidence measures, Minimum support threshold, Candidate itemset generation, Pruning strategies, Apriori property, Apriori principle, Iterative search and counting mechanisms.
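The level-wise search, the support threshold, and the Apriori pruning step fit in a short pure-Python sketch. It assumes transactions are given as sets of item names; the `apriori` and `confidence` helpers are hypothetical and not from any library.

```python
from itertools import combinations

def apriori(transactions, min_support):
    """Return {frozenset(itemset): support} for every itemset with support >= min_support."""
    transactions = [frozenset(t) for t in transactions]
    n = len(transactions)

    def support(itemset):
        # Fraction of transactions that contain every item in the itemset
        return sum(itemset <= t for t in transactions) / n

    items = {item for t in transactions for item in t}
    level = {frozenset([i]): support(frozenset([i])) for i in items}
    level = {s: v for s, v in level.items() if v >= min_support}  # frequent 1-itemsets
    frequent, k = {}, 1
    while level:  # breadth-first: one itemset size per iteration
        frequent.update(level)
        k += 1
        prev = set(level)
        # Candidate generation: join frequent (k-1)-itemsets into k-itemsets
        candidates = {a | b for a in prev for b in prev if len(a | b) == k}
        # Apriori property: a k-itemset can only be frequent if all its (k-1)-subsets are
        candidates = {c for c in candidates
                      if all(frozenset(s) in prev for s in combinations(c, k - 1))}
        level = {c: support(c) for c in candidates}
        level = {s: v for s, v in level.items() if v >= min_support}
    return frequent

def confidence(frequent, antecedent, consequent):
    # confidence(X -> Y) = support(X ∪ Y) / support(X)
    x = frozenset(antecedent)
    return frequent[x | frozenset(consequent)] / frequent[x]

# A small illustrative market-basket dataset (made up for this example)
transactions = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]
frequent = apriori(transactions, min_support=0.6)
```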

Scenarios:

Market basket analysis, Customer behavior analysis, Recommender systems, Web usage mining, Cross-selling and upselling, Text mining and document analysis.

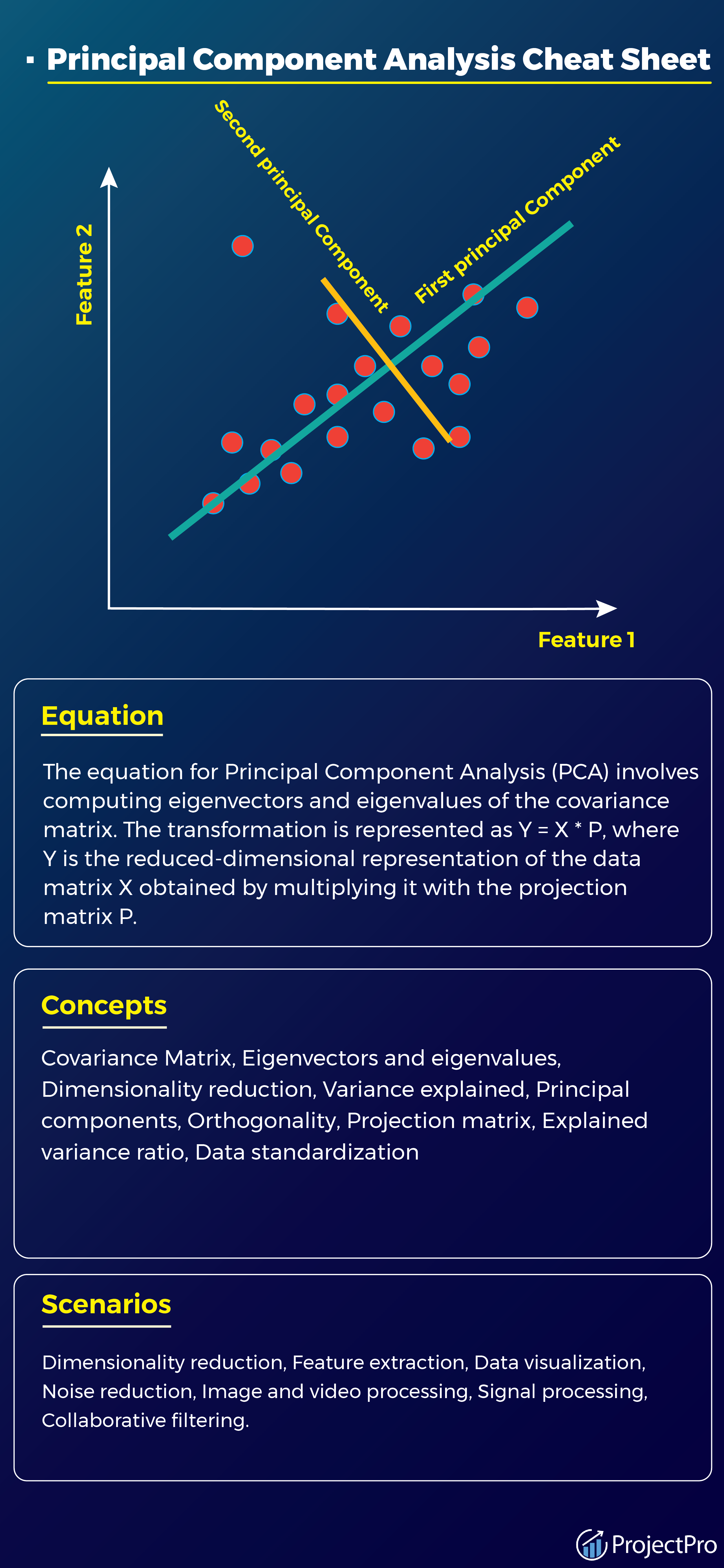

Principal Component Analysis

Principal Component Analysis (PCA) is a dimensionality reduction technique that transforms high-dimensional data into a lower-dimensional space while preserving the maximum amount of data variance. It achieves this by finding orthogonal axes, called principal components, that capture the most significant patterns and variations in the data.

Equation:

Principal Component Analysis (PCA) computes the eigenvectors and eigenvalues of the covariance matrix of the centered (often standardized) data. The transformation is represented as Y = X * P, where X is the centered data matrix, P is the projection matrix whose columns are the eigenvectors with the largest eigenvalues (the principal components), and Y is the reduced-dimensional representation of the data.
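The steps above (center, compute the covariance matrix, take its top eigenvectors, project) map directly onto NumPy. This is a minimal sketch using `np.linalg.eigh`; scikit-learn's `PCA` reaches the same components through a singular value decomposition instead. The `pca` helper and the synthetic data are assumptions for illustration.

```python
import numpy as np

def pca(X, n_components):
    """Project X onto its top principal components: Y = Xc @ P."""
    Xc = X - X.mean(axis=0)                    # center each feature
    cov = np.cov(Xc, rowvar=False)             # d x d covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)     # symmetric matrix: eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]          # largest variance first
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    P = eigvecs[:, :n_components]              # projection matrix: columns are principal components
    Y = Xc @ P
    explained_variance_ratio = eigvals[:n_components] / eigvals.sum()
    return Y, P, explained_variance_ratio

# Synthetic data (an assumption): three features that mostly vary along one direction
rng = np.random.default_rng(0)
t = rng.normal(size=200)
X = np.column_stack([t, 2 * t + 0.01 * rng.normal(size=200), 0.01 * rng.normal(size=200)])
Y, P, ratio = pca(X, n_components=2)
```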

Concepts:

Covariance Matrix, Eigenvectors and eigenvalues, Dimensionality reduction, Variance explained, Principal components, Orthogonality, Projection matrix, Explained variance ratio, Data standardization

Scenarios:

Dimensionality reduction, Feature extraction, Data visualization, Noise reduction, Image and video processing, Signal processing, Collaborative filtering.

Machine Learning Metrics Cheat Sheet

Machine Learning (ML) models rely on metrics to gauge their performance across diverse tasks. This cheat sheet provides a concise reference to two critical categories: Machine Learning Evaluation Metrics and Machine Learning Performance Metrics.

This section aims to assist you in the assessment of machine learning models. It serves as a quick reference for selecting algorithms, fine-tuning model performance, interpreting results, and making informed decisions in real-world applications.

Machine Learning Evaluation Metrics Cheat Sheet

Evaluation metrics measure predictive quality and are tailored to the task: accuracy, precision, recall, and F1 score for classification; MSE, RMSE, MAE, and R-squared for regression; and measures such as the silhouette score for clustering.

Table: Classification Metrics | Regression Metrics | Clustering Metrics
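The classification metrics named above follow directly from counts of true positives, false positives, and false negatives. This minimal NumPy sketch assumes binary labels with 1 as the positive class; returning 0.0 when a ratio is undefined is a convention chosen here, not a universal rule. The helper name is hypothetical.

```python
import numpy as np

def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive class)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = np.sum((y_pred == 1) & (y_true == 1))  # true positives
    fp = np.sum((y_pred == 1) & (y_true == 0))  # false positives
    fn = np.sum((y_pred == 0) & (y_true == 1))  # false negatives
    accuracy = np.mean(y_pred == y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

scores = classification_metrics([1, 1, 1, 0, 0, 0], [1, 1, 0, 1, 0, 0])
```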

Machine Learning Performance Metrics Cheat Sheet

Performance metrics go beyond predictive accuracy. They cover speed and efficiency (resource consumption), model interpretability, scalability, and robustness (overall stability).

Table: Speed and Efficiency | Model Interpretability | Robustness | Scalability and Resource Requirements

These metrics provide a comprehensive view of the performance and evaluation aspects of Machine Learning models across different types of tasks. They help in assessing the model's accuracy, generalization ability, efficiency, interpretability, and robustness.

Learn how to Apply ML Algorithms with ProjectPro!

We hope this comprehensive Machine Learning Cheatsheet helps you unlock the true potential of your machine learning journey. It is packed with quick references and insights for navigating various algorithms, techniques, and concepts, and it can be a trusted companion in all your machine learning projects. To benefit from it the most, however, you must work on a myriad of Data Science problems and take your skills to the next level.

If you're unsure how to kickstart your own ML project, subscribe to ProjectPro. With a subscription to our platform, you gain access to a treasure trove of solved projects in Data Science and Big Data. Dive into real-world scenarios, unravel complex problems, and deepen your understanding of ML concepts. Subscribe to ProjectPro today and start your practical learning journey that will empower you to excel in the world of data science and big data.

FAQs

1. Which algorithm is best for prediction in machine learning?

There is no one-size-fits-all answer, as the best algorithm for prediction in machine learning depends on various factors such as the nature of the data, the problem domain, available resources, and performance requirements. Different algorithms like Random Forests, Gradient Boosting, Support Vector Machines, or Neural Networks may excel in different scenarios.

2. How do you explain machine learning in layman's terms?

In simple terms, machine learning is like teaching a computer to learn and make predictions on its own. Instead of giving it explicit instructions, we show it examples and let it learn patterns from the data. It's like training a dog to recognize objects by showing it different toys and rewarding it when it gets them right.

3. What are the 5 best algorithms in data science?

The selection of the "best" algorithms in data science depends on the specific problem and context. However, some popular and widely used algorithms include Random Forests, Gradient Boosting, Support Vector Machines, Neural Networks (Deep Learning), and k-Nearest Neighbors (k-NN). Their effectiveness varies depending on the data and task at hand.

About the Author

Manika

Manika Nagpal is a versatile professional with a strong background in both Physics and Data Science. As a Senior Analyst at ProjectPro, she leverages her expertise in data science and writing to create engaging and insightful blogs that help businesses and individuals stay up-to-date with the