Spark SQL for Relational Big Data Processing

Learn about Spark SQL architecture, need for spark sql,spark sql features with spark sql example and short spark sql video tutorial.

With increasing usage of Spark in production, big data developers often combine various spark components to build sophisticated big data applications. Spark SQL is the one of the most used Apache Spark component in production. Spark SQL is the heart of predictive applications at many companies like Act Now, Concur, ATP, PanTera and Kelkoo. Spark SQL is one of the fastest growing component of Spark with approximately 67% increase in the number of Spark SQL users in 2016.

Apache Spark Survey of 1615 users from 900 different organizations conducted in 2016 to highlight the growth trends of Spark technology revealed that

- 40% of the respondents were using Spark SQL component in production in 2016 compared to 24% in 2015.

- 64 % of respondents were using more than 3 spark components in production

- 74 % of respondents were using more than 2 spark components in production

Streaming Data Pipeline using Spark, HBase and Phoenix

Downloadable solution code | Explanatory videos | Tech Support

Start Project

What is Spark SQL?

Spark SQL is Apache Spark’s go-to interface for working with structured and semi-structured data that helps integrate relational big data processing with Spark’s functional programming API. Structured data here implies any data format that has a schema (pre-defined set of fields for every record) like Hive tables, Parquet format or JSON data.

New Projects

Need for Spark SQL

Spark SQL has been developed to overcome the limitations posed by Hive running on top of Apache Spark. In Apache Hive, SQL developers can write queries in a SQL way which are converted to MapReduce jobs. Mapreduce is slower in nature and Hive uses mapreduce that lags in performance when it comes small and medium sized datasets of size less than 200 GB.

Another major problem with Apache Hive for relational data processing was that it does not have job resume capability. If you consider any interesting workflow pipeline it is likely to have long series of SQL statements, for instance, let’s consider a workflow pipeline with 2K lines of Apache Hive code having 50 SQL statements. If the processing dies in the middle because of some error or breakdown of the hadoop cluster then Apache Hive cannot resume processing from the breakpoint.

Explore SQL Database Projects to Add them to Your Data Engineer Resume.

Considering from a security aspect, Hive cannot drop encrypted databases. Only soft deletes are supported in Hive meaning the complete encryption zone can be deleted or moved into trash. The problem is the users encrypt the whole /user/hive/warehouse directory and then when users try to drop a database in hive it will fail because it is a part of the encryption zone. Spark SQL overcomes all the above limitations of Apache Hive for relational data processing.

Get FREE Access to Data Analytics Example Codes for Data Cleaning, Data Munging, and Data Visualization

Spark SQL vs. Hive QL- Advantages of Spark SQL over HiveQL

-

Faster Execution - Spark SQL is faster than Hive. For example, if it takes 5 minutes to execute a query in Hive then in Spark SQL it will take less than half a minute to execute the same query.

-

No Migration Hurdles - Though both HiveQL and Spark SQL follow the SQL way of writing queries, the syntax for both is completely different. Any organization using Hive since the last few years would face difficulties in writing all the Hive queries in Spark SQL to attain performance gains. However, Spark SQL allows HiveQL queries to be executed directly in it without having to make any changes to the code, making migration from HiveQL to Spark SQL easier for organizations to achieve performance gains. Without any hassle, developers can continue to write queries in HiveQL and during execution they will automatically get converted to Spark SQL.

- Supports Real-Time Processing – Unlike Hadoop Hive that supports only batch processing (where historical data is stored and later used for processing), Spark SQL supports real-time querying of data by using the metastore services of Hive to query the data stored and managed by Hive. Spark SQL does not require developers to create a new metastore as it can directly use the existing Hive metastore.

Here's what valued users are saying about ProjectPro

Gautam Vermani

Data Consultant at Confidential

Abhinav Agarwal

Graduate Student at Northwestern University

Not sure what you are looking for?

View All ProjectsHive vs. Spark SQL

Hive |

Spark SQL |

|

It is a framework. |

It is a library, so integrating it with other libraries in the spark ecosystem is easy. |

|

Supports only Hive query language (HQL) |

Supports both Spark SQL and HQL. |

|

Metastore has to be created to run hive queries. |

Metastore is optional and can use Hive metastore. |

|

Has its own JDBC server – Hive Thrift Server. |

It does not have its own JDBC server but uses Hive Thrift Server. |

|

Users have to explicitly declare the schema in Hive. |

Automatically infers the schema. |

|

HQL |

It has two API’s – SQL and Dataframe DSL (Domain Specific Language). |

Recommended Reading:

Spark SQL Features

i) SQL Integration with Spark

Spark SQL helps easily combine SQL queries with spark programs. Users can query structured data within Spark programs using a familiar dataframe API or through SQL. Below is a spark SQL example on how one can apply functions to results of SQL queries –

context =HiveContext (sc)

result =context.sql (“SELECT * FROM EMPLOYEE”)

Names= result. Map (lambda p: p.name)

ii) Uniform Data Access

Spark SQL supports allows users to read and write data in a variety of data formats including Hive, JSON, Parquet, ORC, Avro and JDBC. Users can connect to any data source the same way through Schema-RDD’s and also join data across multiple data sources. Below is a spark SQL example that shows query and join on different data sources –

context.jsonFile (“s3n ://”)

.registerTemTable (“json”)

result=context.sql ( “”” SELECT * FROM EMPLOYEE JOIN json ….”””)

iii) Performance and Scalability

Spark SQL performs much better than Hadoop because of in-memory computing. It includes a cost based optimizer, columnar storage and code generation for faster execution of queries. Spark SQL has various performance tuning options like memory settings, codegen, batch sizes and compression codes. Spark SQL is highly scalable and provides mid-query fault tolerant making it easily scalable to large jobs with thousands of nodes and multi-hour queries. It uses the same execution engine for interactive and long queries.

iv) Support for Creating User Defined Functions

Users can extend the Spark vocabulary by creating new column based used defined functions. If there is a new function requirement in Spark SQL and it is not available then a UDF can be created and directly executed in Spark SQL to achieve the desired output. For example, if there is no function to convert a given string to lower case in Spark SQL then an UDF (for example toLowerCase) can be created for this purpose. Every new UDF created must be registered with the list of functions.

v) Compatible with Hive

Spark SQL reuses Hive metastore and frontend providing total compatibility with the execution of Hive queries and UDF’s.

vi) Provides Standard Connectivity through JDBC or ODBC drivers

The server mode in Spark SQL provides standard JDBC or ODBC connectivity for BI tools like Tableau.

Get More Practice, More Big Data and Analytics Projects, and More guidance.Fast-Track Your Career Transition with ProjectPro

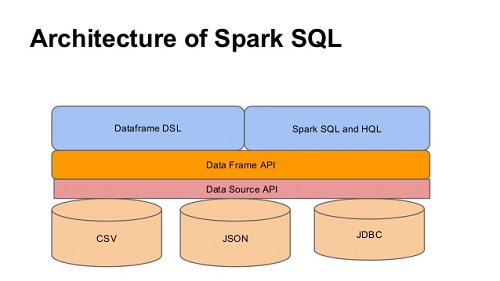

Overview of Spark SQL Architecture

The bottom layer in the Spark SQL architecture is the flexible data access (and store) which works through multiple data formats. Data is acquired from various input formats JSON, JDBC or CSV. DataSource API is used to read and store structured and semi-structured data into Spark SQL. DataSource API then fetches the data which is then converted to a Dataframe API (A dataframe is distributed collection of data organized into named columns). Dataframe API is equivalent to a relational table in SQL which converts the data that is read through the DataSource API into tabular column to perform SQL operations. Using the Dataframe DSL’s or Spark SQL or HQL, dataframe is processed to get the desired results.

You might be interested to read about Spark Architecture.

Spark SQL Use Cases

- Spark SQL finds great application in Twitter Sentiment Analysis where organizations can identify trending topics to create promotional campaigns and attract larger audience. This helps organizations to manage crisis, adjust their services and target marketing.

- For detecting credit card frauds and any other fraudulent banking transaction. For instance, your Credit Card is being currently swiped in California and after 15 minutes the same credit card is being swiped in India, then there is possibility of fraud which can be identified in real time using Spark SQL.

- Spark SQL also finds great application in the healthcare industry. One common use case of Spark SQL in the healthcare domain is for identifying genomic sequences. It is used to identify people who are predisposed to most of the common diseases, solve rare diseases and help doctors personalize medicines and their dosage to a particular individual.

- Used in real-time stock market analysis.

Build an Awesome Job Winning Project Portfolio with Solved End-to-End Big Data Projects

About the Author

ProjectPro

ProjectPro is the only online platform designed to help professionals gain practical, hands-on experience in big data, data engineering, data science, and machine learning related technologies. Having over 270+ reusable project templates in data science and big data with step-by-step walkthroughs,