Solved end-to-end Data Science & Big Data projects

Get ready to use Data Science and Big Data projects for solving real-world business problems

Big Data Project Categories

PySpark Projects

14 Projects

Spark SQL Projects

14 Projects

Spark GraphX Projects

3 Projects

Apache HBase Projects

3 Projects

Neo4j Projects

2 Projects

Apache Sqoop Projects

2 Projects

Trending Big Data Projects

PySpark Project to Learn Advanced DataFrame Concepts

In this PySpark Big Data Project, you will gain hands-on experience with advanced PySpark DataFrame functionality and performance optimization.

Learn How to Implement SCD in Talend to Capture Data Changes

In this Talend Project, you will build an ETL pipeline in Talend to capture data changes using SCD techniques.

Talend Real-Time Project for ETL Process Automation

In this Talend Project, you will learn how to build an ETL pipeline in Talend Open Studio to automate file loading and processing.

Data Science Project Categories

Deep Learning Projects

29 Projects

Data Science Projects in Retail & Ecommerce

28 Projects

Data Science Projects in R

22 Projects

Data Science Projects in Entertainment & Media

21 Projects

Neural Network Projects

11 Projects

H2O R Projects

1 Project

Trending Data Science Projects

Customer Market Basket Analysis using Apriori and FP-Growth algorithms

In this data science project, you will learn how to perform market basket analysis by applying the Apriori and FP-Growth algorithms, both based on association rule learning.

Multilabel Classification Project for Predicting Shipment Modes

In this multilabel classification project, you will build a machine learning model that predicts the appropriate mode of transport for each shipment, using a transport dataset with 2000 unique products. The project explores and compares four approaches to multilabel classification: naive independent models, classifier chains, natively multilabel models, and multilabel-to-multiclass approaches.

Deploy Transformer-BART Model on Paperspace Cloud

In this MLOps Project, you will learn how to deploy a Transformer BART model for abstractive text summarization on Paperspace Private Cloud.

Customer Love

Unlimited 1:1 Live Interactive Sessions

- 60-minute live session

Schedule 60-minute live interactive 1-to-1 video sessions with experts.

- No extra charges

Unlimited number of sessions with no extra charges. Yes, unlimited!

- We match you to the right expert

Give us 72 hours' notice along with a problem statement so we can match you to the right expert.

- Schedule recurring sessions

Schedule recurring sessions weekly, bi-weekly, or monthly.

- Pick your favorite expert

If you find a favorite expert, schedule all future sessions with them.

Use the 1-to-1 sessions to

- Troubleshoot your projects

- Customize our templates to your use-case

- Build a project portfolio

- Brainstorm architecture design

- Bring any project, even from outside ProjectPro

- Mock interview practice

- Career guidance

- Resume review

Latest Blogs

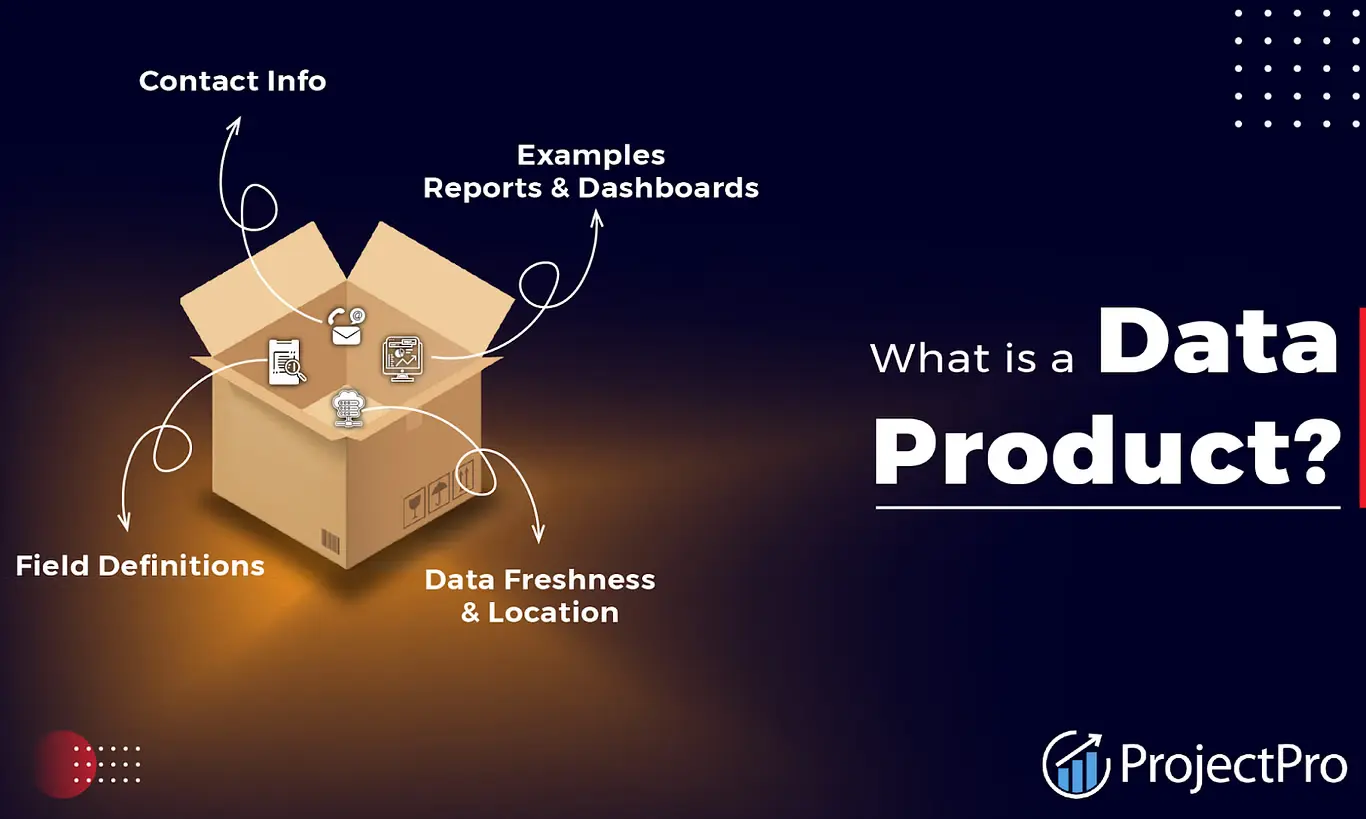

Data Products-Your Blueprint to Maximizing ROI

Explore ProjectPro's Blueprint on Data Products for Maximizing ROI to Transform your Business Strategy.

How to Become a Google Certified Professional Data Engineer?

Become a Google Certified Professional Data Engineer with confidence, armed with expert insights, curated resources, and a clear certification path.

Learning Optimizers in Deep Learning Made Simple

Understand the basics of optimizers in deep learning for building efficient algorithms.

We power Data Science & Data Engineering projects at

Join 115,000+ developers worldwide

Get a free demo