Understanding LLM Hallucinations and Preventing Them

A beginner-friendly handbook for understanding LLM hallucinations and exploring various prevention methods.

Studying LLM hallucinations can provide insights into the capabilities and limitations of AI systems and raise essential questions about the nature of language understanding and creativity. This blog is your gateway to the world of LLM hallucinations. You will explore what they are, their different types, methods for detecting them, and techniques to reduce them.


Picture this: You’re chatting with Google Assistant, and you use a simple prompt to ask about the weather in your city. But instead of the actual temperatures, you get a whimsical, impractical explanation like the one below.

You: "Hey, Google, what's the weather like in my city today?"

Google Assistant: "Ah, the weather! Allow me to consult my crystal ball... Hmm, it seems the skies are feeling rather indecisive today. You might wake up to a sprinkle of sunshine, followed by a downpour of confetti—wait, confetti? Scratch that; it seems my crystal ball's been attending too many parties lately! But fear not, for I predict a high chance of rainbows appearing at random intervals amidst the chaos of weather whims. Yes, you heard that right—rainbows on demand! So grab your sunglasses and umbrella because today's forecast is a delightful blend of whimsy and wonder!"

Voice assistants like Google Assistant can answer user queries with the help of Large Language Models (LLMs) running in the background. These sophisticated AI models, such as GPT-3, are trained on vast amounts of text data to understand queries and generate human-like responses (related models such as BERT and RoBERTa are used mainly for understanding text rather than generating it). But sometimes, these LLMs experience what we call "hallucinations": instances where the model generates unexpected, nonsensical, or incorrect output that deviates from the intended response. In the case of our weather inquiry, the whimsical forecast of confetti showers and rainbows on demand is a prime example of an LLM hallucination. Here is an interesting take on ‘LLM Hallucinations’ by Andrej Karpathy, previously Director of AI at Tesla.

LLM hallucinations can occur for various reasons, such as ambiguity in the input prompt, lack of context, or noise in the training data. While they can sometimes result in amusing or creative responses, they can also lead to inaccuracies or confusion for users. By recognizing and understanding the AI hallucination problem, we can better appreciate the capabilities and limitations of AI technology. So, join us on this journey. Together, we will dive deeper into what LLM hallucinations mean, look at examples of them, and explore ways to mitigate them effectively. Let’s get started!

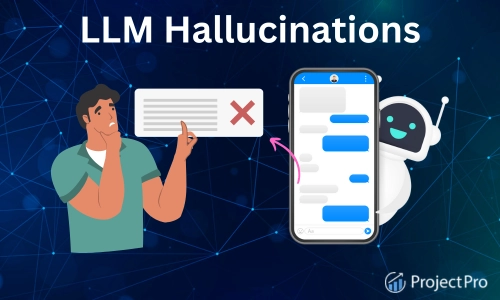

What are LLM Hallucinations?

LLM hallucinations are outputs from Large Language Models (LLMs) that appear meaningful but are nonsensical, inaccurate, surreal, or unexpected. They can range from bizarre sentences to entire narratives that seem coherent at first glance but contain inconsistencies, absurdities, or fantastical elements upon closer inspection.

Here's an example of an LLM hallucination:

Original prompt: "Describe a day at the beach."

Generated hallucination: "The sun danced in the sky, casting its golden rays upon the sandy shore. Seashells whispered secrets to the waves, and the breeze carried the laughter of children playing in the surf. Suddenly, a rainbow-colored unicorn emerged from the depths, its horn sparkling with magic as it soared through the air, trailing a stream of glitter behind it."

In this example, the hallucination starts with a typical beach scene but quickly veers into surreal territory with the appearance of a rainbow-colored unicorn. While the imagery may be vivid and imaginative, it departs from the expected coherence of a realistic description of a day at the beach, illustrating the unpredictability and creativity of LLM-generated text.

LLM hallucinations can arise due to various factors, including the complexity of the model's training data, the inherent limitations of machine learning algorithms, and the unpredictable nature of language generation. Hallucinations are often grouped into types based on how they show up in the output. Let us explore them in the next section.

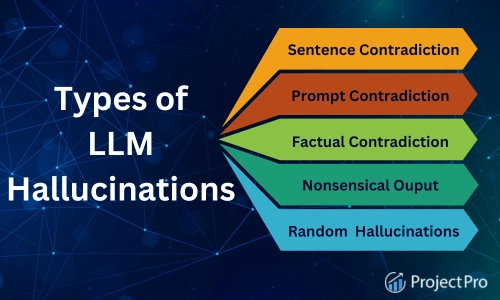

Types of LLM Hallucinations with Examples

LLM hallucinations can manifest in various forms, each presenting unique characteristics. Here are a few types of LLM hallucinations with examples:


Sentence Contradiction

LLMs generate sentences that directly oppose or conflict with statements made earlier in the generated text. This contradiction undermines the coherence and logical flow of the text, leading to confusion for readers or users.

For example, if an LLM states, "The sky is blue," and later says, "The sky is green," it creates a contradiction within the narrative.

A recent study on self-contradictory hallucinations of LLMs revealed that self-contradictions are prevalent across various LLMs; for example, they appear in 17.7% of all sentences generated by ChatGPT in open-domain text generation. Thus, sentence contradictions are fairly common in modern language models.


Prompt Contradiction

If generated sentences contradict the initial prompt provided to the LLM for text generation, they are labeled prompt contradictions. This contradiction indicates a failure of the LLM to adhere to the intended meaning or context established by the prompt.

For instance, if the prompt asks for a description of a sunny day, but the LLM describes a rainy scene, it contradicts the prompt's expectation.

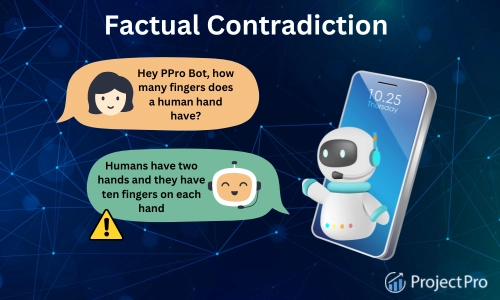

Factual Contradiction

LLMs often generate fictional or inaccurate information presented as factual within the generated text, resulting in a factual contradiction. This type of hallucination contributes to the spread of misinformation and undermines the reliability of LLM-generated content.

An example would be an LLM stating a false scientific fact as if it were true, such as "Humans have ten fingers on each hand."


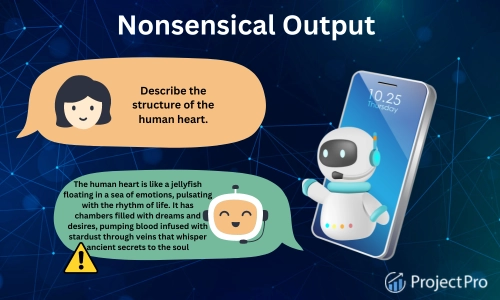

Nonsensical Output

Generated text lacks logical coherence and meaningful content, making it difficult to interpret or use. This type of hallucination renders the LLM-generated text unusable or unreliable for its intended purpose.

For example, the LLM might output a string of random words or nonsensical phrases that do not form a coherent sentence.


Irrelevant or Random LLM Hallucinations

LLMs produce irrelevant or random information unrelated to the input or desired output. This type of hallucination adds extraneous content that confuses users and detracts from the usefulness of the generated text.

An example might be the LLM inserting unrelated anecdotes or facts into a text where they don't belong.

The various types of hallucinations we've explored show how often they can occur. Hence, enhancing the accuracy and trustworthiness of LLM-generated responses is crucial. So, let's focus on how we can identify and detect these hallucinations.

Detecting LLM Hallucinations

Detecting LLM hallucinations is essential to ensure the reliability and trustworthiness of AI-generated outputs. By identifying these deviations, we can help prevent the spread of false information, maintain user confidence, and enhance the overall usability of AI applications across diverse domains. So, let us discuss the methods for detecting LLM hallucinations. We'll explore simple yet effective approaches and a few mathematical techniques to identify these hallucinations.

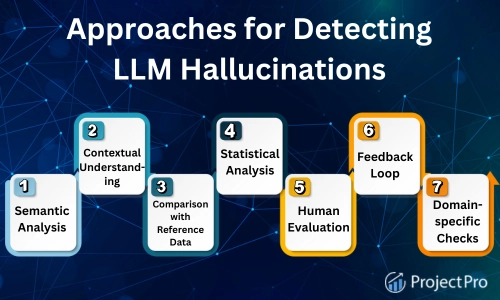

Straightforward Approaches for Detecting LLM Hallucinations

A simple approach for detecting LLM hallucinations involves analyzing the generated text to identify instances where the output deviates significantly from what is expected or desired. Here are some general methods for doing so:

1. Semantic Analysis

This approach involves the assessment of the semantic coherence and relevance of the generated text. We look for inconsistencies, nonsensical statements, or irrelevant information in the LLM outputs.

2. Contextual Understanding

The context provided by the input prompt or surrounding text must be analyzed carefully. It is crucial to determine if the generated output aligns with the intended context and its meaning.

3. Comparison with Reference Data

Compare the generated output with a reference dataset or ground truth to evaluate its accuracy and coherence. We look for discrepancies or deviations from expected results.

4. Statistical Analysis

Utilize statistical measures to assess the likelihood of hallucinations. This may include analyzing word frequencies, the token probabilities assigned by the language model, or other statistical properties of the generated text.

5. Human Evaluation

Engage human evaluators to review the generated output and provide feedback on its coherence, relevance, and accuracy. Human judgment can complement automated detection methods.

6. Feedback Loop

Implement a feedback loop where users or domain experts provide input on the quality of the generated text. Use this feedback to improve the LLM's performance and reduce hallucinations iteratively.

7. Domain-Specific Checks

Incorporate domain-specific checks or rules to validate the accuracy and relevance of the generated text. This may involve verifying factual information or ensuring adherence to specific guidelines or constraints.
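As a rough illustration of comparison with reference data, the Python sketch below flags generated sentences that share few words with a trusted reference text. Word overlap is a deliberately crude stand-in for semantic comparison (real systems often use embeddings or natural language inference models), and the function name `unsupported_sentences` and the 0.5 threshold are hypothetical choices, not a standard API.

```python
import re


def _tokens(text: str) -> set[str]:
    """Lowercase word tokens with punctuation removed."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def unsupported_sentences(generated: str, reference: str, threshold: float = 0.5) -> list[str]:
    """Return generated sentences whose word overlap with the reference falls below threshold.

    Support = fraction of a sentence's distinct words that also occur in the reference.
    """
    ref_tokens = _tokens(reference)
    flagged = []
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", generated.strip()):
        words = _tokens(sentence)
        if not words:
            continue
        support = len(words & ref_tokens) / len(words)
        if support < threshold:
            flagged.append(sentence)
    return flagged


reference = "The Eiffel Tower is in Paris. It was completed in 1889."
generated = "The Eiffel Tower is in Paris. A unicorn painted it purple last week."
print(unsupported_sentences(generated, reference))
```

Here the first sentence is fully supported by the reference, while the unicorn sentence shares only the word "it" and gets flagged.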

By combining these methods, data scientists and researchers can effectively detect LLM hallucinations and implement strategies to mitigate their occurrence, thereby improving the reliability and usefulness of LLM-generated text. Additionally, they can utilize mathematical tools to effectively gauge the presence or absence of these LLM hallucinations, as mentioned below.


Mathematical Techniques for Detecting LLM Hallucinations

Efficient and accurate detection of hallucinations is vital for mitigating their impact. In recent years, several mathematical techniques have emerged for this purpose. Let's explore some of these methods, focusing on their strengths and weaknesses.


Log Probability

This method, introduced in the paper "Looking for a Needle in a Haystack: A Comprehensive Study of Hallucinations in Neural Machine Translation," calculates the length-normalized log probability of the generated translation, i.e., the average log probability the model assigns to each of its tokens. A lower log probability suggests lower model confidence, indicating a higher likelihood of hallucination. However, it may struggle to identify hallucinations that mimic patterns in the training data, and it requires access to the model's token-level probabilities.
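The length-normalized score is simply the average of the per-token log probabilities, (1/L) · Σ log p(y_k | y_<k, x) for an output of L tokens. A minimal Python sketch, assuming you already have those per-token log probabilities from your model; the -1.5 threshold and the function names are hypothetical and a real threshold would need calibration on your own data:

```python
import math


def seq_logprob(token_logprobs: list[float]) -> float:
    """Length-normalized sequence log probability: the mean per-token log probability."""
    if not token_logprobs:
        raise ValueError("need at least one token log probability")
    return sum(token_logprobs) / len(token_logprobs)


def looks_hallucinated(token_logprobs: list[float], threshold: float = -1.5) -> bool:
    """Flag an output whose length-normalized log probability falls below a (hypothetical) threshold."""
    return seq_logprob(token_logprobs) < threshold


# Per-token probabilities converted to natural-log probabilities.
confident = [math.log(p) for p in (0.9, 0.8, 0.95)]
shaky = [math.log(p) for p in (0.9, 0.05, 0.1)]
print(f"{seq_logprob(confident):.3f}", looks_hallucinated(confident))
print(f"{seq_logprob(shaky):.3f}", looks_hallucinated(shaky))
```

Averaging rather than summing keeps long outputs from being penalized just for having more tokens.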


G-Eval

G-Eval, from the paper "G-EVAL: NLG Evaluation using GPT-4 with Better Human Alignment," leverages LLMs with Chain-of-Thought (CoT) and form-filling paradigms to evaluate the quality of generated text, such as article summaries. While it provides human-readable justifications for its judgments, it's limited to closed-domain hallucinations and consumes many tokens, which reduces its cost efficiency.
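One step of G-Eval that is easy to show in code is its final scoring: rather than taking the single score the evaluator LLM writes, it weights each candidate score by the probability the LLM assigns to it. The sketch below assumes those probabilities are already available; the prompt template is a hypothetical consistency rubric modeled on the paper's "criteria plus evaluation steps" format, not its exact wording, and the probabilities are made up for illustration.

```python
# Hypothetical rubric in a "criteria + evaluation steps + form" style.
G_EVAL_PROMPT = """You will be given a source document and a summary of it.

Evaluation criterion:
Consistency (1-5): every claim in the summary must be supported by the source.

Evaluation steps:
1. Read the source document and note its main facts.
2. Check each claim in the summary against those facts.
3. Assign a consistency score from 1 to 5.

Source document:
{source}

Summary:
{summary}

Consistency score (1-5):"""


def g_eval_score(score_probs: dict[int, float]) -> float:
    """Probability-weighted score: sum of p(s) * s over the candidate scores s.

    Probabilities are renormalized in case they do not sum exactly to 1.
    """
    total = sum(score_probs.values())
    if total <= 0:
        raise ValueError("score probabilities must sum to a positive value")
    return sum(score * p for score, p in score_probs.items()) / total


prompt = G_EVAL_PROMPT.format(source="Paris is the capital of France.",
                              summary="Paris is the capital of Spain.")
print(prompt)

# Illustrative probabilities for the score tokens 1-5, not from a real model.
probs = {1: 0.05, 2: 0.10, 3: 0.20, 4: 0.40, 5: 0.25}
print(round(g_eval_score(probs), 2))
```

The weighted average yields fine-grained scores (such as 3.7) instead of ties at whole numbers.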


SelfCheckGPT

This technique, from "SELFCHECKGPT: Zero-Resource Black-Box Hallucination Detection for Generative Large Language Models," samples several responses to the same prompt and checks whether they are consistent with the original response. It employs metrics like BERTScore, MQAG (question answering), and n-gram models to detect divergences and contradictions. However, it's costly due to repeated sampling and computation-intensive BERTScore calculations, and it can only detect open-domain hallucinations.
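SelfCheckGPT's n-gram variant can be sketched in a few lines. The idea: if the model really "knows" a fact, it should reappear across independently sampled responses, so the words of an unsupported sentence will be rare under a language model fitted to those samples. This simplified Python version fits a unigram model with add-one smoothing on the samples plus the original response and scores each sentence by its average negative log probability. The hard-coded samples stand in for extra responses you would obtain by re-querying the LLM at a nonzero temperature, and `selfcheck_unigram` is a hypothetical name.

```python
import math
import re
from collections import Counter


def _words(text: str) -> list[str]:
    return re.findall(r"[a-z0-9']+", text.lower())


def selfcheck_unigram(response: str, samples: list[str]) -> list[tuple[str, float]]:
    """Score each response sentence by its average negative log probability under a
    unigram model fitted on the sampled passages plus the response itself.

    Higher score = words rarely seen across samples = more likely hallucinated.
    """
    counts = Counter()
    for passage in samples + [response]:
        counts.update(_words(passage))
    denom = sum(counts.values()) + len(counts)  # add-one smoothing denominator
    scored = []
    for sentence in re.split(r"(?<=[.!?])\s+", response.strip()):
        words = _words(sentence)
        if not words:
            continue
        nll = [-math.log((counts[w] + 1) / denom) for w in words]
        scored.append((sentence, sum(nll) / len(nll)))
    return scored


response = "Paris is the capital of France. Paris has a population of 90 million."
samples = [
    "Paris is the capital of France.",
    "The capital of France is Paris.",
    "France's capital city is Paris.",
]
for sentence, score in selfcheck_unigram(response, samples):
    print(f"{score:.2f}  {sentence}")
```

The population claim never appears in the samples, so its sentence receives the higher score.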


ChainPoll

ChainPoll, introduced in "ChainPoll: A High Efficacy Method for LLM Hallucination Detection," employs the Chain-of-Thought technique to detect both closed- and open-domain hallucinations. According to its authors, it's more accurate and cost-effective than many alternatives, and unlike SelfCheckGPT it doesn't require an external model. Additionally, it offers versions tailored for different types of hallucinations and performs well on realistic benchmark datasets like RealHall.
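The core of ChainPoll, as we understand it, is to ask a judge LLM several times, with a chain-of-thought prompt, whether a completion contains hallucinations, and to report the fraction of "yes" verdicts as the score. The Python sketch below assumes a hypothetical `judge` callable that sends a prompt to an LLM and returns its text reply; the prompt wording and the default of five polls are illustrative assumptions, not the paper's exact settings.

```python
import itertools
from typing import Callable

# Illustrative chain-of-thought judge prompt (not the paper's exact wording).
COT_JUDGE_PROMPT = (
    "Does the following completion contain hallucinations? "
    "Think step by step, then end your answer with a single word: yes or no.\n\n"
    "Completion: {completion}"
)


def chainpoll_score(completion: str, judge: Callable[[str], str], n: int = 5) -> float:
    """Poll a judge LLM n times and return the fraction of 'yes' (hallucination) verdicts."""
    if n < 1:
        raise ValueError("n must be at least 1")
    prompt = COT_JUDGE_PROMPT.format(completion=completion)
    yes_votes = 0
    for _ in range(n):
        reply = judge(prompt).strip().lower().rstrip(".!")
        # The reasoning comes first; only the final word is read as the verdict.
        if reply.split()[-1:] == ["yes"]:
            yes_votes += 1
    return yes_votes / n


# Stand-in judge that alternates verdicts; a real one would call an LLM API.
replies = itertools.cycle([
    "The source gives 1889, not 1999, so: yes",
    "The completion matches the source. No.",
])
print(chainpoll_score("The Eiffel Tower was completed in 1999.", lambda p: next(replies), n=4))
```

Polling several times smooths out the randomness of any single chain-of-thought run, at the cost of n judge calls per completion.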

Each mathematical technique for detecting LLM hallucinations has its advantages and drawbacks. While some prioritize accuracy and efficiency, others offer interpretability or broader applicability across different types of hallucinations. Selecting the most suitable method depends on the specific requirements and constraints of the application context.

We've all heard the saying, "Prevention is better than cure." While doctors use it to emphasize disease prevention in humans, it's equally relevant for reducing hallucinations in LLMs. Just as humans adopt healthy habits, LLMs can benefit from strategies to reduce LLM hallucinations. Let's explore these methods together in the next section.

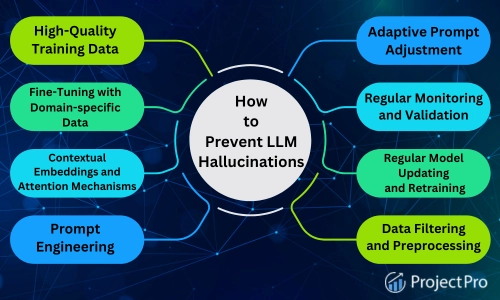

How to Prevent LLM Hallucinations?

Preventing LLM hallucinations involves implementing strategies and techniques during the model's training, fine-tuning, and deployment phases. Here are some approaches to consider:

1. High-Quality Training Data

Like humans, LLMs must also eat healthily. So, ensure that the training dataset fed to the LLM is comprehensive, diverse, and representative of the target domain. High-quality data helps the model learn accurate patterns and reduces the likelihood of hallucinations.

2. Prompt Engineering

Design clear and specific prompts to guide the LLM's generation process. Well-crafted prompts provide context and constraints that help steer the model toward producing relevant and coherent output.
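For example, a prompt for the weather assistant from the introduction could restrict the model to retrieved forecast data and give it an explicit way to decline instead of improvising. This is a minimal sketch; the wording, the function name `build_grounded_prompt`, and the sample forecast are hypothetical.

```python
def build_grounded_prompt(question: str, context: str) -> str:
    """Build a prompt that confines the model to the supplied context and
    gives it an explicit way to decline instead of guessing."""
    return (
        "You are a careful assistant. Answer the question using ONLY the context below.\n"
        "If the context does not contain the answer, reply exactly: \"I don't know.\"\n"
        "Do not invent facts, numbers, or sources.\n\n"
        f"Context:\n{context.strip()}\n\n"
        f"Question: {question.strip()}\n"
        "Answer:"
    )


print(build_grounded_prompt(
    "What's the weather like in my city today?",
    "Forecast for today: 31°C, partly cloudy, 10% chance of rain.",
))
```

Giving the model a sanctioned fallback answer matters: without one, a prompt that forbids guessing still leaves the model no acceptable way to say it lacks the information.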

3. Data Filtering and Preprocessing

Cleanse the training data to remove noise, biases, and irrelevant information. Preprocess the data to ensure consistency and quality, reducing the risk of introducing spurious patterns that may lead to hallucinations.
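A minimal cleaning pass might look like the Python sketch below. It strips leftover HTML tags, normalizes whitespace, drops records too short to carry signal, and removes case-insensitive exact duplicates. The function name and the five-word minimum are arbitrary assumptions; production pipelines typically add near-duplicate detection, language filtering, and quality classifiers on top.

```python
import re


def clean_corpus(records: list[str], min_words: int = 5) -> list[str]:
    """Strip HTML tags, normalize whitespace, drop short records, and remove
    case-insensitive exact duplicates (keeping the first occurrence)."""
    seen = set()
    cleaned = []
    for text in records:
        text = re.sub(r"<[^>]+>", " ", text)      # remove leftover markup
        text = re.sub(r"\s+", " ", text).strip()  # collapse whitespace
        if len(text.split()) < min_words:
            continue  # too short to carry useful signal
        key = text.lower()
        if key in seen:
            continue  # duplicate of an earlier record
        seen.add(key)
        cleaned.append(text)
    return cleaned


raw = [
    "The Eiffel Tower is in Paris, France.",
    "the  eiffel tower is in   paris, france.",
    "<p>Buy now!!!</p>",
    "Click here",
    "Water boils at 100 °C at sea level.",
]
print(clean_corpus(raw))
```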

4. Fine-Tuning with Domain-Specific Data

Fine-tune the LLM using domain-specific data to adapt it to the target domain's nuances and requirements. Fine-tuning enhances the model's performance and reduces the likelihood of generating irrelevant or inaccurate text.


5. Regular Monitoring and Validation

Continuously monitor the LLM's performance during training and deployment. To detect and address potential hallucinations early, validate the generated output using automated checks, human evaluation, or both.
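In deployment, monitoring can be as simple as tracking what share of recent outputs failed your validation checks. The sketch below (class name, window size, and alert rate are all hypothetical) keeps a sliding window of pass/fail results and signals when the failure rate rises above a threshold; the `flagged` values would come from whichever automated checks or human reviews you use.

```python
from collections import deque


class HallucinationMonitor:
    """Track the share of recent outputs that failed validation and signal
    when it exceeds an alert threshold."""

    def __init__(self, window: int = 100, alert_rate: float = 0.05):
        self.results = deque(maxlen=window)  # oldest results drop off automatically
        self.alert_rate = alert_rate

    def record(self, flagged: bool) -> None:
        self.results.append(flagged)

    @property
    def failure_rate(self) -> float:
        return sum(self.results) / len(self.results) if self.results else 0.0

    def should_alert(self) -> bool:
        return self.failure_rate > self.alert_rate


monitor = HallucinationMonitor(window=10, alert_rate=0.2)
for flagged in [False] * 8 + [True] * 3:
    monitor.record(flagged)
print(monitor.failure_rate, monitor.should_alert())
```

Because the window holds only the last 10 results, the first passing output has already dropped out, leaving 3 failures in 10 (a rate of 0.3).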

6. Adaptive Prompt Adjustment

Dynamically adjust prompts based on model output feedback and user interactions. Adaptive prompts help steer the LLM towards generating more accurate and relevant text over time.
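One narrow, per-request form of adaptive prompting is to retry with progressively stricter instructions whenever an automated check rejects the output. In the sketch below, `generate` and `is_valid` are hypothetical callables standing in for your LLM call and your validation check, and the escalation hints are illustrative; adapting prompts over time from user feedback would need additional logging and analysis not shown here.

```python
from typing import Callable

# Hypothetical escalation steps, applied one per failed attempt.
STRICTER_HINTS = [
    "Answer only with facts stated in the context.",
    "If you are not certain, reply exactly: \"I don't know.\"",
]


def generate_with_adaptive_prompt(
    base_prompt: str,
    generate: Callable[[str], str],
    is_valid: Callable[[str], bool],
) -> str:
    """Retry generation, appending one stricter instruction after each rejected output."""
    prompt = base_prompt
    output = generate(prompt)
    for hint in STRICTER_HINTS:
        if is_valid(output):
            return output
        prompt = f"{prompt}\n{hint}"
        output = generate(prompt)
    # Fall back to an explicit refusal rather than return an output that failed validation.
    return output if is_valid(output) else "I don't know."


# Stand-in model: stays whimsical until it is told to stick to the context.
def fake_generate(prompt: str) -> str:
    return "31°C, partly cloudy." if "only with facts" in prompt else "Confetti showers!"


def no_confetti(output: str) -> bool:
    return "confetti" not in output.lower()


print(generate_with_adaptive_prompt("What's the weather today?", fake_generate, no_confetti))
```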

7. Contextual Embeddings and Attention Mechanisms

Incorporate contextual embeddings and attention mechanisms into the model architecture to capture long-range dependencies and contextual information. These mechanisms improve the model's ability to understand and generate coherent text.
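Transformer-based LLMs already build on attention, so for most practitioners this point is about choosing and configuring such models rather than adding attention from scratch. For intuition, here is a plain-Python sketch of scaled dot-product attention, the core operation: each query takes a weighted average of the value vectors, with weights given by softmax(q·k / √d). It is illustrative only and omits the learned projections, multiple heads, and masking used in real models.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """For each query vector, return the softmax(q·k / sqrt(d))-weighted average of the values."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


# A query aligned with the first key attends mostly to the first value.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
print(scaled_dot_product_attention([[10.0, 0.0]], keys, values))
```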

8. Regular Model Updating and Retraining

Periodically update the model with new data and retrain it to adapt to evolving language patterns and domain-specific changes. Regular updating and retraining help maintain the model's relevance and effectiveness while reducing the risk of generating outdated or inaccurate text.

Here is one more bonus tip by Yann LeCun, the Turing-Award-winning French-American computer scientist.

By implementing these preventive measures, AI engineers can reduce the occurrence of LLM hallucinations and enhance the reliability and usefulness of Generative AI. Additionally, hands-on experience with LLM projects is crucial, and it comes with practice. You may receive projects at work one at a time, but we have a secret key to unlock a list of practical projects. Find out more about it in the final section of our blog.

Explore ProjectPro’s LLM Projects to Learn How to Prevent LLM Hallucinations

Mitigating LLM hallucinations can be daunting, but it becomes a breeze with ProjectPro's curated projects. Our repository, crafted by seasoned industry professionals, offers a diverse range of solved projects in data science and big data. Whether delving into advanced topics or mastering the basics, ProjectPro has you covered with its customized learning paths feature. Additionally, the platform offers free doubt sessions for those crucial moments when you're stuck and need help breaking through the loop of code. Don't wait any longer—join today and unlock your full potential!


FAQs

1. How to avoid LLM hallucinations?

To avoid LLM hallucinations, employ diverse training data, refine prompt engineering, and implement post-generation validation checks to ensure coherence and accuracy.

2. How to mitigate LLM Hallucinations with a Metrics First Evaluation Framework?

Mitigate LLM hallucinations with a Metrics First Evaluation Framework by establishing clear evaluation criteria, leveraging quantitative metrics like log probability, SelfCheckGPT, G-Eval, and ChainPoll, and integrating human judgment for enhanced assessment.

About the Author

Manika

Manika Nagpal is a versatile professional with a strong background in both Physics and Data Science. As a Senior Analyst at ProjectPro, she leverages her expertise in data science and writing to create engaging and insightful blogs that help businesses and individuals stay up-to-date with the