Daily Prices Project

6 Answer(s)

DeZyre Support

Hi Sachin,

It looks like the MySQL JDBC driver is not available in your setup. Please download the "mysql-jdbc.jar" connector (MySQL Connector/J) from this link: https://dev.mysql.com/downloads/connector/j/

Copy the downloaded jar to /usr/lib/sqoop/lib/ and rerun your import job.

Mar 05 2016 08:36 PM

SACHIN

Mar 06 2016 12:50 AM

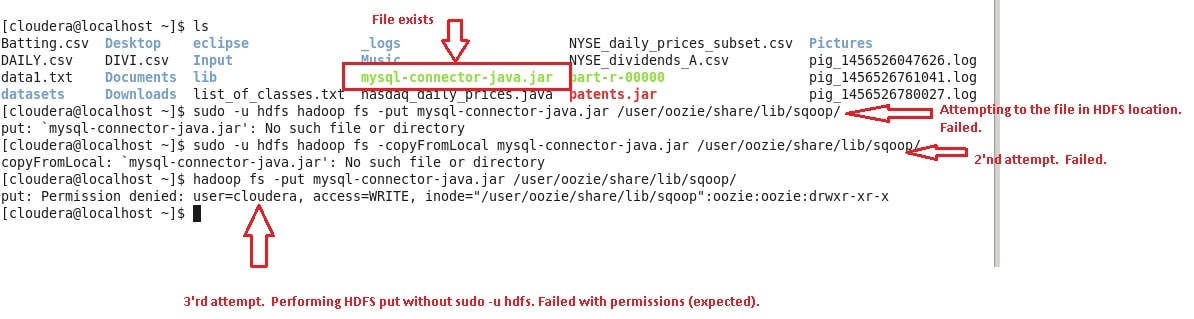

The mysql-connector jar already exists in the path mentioned above; I am attaching a snapshot. It is still not working. Does an Oozie workflow launched from the Web UI use jars from HDFS or from the local Linux file system?

Abhijit-Dezyre Support

Hi Sachin,

As you are using Cloudera CDH 4, please upload the connector to HDFS at /user/oozie/share/lib/sqoop/

Command - hadoop fs -put location_of_connector /user/oozie/share/lib/sqoop/

If you get a permission error, please prefix the above command with "sudo -u hdfs".
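A minimal sketch of the upload as a shell function, assuming the `hadoop` CLI is on the PATH. The function name `upload_connector` and the `USE_HDFS_USER` switch are hypothetical helpers for illustration, not part of Hadoop or Oozie. It checks that the local file exists, runs the put, and then lists the target directory so you can confirm the jar arrived.

```shell
# HDFS directory named in the answer above.
SHARELIB_DIR="/user/oozie/share/lib/sqoop/"

# Upload a local jar to the Oozie Sqoop share lib, then list the directory.
# Set USE_HDFS_USER=1 to run both commands with the "sudo -u hdfs" prefix.
upload_connector() {
  jar="$1"
  # Fail early if the local file is missing.
  if [ ! -f "$jar" ]; then
    echo "local file not found: $jar" >&2
    return 1
  fi
  # Fail early if the hadoop CLI is not installed on this machine.
  if ! command -v hadoop >/dev/null 2>&1; then
    echo "hadoop CLI not found on PATH" >&2
    return 2
  fi
  prefix=""
  if [ "${USE_HDFS_USER:-0}" = "1" ]; then
    prefix="sudo -u hdfs"
  fi
  # $prefix is left unquoted on purpose so it splits into separate words.
  $prefix hadoop fs -put "$jar" "$SHARELIB_DIR" &&
    $prefix hadoop fs -ls "$SHARELIB_DIR"
}
```

For example, `USE_HDFS_USER=1 upload_connector location_of_connector` would run the prefixed form of the command, with `location_of_connector` replaced by the real local path of the jar.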

This will resolve the issue.

Please let me know if you face any issue.

Thanks.

Mar 06 2016 02:22 AM

SACHIN

Mar 07 2016 08:42 PM

Hi Abhijit,

I am sorry, but unfortunately that solution is not working either. I have attached a snapshot of the error. Please take a look at it.

As the 'ls' command shows, the MySQL driver file exists on the local Linux file system.

Next, I tried the "put" with the "sudo -u hdfs" prefix, but it fails with 'file not found'. Once we use sudo -u hdfs, does it expect the file to be picked up from some other location?

Thanks

SACHIN

Finally got it working. I had to jump through some hoops to get the jar file to the destination.

1. Ran 'su' to become the superuser.

2. Copied the jar file to the Linux file system path '/usr/share'.

3. Ran the "put" with the "sudo -u hdfs" prefix, and the jar was successfully loaded to the HDFS destination "/user/oozie/share/lib/sqoop/".

4. Ran the Oozie workflow from the Web UI, and the job executed successfully.
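The staging part of the steps above can be sketched roughly as follows. One plausible explanation for the earlier 'file not found' error, which is an assumption the thread does not confirm, is that the hdfs user could not read the jar in its original directory, so copying it to a world-readable location helped. The function name `stage_jar` is a hypothetical helper, and the jar file name in the usage comment is only an example.

```shell
# Copy a jar into a staging directory and make it readable by all users,
# so that another local account (such as hdfs) can open it.
# Prints the path of the staged copy on success.
stage_jar() {
  src="$1"        # local path of the connector jar
  stage_dir="$2"  # staging directory, e.g. /usr/share as in step 2
  cp "$src" "$stage_dir/" || return 1
  staged="$stage_dir/$(basename "$src")"
  chmod a+r "$staged" || return 1
  printf '%s\n' "$staged"
}

# Usage (as the superuser, since /usr/share is normally not writable otherwise):
#   staged=$(stage_jar ./mysql-connector.jar /usr/share)
#   sudo -u hdfs hadoop fs -put "$staged" /user/oozie/share/lib/sqoop/
```

The upload itself is left as a comment so that the command from step 3 can be reviewed before it is run against the cluster.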

Thank you

-Sachin

Mar 07 2016 08:54 PM

Abhijit-Dezyre Support

Hi Sachin,

Thanks for your update.

@sachin, please check my previous answer: I said to put the jar file in HDFS only, not on the local drive.

Thanks.

Mar 07 2016 10:30 PM
