What will you learn from this Hadoop MapReduce Tutorial?

This Hadoop tutorial aims to give Hadoop developers a good start in MapReduce programming through hands-on experience in developing their first Hadoop application, WordCount. The WordCount example is the standard program that Hadoop developers begin their hands-on programming with. This tutorial shows how to implement the WordCount example in MapReduce to count the number of occurrences of each word in an input file.

Prerequisites for this Hadoop WordCount Example Tutorial

- Hadoop installation must be completed successfully.

- A single-node Hadoop cluster must be configured and running.

- Eclipse must be installed, as the MapReduce WordCount example will be run from the Eclipse IDE.

Word Count - Hadoop MapReduce Example: How Does It Work?

The Hadoop WordCount program runs in three phases:

- Mapper Phase

- Shuffle Phase

- Reducer Phase

Hadoop WordCount Example - Mapper Phase Execution

In the map phase, the text of the input file is tokenized into words, and a key-value pair is formed for every word in the file: the key is the word and the value is 1.

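For instance, consider the sentence “An elephant is an animal”. The mapper phase of the WordCount example splits this string into individual tokens, i.e. words. Here the sentence is split into 5 tokens (one per word), each paired with the value 1, as shown below. (For simplicity, the pairs are shown in lowercase; the mapper code later in this tutorial does not change case, so it would count “An” and “an” as different words.)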

Key-value pairs produced by the map phase:

(an,1)

(elephant,1)

(is,1)

(an,1)

(animal,1)
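The map step above can be sketched in plain, in-memory Java. This is an illustration, not the Hadoop API: the class name `MapPhaseSketch` is hypothetical, and the sketch assumes words are lowercased so that “An” and “an” share a key, matching the pairs listed above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.StringTokenizer;

// In-memory sketch of the map phase (not the Hadoop API):
// every token of the line becomes a (word, 1) pair.
class MapPhaseSketch {

    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        // Assumption for this illustration: lowercase the text so "An" and "an" match
        String normalized = line.toLowerCase(Locale.ROOT);
        StringTokenizer tokenizer = new StringTokenizer(normalized);
        while (tokenizer.hasMoreTokens()) {
            pairs.add(Map.entry(tokenizer.nextToken(), 1));
        }
        return pairs;
    }

    public static void main(String[] args) {
        for (Map.Entry<String, Integer> pair : map("An elephant is an animal")) {
            System.out.println("(" + pair.getKey() + "," + pair.getValue() + ")");
        }
    }
}
```

Running `main` prints the same five pairs listed above, in input order; no counting happens yet.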

Hadoop WordCount Example - Shuffle Phase Execution

After the map phase completes, the shuffle phase runs automatically: the framework takes the key-value pairs generated by the map phase, sorts them by key (alphabetical order for these words) and brings all pairs with the same key together so that a single reducer receives them. After the shuffle phase, the pairs from our example look like this:

(an,1)

(an,1)

(animal,1)

(elephant,1)

(is,1)
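A rough in-memory equivalent of the sort-and-group step is shown below. It is a sketch under stated assumptions, not Hadoop's shuffle implementation: the class name `ShufflePhaseSketch` is hypothetical, and a `TreeMap` stands in for Hadoop's sort. Hadoop sorts `Text` keys by their bytes, which for plain lowercase ASCII words like these matches alphabetical order. The sketch groups values per key rather than only sorting, because a reducer receives each key once together with all of its values.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory sketch of the shuffle/sort step (not Hadoop's implementation):
// pairs are sorted by key and the values of identical keys are collected together.
class ShufflePhaseSketch {

    static TreeMap<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> mapOutput) {
        // TreeMap keeps its keys in natural (sorted) String order
        TreeMap<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : mapOutput) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    public static void main(String[] args) {
        // The map-phase output from the example sentence
        List<Map.Entry<String, Integer>> mapOutput = List.of(
                Map.entry("an", 1), Map.entry("elephant", 1), Map.entry("is", 1),
                Map.entry("an", 1), Map.entry("animal", 1));
        System.out.println(shuffle(mapOutput)); // {an=[1, 1], animal=[1], elephant=[1], is=[1]}
    }
}
```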

Hadoop WordCount Example - Reducer Phase Execution

In the reduce phase, the values for each key are added up to find the number of occurrences of that word. It acts as an aggregation phase for the keys generated by the map phase: the reducer takes the output of the shuffle phase as input and reduces the key-value pairs to unique keys with their values summed. In our example sentence, “An elephant is an animal.”, “an” is the only word that appears twice. After the reduce phase of the MapReduce WordCount program executes, “an” appears as a key only once, with a count of 2, as shown below:

(an,2)

(animal,1)

(elephant,1)

(is,1)
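The summing logic of the reduce phase can be sketched in plain Java as follows. The class and method names (`ReducePhaseSketch`, `reduceAll`) are hypothetical and no Hadoop classes are used; the inner loop mirrors the loop in the tutorial's Reduce class, with `Integer` in place of `IntWritable`.

```java
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory sketch of the reduce step (not the Hadoop API): each key arrives
// once with all of its values, and the values are summed.
class ReducePhaseSketch {

    // Walk the values for one key and add them up
    static int reduce(Iterator<Integer> values) {
        int sum = 0;
        while (values.hasNext()) {
            sum += values.next();
        }
        return sum;
    }

    // Apply reduce() to every grouped key; TreeMap keeps the output sorted by word
    static Map<String, Integer> reduceAll(Map<String, List<Integer>> grouped) {
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> entry : grouped.entrySet()) {
            counts.put(entry.getKey(), reduce(entry.getValue().iterator()));
        }
        return counts;
    }

    public static void main(String[] args) {
        // The shuffle-phase output for the example sentence
        Map<String, List<Integer>> grouped = Map.of(
                "an", List.of(1, 1), "animal", List.of(1),
                "elephant", List.of(1), "is", List.of(1));
        reduceAll(grouped).forEach((word, count) ->
                System.out.println("(" + word + "," + count + ")"));
    }
}
```

Running `main` prints (an,2), (animal,1), (elephant,1) and (is,1), one per line.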

This is how the MapReduce WordCount program counts the number of occurrences of each word in an input file. Note that the map phase runs over the entire input file, not just a single sentence: map() is called once per line, so if the input file has 15 lines, the mapper splits the words of all 15 lines and emits the initial key-value pairs for the entire dataset. The reduce function runs only after the whole map phase has completed successfully.
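The ordering described above (map every line first, then shuffle, then reduce) can be simulated in a single Java process. This is only a sketch of the data flow, not of how Hadoop schedules tasks across a cluster; the class name `WordCountFlowSketch` and the two input lines are hypothetical, and, like the tutorial's mapper, the sketch does not change case or strip punctuation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Single-process sketch of the whole WordCount flow (not Hadoop's scheduler):
// the map step runs over every line first; only then are pairs grouped and summed.
class WordCountFlowSketch {

    static Map<String, Integer> wordCount(List<String> lines) {
        // 1. Map phase: one call per input line, emitting (word, 1) for every token
        List<Map.Entry<String, Integer>> mapOutput = new ArrayList<>();
        for (String line : lines) {
            StringTokenizer tokenizer = new StringTokenizer(line);
            while (tokenizer.hasMoreTokens()) {
                mapOutput.add(Map.entry(tokenizer.nextToken(), 1));
            }
        }
        // 2. Shuffle: sort by key and group the values of identical keys
        TreeMap<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : mapOutput) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        // 3. Reduce: sum the values for each key
        Map<String, Integer> counts = new TreeMap<>();
        grouped.forEach((word, ones) ->
                counts.put(word, ones.stream().mapToInt(Integer::intValue).sum()));
        return counts;
    }

    public static void main(String[] args) {
        // Two hypothetical input lines
        List<String> lines = List.of("an elephant is an animal", "an animal is big");
        System.out.println(wordCount(lines)); // {an=3, animal=2, big=1, elephant=1, is=2}
    }
}
```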


Running the WordCount Example in Hadoop MapReduce using Java Project with Eclipse

Now, let’s create the WordCount Java project for Hadoop in the Eclipse IDE. The same steps apply whether or not you are working on the Cloudera VM; creating the Java project works the same way in any environment.

Step 1 –

Create a Java project named “Sample WordCount”:

File > New > Project > Java Project > Next.

Enter "Sample WordCount" as the project name and click "Finish".

Step 2 -

Next, add references to the Hadoop libraries by clicking Add JARs in the project's Java Build Path settings and selecting the Hadoop JAR files, as follows:


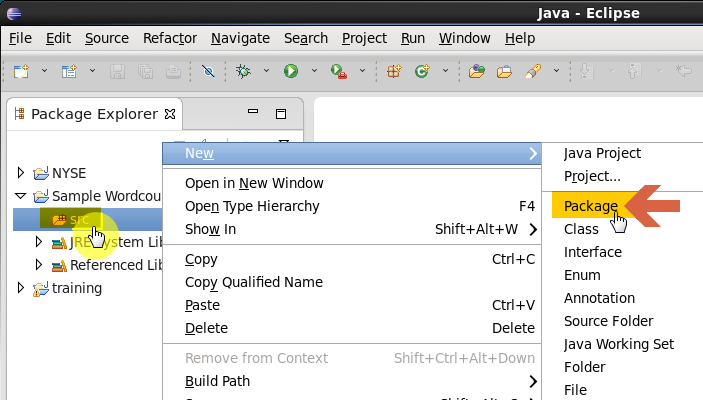

Step 3 -

Create a new package named com.code.dezyre within the project.


Step 4 –

Now let’s implement the WordCount example program by creating a WordCount class in the package com.code.dezyre.

Step 5 -

Inside the WordCount class, create a static nested Mapper class named Map that extends the MapReduceBase class and implements the Mapper interface. The mapper class contains:

1. The "map" method.

2. The mapper-stage business logic, written within this method.

Mapper Class Code for WordCount Example in Hadoop MapReduce

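```java
// WordCount.java: package, imports and the enclosing WordCount class.
// The Map and Reduce classes and main() are all nested inside WordCount;
// the class is closed at the end of the main() code.
package com.code.dezyre;

import java.io.IOException;
import java.util.Iterator;
import java.util.StringTokenizer;

import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapred.*;

public class WordCount {

    // Input: (byte offset of the line, line text); output: (word, 1)
    public static class Map extends MapReduceBase
            implements Mapper<LongWritable, Text, Text, IntWritable> {

        private final static IntWritable one = new IntWritable(1);
        private Text word = new Text();

        public void map(LongWritable key, Text value,
                        OutputCollector<Text, IntWritable> output, Reporter reporter)
                throws IOException {
            String line = value.toString();
            // Split the line on whitespace and emit (word, 1) for every token
            StringTokenizer tokenizer = new StringTokenizer(line);
            while (tokenizer.hasMoreTokens()) {
                word.set(tokenizer.nextToken());
                output.collect(word, one);
            }
        }
    }
```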

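In the mapper code, the StringTokenizer class takes the entire line and breaks it into tokens (words) wherever there is whitespace. With TextInputFormat, map() is called once for each line of the input file, with the line's byte offset as the key and the line's text as the value.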
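To see exactly what the mapper's tokenizer produces, the small stand-alone Java program below runs `StringTokenizer` on the example sentence. The class name `TokenizerDemo` is hypothetical; only JDK classes are used. With its default delimiters (space, tab, newline, carriage return, form feed), `StringTokenizer` keeps case and punctuation exactly as they appear in the input.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.StringTokenizer;

// Shows how the mapper's StringTokenizer splits a line: on whitespace only,
// keeping case and punctuation as they appear in the input.
class TokenizerDemo {

    static List<String> tokens(String line) {
        List<String> result = new ArrayList<>();
        StringTokenizer tokenizer = new StringTokenizer(line); // default delimiters: " \t\n\r\f"
        while (tokenizer.hasMoreTokens()) {
            result.add(tokenizer.nextToken());
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(tokens("An elephant is an animal.")); // [An, elephant, is, an, animal.]
    }
}
```

So with the mapper as written, “An” and “an” (and “animal.” and “animal”) end up as different keys. Lowercasing the line or stripping punctuation inside map() would be needed to get the merged counts used in the earlier illustration.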

Step 6 –

Inside the WordCount class, create a static nested Reducer class named Reduce that extends the MapReduceBase class and implements the Reducer interface. The reducer class for the WordCount example contains:

1. The "reduce" method.

2. The reducer-stage business logic, written within this method.

Reducer Class Code for WordCount Example in Hadoop MapReduce

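```java
    // Input: (word, all of its 1s); output: (word, total count)
    public static class Reduce extends MapReduceBase
            implements Reducer<Text, IntWritable, Text, IntWritable> {

        public void reduce(Text key, Iterator<IntWritable> values,
                           OutputCollector<Text, IntWritable> output, Reporter reporter)
                throws IOException {
            // Add up every count received for this word
            int sum = 0;
            while (values.hasNext()) {
                sum += values.next().get();
            }
            output.collect(key, new IntWritable(sum));
        }
    }
```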

Step 7 –

Create a main() method within the WordCount class and use the JobConf class to set the following properties:

- OutputKeyClass

- OutputValueClass

- Mapper Class

- Reducer Class

- InputFormat

- OutputFormat

- InputFilePath

- OutputFolderPath

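```java
    public static void main(String[] args) throws Exception {
        JobConf conf = new JobConf(WordCount.class);
        conf.setJobName("WordCount");

        // Types of the final output key (word) and value (count)
        conf.setOutputKeyClass(Text.class);
        conf.setOutputValueClass(IntWritable.class);

        conf.setMapperClass(Map.class);
        // Optional: reuse Reduce as a combiner to pre-sum counts on the map side
        //conf.setCombinerClass(Reduce.class);
        conf.setReducerClass(Reduce.class);

        // Read plain text line by line; write one "word<TAB>count" line per key
        conf.setInputFormat(TextInputFormat.class);
        conf.setOutputFormat(TextOutputFormat.class);

        // args[0] = input path, args[1] = output folder (must not exist yet)
        FileInputFormat.setInputPaths(conf, new Path(args[0]));
        FileOutputFormat.setOutputPath(conf, new Path(args[1]));

        // Submit the job and wait for it to finish
        JobClient.runJob(conf);
    }

} // end of class WordCount
```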
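The commented-out `conf.setCombinerClass(Reduce.class)` line works because summing is associative and commutative: pre-summing each map task's output locally gives the same final counts, while sending fewer pairs through the shuffle. The plain-Java sketch below illustrates that reasoning; it is not Hadoop code, and the class name `CombinerSketch` and the two input splits are hypothetical.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Sketch of why Reduce can double as a combiner (plain Java, not Hadoop):
// summing partial counts per split and then summing the partials
// gives the same totals as summing every (word, 1) pair at once.
class CombinerSketch {

    // Map + local combine for one input split: emits (word, partialCount)
    static Map<String, Integer> mapAndCombine(List<String> splitLines) {
        Map<String, Integer> partial = new HashMap<>();
        for (String line : splitLines) {
            StringTokenizer tokenizer = new StringTokenizer(line);
            while (tokenizer.hasMoreTokens()) {
                partial.merge(tokenizer.nextToken(), 1, Integer::sum);
            }
        }
        return partial;
    }

    // Final reduce: add up the partial counts coming from every split
    static Map<String, Integer> reduce(List<Map<String, Integer>> partials) {
        Map<String, Integer> totals = new TreeMap<>();
        for (Map<String, Integer> partial : partials) {
            partial.forEach((word, count) -> totals.merge(word, count, Integer::sum));
        }
        return totals;
    }

    public static void main(String[] args) {
        // Two hypothetical input splits, each handled by its own map task
        Map<String, Integer> split1 = mapAndCombine(List.of("an elephant is an animal"));
        Map<String, Integer> split2 = mapAndCombine(List.of("an animal is big"));
        System.out.println(reduce(List.of(split1, split2))); // {an=3, animal=2, big=1, elephant=1, is=2}
    }
}
```

Here the first split sends (an,2) once instead of (an,1) twice; the final totals are unchanged.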

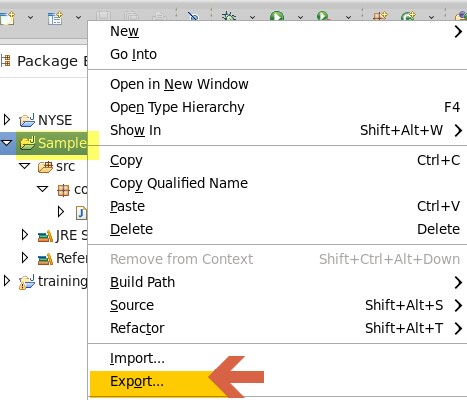

Step 8 –

Export the project as a JAR file containing the WordCount class (in Eclipse: File > Export > Java > JAR file).

How to execute the Hadoop MapReduce WordCount program?

The general syntax is:

```
hadoop jar (jar file name) (className_along_with_packageName) (input file) (output folderpath)
```

For this example:

```
hadoop jar dezyre_wordcount.jar com.code.dezyre.WordCount /user/cloudera/Input/war_and_peace /user/cloudera/Output
```

Important Note: war_and_peace must be available in HDFS at /user/cloudera/Input/war_and_peace. The output folder /user/cloudera/Output must not exist yet, because Hadoop refuses to write to an existing output directory.

If the input file is not in HDFS yet, upload it with the following commands:

```
hadoop fs -mkdir /user/cloudera/Input
hadoop fs -put war_and_peace /user/cloudera/Input/war_and_peace
```

Output of Executing Hadoop WordCount Example –

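The program was run with the War and Peace input file. The results are written to the output folder as text files (for example part-00000), where each line holds a word and its count separated by a tab; you can print them with hadoop fs -cat /user/cloudera/Output/part-00000. To get the War and Peace dataset along with the WordCount example code by email, write to khushbu@dezyre.com.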
